Valid DP-203 dumps shared by PassLeader to help you pass the DP-203 exam. PassLeader now offers the newest DP-203 VCE and PDF dumps. The PassLeader DP-203 exam questions have been updated and the answers have been corrected. Get the newest PassLeader DP-203 dumps with VCE and PDF here: https://www.passleader.com/dp-203.html (246 Q&As Dumps –> 397 Q&As Dumps –> 428 Q&As Dumps)

You can also download part of the PassLeader DP-203 dumps from cloud storage: https://drive.google.com/drive/folders/1wVv0mD76twXncB9uqhbqcNPWhkOeJY0s

NEW QUESTION 230

You have an Azure Data Lake Storage account that contains a staging zone. You need to design a daily process to ingest incremental data from the staging zone, transform the data by executing an R script, and then insert the transformed data into a data warehouse in Azure Synapse Analytics.

Solution: You schedule an Azure Databricks job that executes an R notebook, and then inserts the data into the data warehouse.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

The solution must use Azure Data Factory, not an Azure Databricks job.

https://docs.microsoft.com/en-US/azure/data-factory/transform-data

NEW QUESTION 231

A company is planning on creating an Azure SQL database to support a mission critical application. The application needs to be highly available and not have any performance degradation during maintenance windows. Which of the following technologies can be used to implement this solution? (Choose three.)

A. Premium Service Tier

B. Virtual Machine Scale Sets

C. Basic Service Tier

D. SQL Data Sync

E. Always On Availability Groups

F. Zone-redundant configuration

Answer: AEF

NEW QUESTION 232

A company has a SaaS solution that uses Azure SQL Database with elastic pools. The solution contains a dedicated database for each customer organization. Customer organizations have peak usage at different periods during the year. You need to implement the Azure SQL Database elastic pool to minimize cost. Which option or options should you configure?

A. Number of transactions only.

B. eDTUs per database only.

C. Number of databases only.

D. CPU usage only.

E. eDTUs and max data size.

Answer: E
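The cost argument behind pooling can be illustrated with a small sketch. It assumes made-up eDTU usage figures for three hypothetical customer databases whose peaks fall in different periods; the names and numbers are illustrative only and do not come from Azure.

```python
def sum_of_peaks(usage_by_db):
    """Capacity needed if every database is sized for its own peak."""
    return sum(max(series) for series in usage_by_db.values())


def pooled_peak(usage_by_db):
    """Capacity needed if all databases share one pool.

    zip() lines up the same time period across all databases, so the
    pool only has to cover the busiest combined period.
    """
    return max(sum(period) for period in zip(*usage_by_db.values()))


# Hypothetical eDTU usage per period; each customer peaks at a different time.
usage = {
    "customer_a": [80, 10, 10, 10],
    "customer_b": [10, 80, 10, 10],
    "customer_c": [10, 10, 80, 10],
}

print(sum_of_peaks(usage))  # 240
print(pooled_peak(usage))   # 100
```

Because the peaks do not overlap, the shared pool needs far less capacity than three individually sized databases, which is why the pool-level eDTU and size settings drive the cost.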

NEW QUESTION 233

An in-house team is developing a new application. The design document specifies that data should be represented using nodes and relationships in graph structures. Individual data elements are relatively small. You need to recommend an appropriate data storage solution. Which solution should you recommend?

A. Azure Storage Blobs

B. Cosmos DB

C. Azure Data Lake Store

D. HBase in HDInsight

Answer: B

NEW QUESTION 234

Which offering provides scale-out parallel processing and dramatically accelerates performance of analytics clusters when integrated with the IBM Flash System?

A. IBM Cloud Object Storage

B. IBM Spectrum Accelerate

C. IBM Spectrum Scale

D. IBM Spectrum Connect

Answer: C

NEW QUESTION 235

A company manages several on-premises Microsoft SQL Server databases. You need to migrate the databases to Microsoft Azure by using a backup process of Microsoft SQL Server. Which data technology should you use?

A. Azure SQL Database single database.

B. Azure SQL Data Warehouse.

C. Azure Cosmos DB.

D. Azure SQL Database Managed Instance.

E. HDInsight Spark cluster.

Answer: D

NEW QUESTION 236

The data engineering team manages Azure HDInsight clusters. The team spends a large amount of time creating and destroying clusters daily because most of the data pipeline process runs in minutes. You need to implement a solution that deploys multiple HDInsight clusters with minimal effort. What should you implement?

A. Azure Databricks.

B. Azure Traffic Manager.

C. Azure Resource Manager templates.

D. Ambari web user interface.

Answer: C

NEW QUESTION 237

You have an Azure Synapse Analytics dedicated SQL pool named Pool1 and a database named DB1. DB1 contains a fact table named Table1. You need to identify the extent of the data skew in Table1. What should you do in Synapse Studio?

A. Connect to the built-in pool and query sys.dm_pdw_sys_info.

B. Connect to Pool1 and run DBCC CHECKALLOC.

C. Connect to the built-in pool and run DBCC CHECKALLOC.

D. Connect to Pool1 and query sys.dm_pdw_nodes_db_partition_stats.

Answer: D

Explanation:

Microsoft recommends using sys.dm_pdw_nodes_db_partition_stats to analyze data skew.

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/cheat-sheet
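Once per-distribution row counts are available, the extent of the skew is simple arithmetic. The sketch below assumes the counts have already been collected into a list; the `skew_percent` helper and the sample numbers are hypothetical, and this is only one reasonable way to express skew.

```python
def skew_percent(rows_per_distribution):
    """Return how far the largest distribution exceeds the average, in percent."""
    if not rows_per_distribution:
        raise ValueError("need at least one distribution")
    average = sum(rows_per_distribution) / len(rows_per_distribution)
    if average == 0:
        return 0.0
    return (max(rows_per_distribution) - average) / average * 100.0


# Hypothetical row counts for four distributions (a dedicated SQL pool has 60).
even = [1500, 1500, 1500, 1500]
skewed = [3000, 1000, 1000, 1000]

print(skew_percent(even))    # 0.0
print(skew_percent(skewed))  # 100.0
```

A value near zero means the rows are spread evenly; a large value means one distribution holds much more data than the rest and will tend to slow down queries.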

NEW QUESTION 238

You have an Azure subscription that contains an Azure Blob Storage account named storage1 and an Azure Synapse Analytics dedicated SQL pool named Pool1. You need to store data in storage1. The data will be read by Pool1. The solution must meet the following requirements:

– Enable Pool1 to skip columns and rows that are unnecessary in a query.

– Automatically create column statistics.

– Minimize the size of files.

Which type of file should you use?

A. JSON

B. Parquet

C. Avro

D. CSV

Answer: B

Explanation:

Automatic creation of statistics is turned on for Parquet files. For CSV files, you need to create statistics manually until automatic creation of statistics for CSV files is supported.

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-tables-statistics

NEW QUESTION 239

You have an enterprise data warehouse in Azure Synapse Analytics named DW1 on a server named Server1. You need to determine the size of the transaction log file for each distribution of DW1. What should you do?

A. On DW1, execute a query against the sys.database_files dynamic management view.

B. From Azure Monitor in the Azure portal, execute a query against the logs of DW1.

C. Execute a query against the logs of DW1 by using the Get-AzOperationalInsightsSearchResult PowerShell cmdlet.

D. On the master database, execute a query against the sys.dm_pdw_nodes_os_performance_counters dynamic management view.

Answer: A

Explanation:

For information about the current log file size, its maximum size, and the autogrow option for the file, you can also use the size, max_size, and growth columns for that log file in sys.database_files.

https://docs.microsoft.com/en-us/sql/relational-databases/logs/manage-the-size-of-the-transaction-log-file

NEW QUESTION 240

You are designing an anomaly detection solution for streaming data from an Azure IoT hub. The solution must meet the following requirements:

– Send the output to Azure Synapse.

– Identify spikes and dips in time series data.

– Minimize development and configuration effort.

Which should you include in the solution?

A. Azure Databricks

B. Azure Stream Analytics

C. Azure SQL Database

Answer: B

Explanation:

You can identify anomalies by routing data via IoT Hub to a built-in ML model in Azure Stream Analytics.

https://docs.microsoft.com/en-us/learn/modules/data-anomaly-detection-using-azure-iot-hub/
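To make "spikes and dips" concrete, here is a minimal sketch of the idea using a rolling z-score. It is not the model that Stream Analytics uses internally (the service exposes this capability through its built-in `AnomalyDetection_SpikeAndDip` function); the `spikes_and_dips` helper, window size, threshold, and readings are all assumptions for illustration.

```python
from statistics import mean, pstdev


def spikes_and_dips(values, window=5, threshold=3.0):
    """Flag points that deviate strongly from the preceding window of values."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = pstdev(history)
        if sigma == 0:
            # A perfectly flat history: any change at all counts as a deviation.
            deviates = values[i] != mu
        else:
            deviates = abs(values[i] - mu) / sigma > threshold
        if deviates:
            flagged.append((i, "spike" if values[i] > mu else "dip"))
    return flagged


# Hypothetical sensor readings with one spike (60) and one dip (2).
readings = [20, 21, 20, 19, 20, 60, 20, 21, 20, 19, 20, 2, 20]
print(spikes_and_dips(readings))  # [(5, 'spike'), (11, 'dip')]
```

Writing and tuning logic like this by hand is the development effort that the built-in function avoids, which is what the "minimize development and configuration effort" requirement points to.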

NEW QUESTION 241

A company uses Azure Stream Analytics to monitor devices. The company plans to double the number of devices that are monitored. You need to monitor a Stream Analytics job to ensure that there are enough processing resources to handle the additional load. Which metric should you monitor?

A. Early Input Events

B. Late Input Events

C. Watermark Delay

D. Input Deserialization Errors

Answer: C

Explanation:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-time-handling

NEW QUESTION 242

You have an activity in an Azure Data Factory pipeline. The activity calls a stored procedure in a data warehouse in Azure Synapse Analytics and runs daily. You need to verify the duration of the activity when it ran last. What should you use?

A. activity runs in Azure Monitor

B. activity log in Azure Synapse Analytics

C. the sys.dm_pdw_wait_stats dynamic management view in Azure Synapse Analytics

D. an Azure Resource Manager template

Answer: A

Explanation:

https://docs.microsoft.com/en-us/azure/data-factory/monitor-visually

NEW QUESTION 243

You are designing a database for an Azure Synapse Analytics dedicated SQL pool to support workloads for detecting ecommerce transaction fraud. Data will be combined from multiple ecommerce sites and can include sensitive financial information such as credit card numbers. You need to recommend a solution that meets the following requirements:

– Users must be able to identify potentially fraudulent transactions.

– Users must be able to use credit cards as a potential feature in models.

– Users must NOT be able to access the actual credit card numbers.

What should you include in the recommendation?

A. Transparent Data Encryption (TDE)

B. row-level security

C. column-level encryption

D. Azure Active Directory (Azure AD) pass-through authentication

Answer: C

Explanation:

Use Always Encrypted to secure the required columns. You can configure Always Encrypted for individual database columns containing your sensitive data. Always Encrypted is a feature designed to protect sensitive data, such as credit card numbers or national identification numbers (for example, U.S. social security numbers), stored in Azure SQL Database or SQL Server databases.

https://docs.microsoft.com/en-us/sql/relational-databases/security/encryption/always-encrypted-database-engine

NEW QUESTION 244

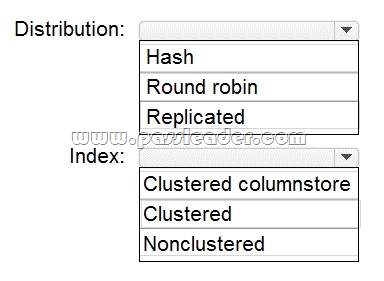

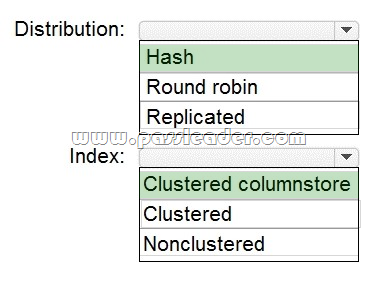

HotSpot

You are designing an enterprise data warehouse in Azure Synapse Analytics that will store website traffic analytics in a star schema. You plan to have a fact table for website visits. The table will be approximately 5 GB. You need to recommend which distribution type and index type to use for the table. The solution must provide the fastest query performance. What should you recommend? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

Box 1: Hash. Consider using a hash-distributed table when:

– The table size on disk is more than 2 GB.

– The table has frequent insert, update, and delete operations.

Box 2: Clustered columnstore. Clustered columnstore tables offer both the highest level of data compression and the best overall query performance.

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-distribute

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-index
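The idea behind hash distribution can be sketched in a few lines. A dedicated SQL pool spreads table data across 60 distributions; the sketch below assumes SHA-256 as a stand-in hash (the service's actual hash function is not shown here), and the helper names and visit keys are hypothetical.

```python
import hashlib
from collections import Counter

DISTRIBUTIONS = 60  # a dedicated SQL pool always uses 60 distributions


def distribution_for(key):
    """Map a distribution-column value to one of the 60 distributions.

    SHA-256 is an assumption for illustration, not the hash Synapse uses.
    """
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % DISTRIBUTIONS


def rows_per_distribution(keys):
    """Count how many rows land in each distribution."""
    return Counter(distribution_for(k) for k in keys)


# Hypothetical visit IDs for the fact table.
visit_ids = range(10_000)
counts = rows_per_distribution(visit_ids)
print(len(counts), min(counts.values()), max(counts.values()))
```

The same key always maps to the same distribution, so a column with many distinct, evenly used values spreads rows across all distributions and lets queries run in parallel.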

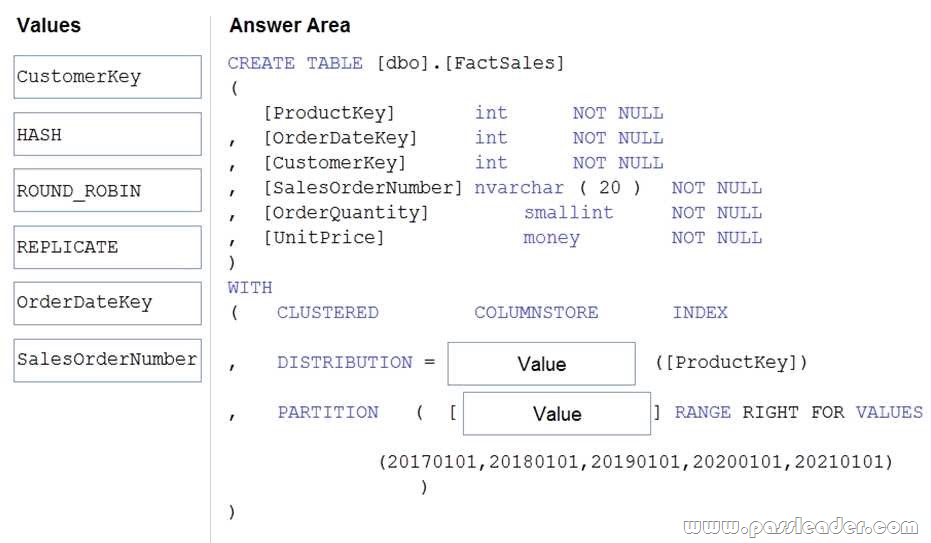

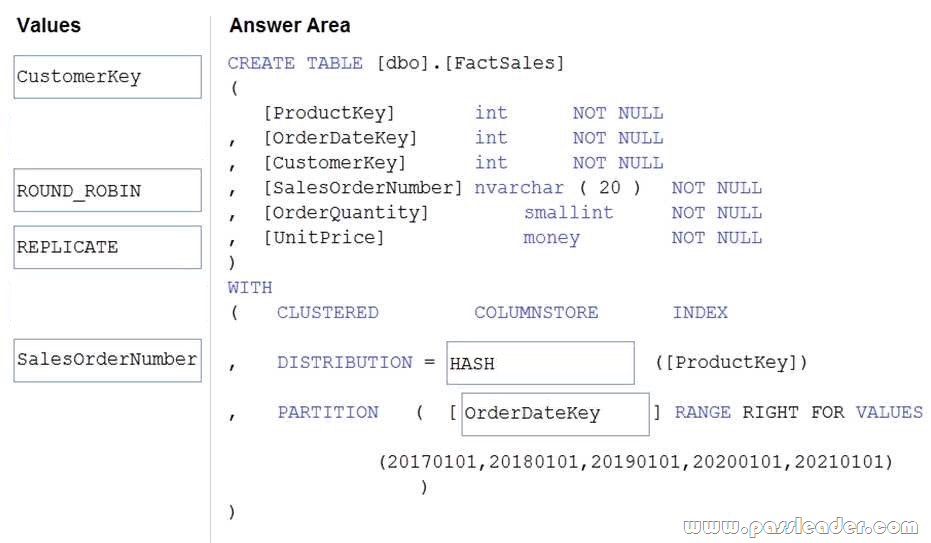

NEW QUESTION 245

Drag and Drop

You plan to create a table in an Azure Synapse Analytics dedicated SQL pool. Data in the table will be retained for five years. Once a year, data that is older than five years will be deleted. You need to ensure that the data is distributed evenly across partitions. The solution must minimize the amount of time required to delete old data. How should you complete the Transact-SQL statement? (To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.)

Answer:

Explanation:

Box 2: OrderDateKey. In most cases, table partitions are created on a date column. A way to eliminate rollbacks is to use Metadata Only operations like partition switching for data management. For example, rather than execute a DELETE statement to delete all rows in a table where the order_date was in October of 2001, you could partition your data early. Then you can switch out the partition with data for an empty partition from another table.

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-table-azure-sql-data-warehouse

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/best-practices-dedicated-sql-pool
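The benefit of partitioning on a date column can be sketched with an in-memory analogy. The `PartitionedTable` class below is hypothetical and assumes integer date keys in yyyymmdd form; it only illustrates why removing a whole partition is cheaper than deleting rows one by one, and it is not how the SQL pool is implemented.

```python
from collections import defaultdict


class PartitionedTable:
    """Toy table that groups rows into one partition per year."""

    def __init__(self):
        self.partitions = defaultdict(list)  # year -> list of rows

    def insert(self, order_date_key, row):
        # Assumes yyyymmdd integer keys, e.g. 20210301.
        year = order_date_key // 10000
        self.partitions[year].append(row)

    def drop_older_than(self, cutoff_year):
        """Remove whole partitions before cutoff_year; return rows removed."""
        old_years = [year for year in self.partitions if year < cutoff_year]
        removed = 0
        for year in old_years:
            # One operation per partition, regardless of how many rows it holds.
            removed += len(self.partitions.pop(year))
        return removed


table = PartitionedTable()
table.insert(20150101, "order-1")
table.insert(20160615, "order-2")
table.insert(20210301, "order-3")

print(table.drop_older_than(2016))  # 1
print(sorted(table.partitions))     # [2016, 2021]
```

With yearly partitions on the date key, the annual cleanup touches one partition per expired year instead of scanning and deleting individual rows.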

NEW QUESTION 246

……
