Valid DP-100 dumps shared by PassLeader to help you pass the DP-100 exam! PassLeader now offers the newest DP-100 VCE dumps and DP-100 PDF dumps. The PassLeader DP-100 exam questions have been updated and the answers have been corrected. Get the newest PassLeader DP-100 dumps with VCE and PDF here: https://www.passleader.com/dp-100.html (315 Q&As Dumps)

BTW, DOWNLOAD part of PassLeader DP-100 dumps from Cloud Storage: https://drive.google.com/open?id=1f70QWrCCtvNby8oY6BYvrMS16IXuRiR2

NEW QUESTION 301

You use Azure Machine Learning to train a model based on a dataset named dataset1. You define a dataset monitor and create a dataset named dataset2 that contains new data. You need to compare dataset1 and dataset2 by using the Azure Machine Learning SDK for Python. Which method of the DataDriftDetector class should you use?

A. run

B. get

C. backfill

D. update

Answer: C

Explanation:

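A backfill run executes the dataset monitor over a specified past date range, comparing the target dataset (dataset2) against the baseline dataset (dataset1). It is used to see how data changes over time.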

https://docs.microsoft.com/en-us/python/api/azureml-datadrift/azureml.datadrift.datadriftdetector.datadriftdetector
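A minimal sketch of a backfill call, assuming the azureml-datadrift package is installed and that dataset2 is registered with a timestamp column. The monitor name, compute target name, weekly frequency, and six-week window are hypothetical values chosen for illustration:

```python
from datetime import datetime, timedelta


def backfill_window(weeks, now=None):
    """Return (start, end) datetimes covering the last `weeks` weeks."""
    end = now or datetime.today()
    return end - timedelta(weeks=weeks), end


def run_backfill(workspace, baseline_dataset, target_dataset):
    """Create a dataset monitor and backfill it over a recent window."""
    # Imported lazily so backfill_window() works without the SDK installed.
    from azureml.datadrift import DataDriftDetector

    # "dataset-drift-monitor" and "cpu-cluster" are hypothetical names.
    monitor = DataDriftDetector.create_from_datasets(
        workspace,
        "dataset-drift-monitor",
        baseline_dataset,  # dataset1: the data the model was trained on
        target_dataset,    # dataset2: the new data (needs a timestamp column)
        compute_target="cpu-cluster",
        frequency="Week",
    )
    start, end = backfill_window(weeks=6)
    # backfill() compares target against baseline over the given date range.
    return monitor.backfill(start, end)
```

The date-window helper is kept separate so the range can be checked without a workspace.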

NEW QUESTION 302

You use an Azure Machine Learning workspace. You have a trained model that must be deployed as a web service. Users must authenticate by using Azure Active Directory. What should you do?

A. Deploy the model to Azure Kubernetes Service (AKS). During deployment, set the token_auth_enabled parameter of the target configuration object to true.

B. Deploy the model to Azure Container Instances. During deployment, set the auth_enabled parameter of the target configuration object to true.

C. Deploy the model to Azure Container Instances. During deployment, set the token_auth_enabled parameter of the target configuration object to true.

D. Deploy the model to Azure Kubernetes Service (AKS). During deployment, set the auth.enabled parameter of the target configuration object to true.

Answer: A

Explanation:

To control token authentication, use the token_auth_enabled parameter when you create or update a deployment. Token authentication is disabled by default when you deploy to Azure Kubernetes Service.

Note: The model deployments created by Azure Machine Learning can be configured to use one of two authentication methods:

– key-based: A static key is used to authenticate to the web service.

– token-based: A temporary token must be obtained from the Azure Machine Learning workspace (using Azure Active Directory) and used to authenticate to the web service.

Incorrect:

Not C: Token authentication isn’t supported when you deploy to Azure Container Instances.

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-authenticate-web-service
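As an illustration, the deployment configuration for option A might look like the sketch below. It assumes the azureml-core package is installed; the cpu_cores and memory_gb values are placeholders, not values from the question:

```python
# Keyword arguments for the AKS deployment configuration.
# cpu_cores and memory_gb are placeholder sizes chosen for illustration.
AKS_DEPLOY_KWARGS = {
    "cpu_cores": 1,
    "memory_gb": 1,
    "token_auth_enabled": True,  # token-based (Azure AD) authentication
}


def build_aks_deployment_config():
    """Return an AKS deployment configuration with token auth enabled."""
    # Imported lazily so the settings above can be inspected without the SDK.
    from azureml.core.webservice import AksWebservice

    return AksWebservice.deploy_configuration(**AKS_DEPLOY_KWARGS)
```

The resulting object would then be passed as the deployment configuration when deploying the model to an AKS target.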

NEW QUESTION 303

You have a Jupyter Notebook that contains Python code that is used to train a model. You must create a Python script for the production deployment. The solution must minimize code maintenance. Which two actions should you perform? (Each correct answer presents part of the solution. Choose two.)

A. Refactor the Jupyter Notebook code into functions.

B. Save each function to a separate Python file.

C. Define a main() function in the Python script.

D. Remove all comments and functions from the Python script.

Answer: AC

Explanation:

A: Refactoring, code style, and testing. The first step is to modularise the notebook into a reasonable folder structure. This effectively means converting files from .ipynb format to .py format, ensuring each script has a clear, distinct purpose, and organising these files in a coherent way. Once the project is nicely structured, the code can be tidied up and refactored into functions.

C: A main() function gives the script a single, explicit entry point. The Python interpreter runs a file's top-level code sequentially, so the call to main() is normally placed under an if __name__ == "__main__" guard. main() then runs only when the file is executed as a Python program, not when it is imported as a module.

https://www.guru99.com/learn-python-main-function-with-examples-understand-main.html

https://towardsdatascience.com/from-jupyter-notebook-to-deployment-a-straightforward-example-1838c203a437
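The two actions can be sketched as follows. The data and the model here are hypothetical stand-ins for whatever the notebook cells did; only the structure (functions plus a guarded main()) is the point:

```python
def load_data(n_rows):
    """Stand-in for the notebook's data-loading cell (synthetic y = 2x + 1)."""
    return [(float(x), 2.0 * x + 1.0) for x in range(n_rows)]


def train_model(rows):
    """Stand-in for the training cell: fit y = a*x + b by least squares."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in rows)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


def main():
    """Single entry point that wires the refactored functions together."""
    rows = load_data(10)
    slope, intercept = train_model(rows)
    print(f"slope={slope:.2f} intercept={intercept:.2f}")
    return slope, intercept


if __name__ == "__main__":
    # Runs only when the file is executed as a script, not when imported.
    main()
```

Because each step is a function, a test suite or another script can import and reuse load_data() or train_model() without triggering a training run.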

NEW QUESTION 304

You train and register a machine learning model. You create a batch inference pipeline that uses the model to generate predictions from multiple data files. You must publish the batch inference pipeline as a service that can be scheduled to run every night. You need to select an appropriate compute target for the inference service. Which compute target should you use?

A. Azure Machine Learning compute instance.

B. Azure Machine Learning compute cluster.

C. Azure Kubernetes Service (AKS)-based inference cluster.

D. Azure Container Instance (ACI) compute target.

Answer: B

Explanation:

An Azure Machine Learning compute cluster is used for batch inference. It runs batch scoring on serverless compute, supports normal and low-priority VMs, and does not support real-time inference.

https://docs.microsoft.com/en-us/azure/machine-learning/concept-compute-target
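A hedged sketch of provisioning such a cluster with the Azure Machine Learning SDK for Python (azureml-core assumed installed). The cluster name, VM size, and node counts are hypothetical:

```python
# Hypothetical cluster settings: scale to zero when idle, low-priority VMs.
CLUSTER_SETTINGS = {
    "vm_size": "STANDARD_DS3_V2",
    "vm_priority": "lowpriority",
    "min_nodes": 0,
    "max_nodes": 4,
}


def get_or_create_cluster(workspace, name="batch-cluster"):
    """Return the named compute cluster, creating it if it does not exist."""
    # Imported lazily so the settings above can be inspected without the SDK.
    from azureml.core.compute import AmlCompute, ComputeTarget
    from azureml.core.compute_target import ComputeTargetException

    try:
        return ComputeTarget(workspace=workspace, name=name)
    except ComputeTargetException:
        config = AmlCompute.provisioning_configuration(**CLUSTER_SETTINGS)
        cluster = ComputeTarget.create(workspace, name, config)
        cluster.wait_for_completion(show_output=True)
        return cluster
```

Setting min_nodes to 0 lets the cluster scale down between nightly runs, which suits a scheduled batch workload.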

NEW QUESTION 305

You use the Azure Machine Learning designer to create and run a training pipeline. The pipeline must be run every night to inference predictions from a large volume of files. The folder where the files will be stored is defined as a dataset. You need to publish the pipeline as a REST service that can be used for the nightly inferencing run. What should you do?

A. Create a batch inference pipeline.

B. Set the compute target for the pipeline to an inference cluster.

C. Create a real-time inference pipeline.

D. Clone the pipeline.

Answer: A

Explanation:

Azure Machine Learning Batch Inference targets large inference jobs that are not time-sensitive. Batch Inference provides cost-effective inference compute scaling, with unparalleled throughput for asynchronous applications. It is optimized for high-throughput, fire-and-forget inference over large collections of data. You can submit a batch inference job by pipeline_run, or through REST calls with a published pipeline.

https://github.com/Azure/MachineLearningNotebooks/blob/master/how-to-use-azureml/machine-learning-pipelines/parallel-run/README.md
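For illustration, a REST call to a published pipeline could be prepared as sketched below, using only the standard library. The endpoint URL, token, and experiment name are placeholders; the request is built but not sent:

```python
import json
import urllib.request


def build_pipeline_run_request(rest_endpoint, auth_header, experiment_name,
                               parameters=None):
    """Build (but do not send) a POST request that triggers a pipeline run."""
    body = {"ExperimentName": experiment_name}
    if parameters:
        # Optional pipeline parameter overrides for this run.
        body["ParameterAssignments"] = parameters
    headers = dict(auth_header)
    headers["Content-Type"] = "application/json"
    return urllib.request.Request(
        rest_endpoint,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Placeholder endpoint and token; real values come from the published
# pipeline's endpoint and an Azure AD authentication header.
request = build_pipeline_run_request(
    "https://example.invalid/pipelines/PIPELINE_ID",
    {"Authorization": "Bearer TOKEN"},
    "nightly-batch-inference",
)
```

Passing the request to urllib.request.urlopen would submit the run; a scheduler can issue the same call every night.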

NEW QUESTION 306

You create a binary classification model. The model is registered in an Azure Machine Learning workspace. You use the Azure Machine Learning Fairness SDK to assess the model fairness. You develop a training script for the model on a local machine. You need to load the model fairness metrics into Azure Machine Learning studio. What should you do?

A. Implement the download_dashboard_by_upload_id function.

B. Implement the create_group_metric_set function.

C. Implement the upload_dashboard_dictionary function.

D. Upload the training script.

Answer: C

Explanation:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-machine-learning-fairness-aml
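A rough sketch of the upload step, assuming the azureml-contrib-fairness package is installed, that run is an active experiment run, and that dash_dict is a fairness dashboard dictionary already computed from the model's predictions and sensitive features. The wrapper name and dashboard title are hypothetical:

```python
def upload_fairness_dashboard(run, dash_dict, title="Fairness insights"):
    """Upload precomputed fairness metrics so they appear in the studio."""
    # Imported lazily; requires the azureml-contrib-fairness package.
    from azureml.contrib.fairness import upload_dashboard_dictionary

    # Returns an upload id that identifies the dashboard on the run.
    return upload_dashboard_dictionary(run, dash_dict, dashboard_name=title)
```

The returned upload id is what a later download of the same dashboard would refer to.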

NEW QUESTION 307

You have a dataset that includes confidential data. You use the dataset to train a model. You must use a differential privacy parameter to keep the data of individuals safe and private. You need to reduce the effect of user data on aggregated results. What should you do?

A. Decrease the value of the epsilon parameter to reduce the amount of noise added to the data.

B. Increase the value of the epsilon parameter to decrease privacy and increase accuracy.

C. Decrease the value of the epsilon parameter to increase privacy and reduce accuracy.

D. Set the value of the epsilon parameter to 1 to ensure maximum privacy.

Answer: C

Explanation:

Differential privacy tries to protect against the possibility that a user can produce an indefinite number of reports to eventually reveal sensitive data. A value known as epsilon measures how noisy, or private, a report is. Epsilon has an inverse relationship to noise or privacy: the lower the epsilon, the noisier (and more private) the data is. Decreasing epsilon therefore reduces the effect of any individual's data on the aggregated results, at the cost of accuracy.

https://docs.microsoft.com/en-us/azure/machine-learning/concept-differential-privacy
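The inverse relationship can be illustrated with the Laplace mechanism, a common way to add differentially private noise to a count. This is an assumption for illustration only; the source does not say which mechanism is used:

```python
import random


def laplace_scale(sensitivity, epsilon):
    """Noise scale of the Laplace mechanism: sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sensitivity / epsilon


def noisy_count(true_count, epsilon, sensitivity=1.0, rng=random):
    """Return the count plus Laplace noise scaled by sensitivity / epsilon."""
    scale = laplace_scale(sensitivity, epsilon)
    # The difference of two independent Exp(1) draws is Laplace(0, 1).
    noise = scale * (rng.expovariate(1.0) - rng.expovariate(1.0))
    return true_count + noise


# Lower epsilon gives a larger noise scale: more privacy, less accuracy.
for eps in (1.0, 0.5, 0.1):
    print(eps, laplace_scale(1.0, eps))
```

With a sensitivity of 1, dropping epsilon from 1.0 to 0.1 makes the noise scale ten times larger, so a single individual's record has far less visible effect on the reported aggregate.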

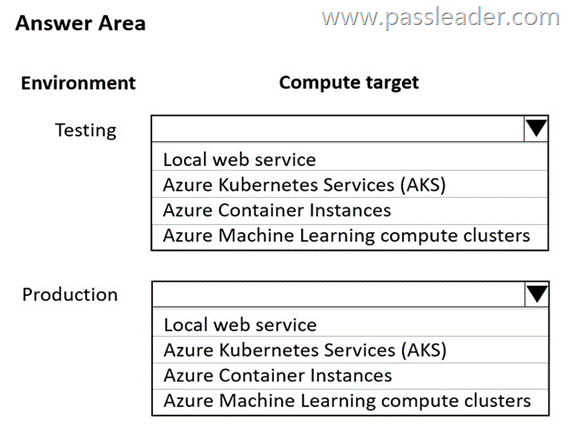

NEW QUESTION 308

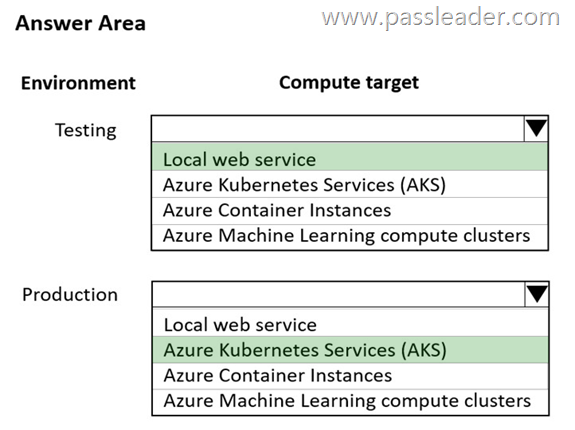

HotSpot

You are using an Azure Machine Learning workspace. You set up an environment for model testing and an environment for production. The compute target for testing must minimize cost and deployment efforts. The compute target for production must provide fast response time, autoscaling of the deployed service, and support real-time inferencing. You need to configure compute targets for model testing and production. Which compute targets should you use? (To answer, select the appropriate options in the answer area.)

Explanation:

Box 1: Local web service. The Local web service compute target is used for testing/debugging. Use it for limited testing and troubleshooting. Hardware acceleration depends on use of libraries in the local system.

Box 2: Azure Kubernetes Service (AKS). Azure Kubernetes Service (AKS) is used for Real-time inference. Recommended for production workloads. Use it for high-scale production deployments. Provides fast response time and autoscaling of the deployed service.

https://docs.microsoft.com/en-us/azure/machine-learning/concept-compute-target
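A sketch of the two deployment configurations, assuming azureml-core is installed. The port, replica counts, and resource sizes are hypothetical values:

```python
# Hypothetical settings for the two environments.
TEST_CONFIG = {"port": 8890}  # local web service for testing and debugging
PROD_CONFIG = {
    "autoscale_enabled": True,   # AKS scales replicas with load
    "autoscale_min_replicas": 1,
    "autoscale_max_replicas": 4,
    "cpu_cores": 1,
    "memory_gb": 1,
}


def build_deployment_configs():
    """Return (test_config, production_config) deployment configurations."""
    # Imported lazily so the settings above can be inspected without the SDK.
    from azureml.core.webservice import AksWebservice, LocalWebservice

    test_config = LocalWebservice.deploy_configuration(**TEST_CONFIG)
    prod_config = AksWebservice.deploy_configuration(**PROD_CONFIG)
    return test_config, prod_config
```

The local configuration needs no cloud compute, while the AKS configuration carries the autoscaling settings required for production.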

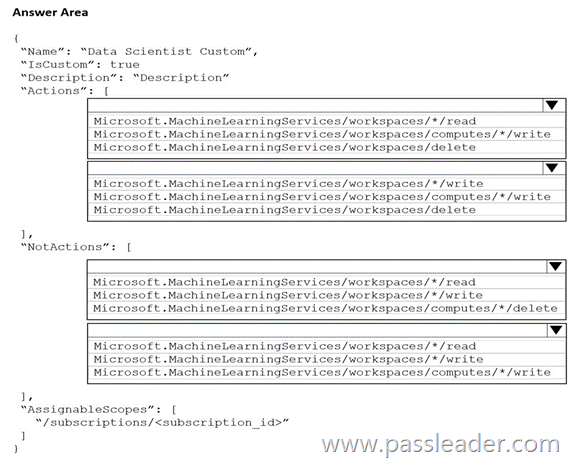

NEW QUESTION 309

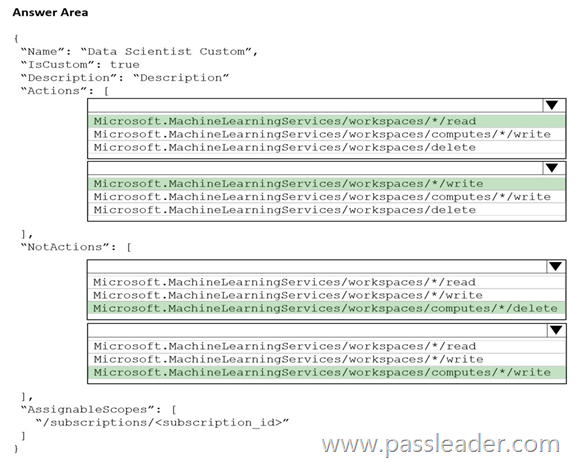

HotSpot

You are the owner of an Azure Machine Learning workspace. You must prevent the creation or deletion of compute resources by using a custom role. You must allow all other operations inside the workspace. You need to configure the custom role. How should you complete the configuration? (To answer, select the appropriate options in the answer area.)

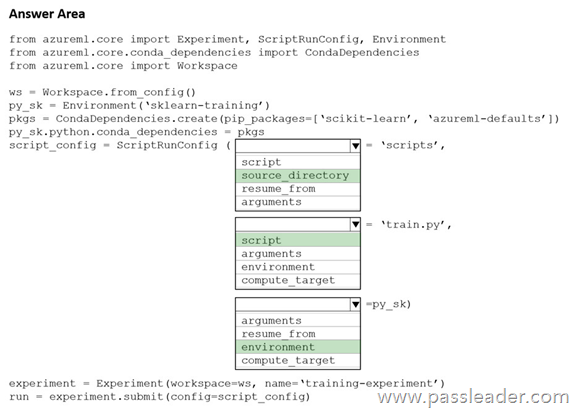

NEW QUESTION 310

HotSpot

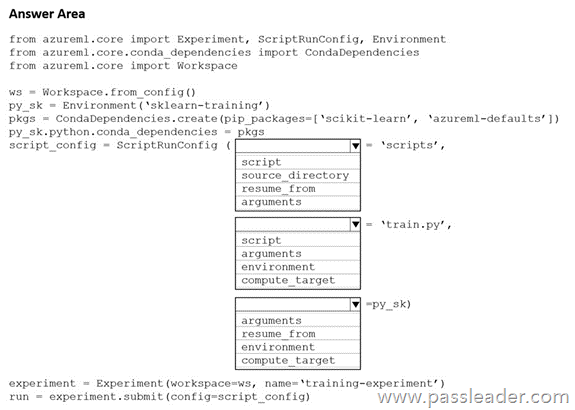

You create a Python script named train.py and save it in a folder named scripts. The script uses the scikit-learn framework to train a machine learning model. You must run the script as an Azure Machine Learning experiment on your local workstation. You need to write Python code to initiate an experiment that runs the train.py script. How should you complete the code segment? (To answer, select the appropriate options in the answer area.)

Explanation:

Box 1: source_directory. source_directory: A local directory containing the code files needed for a run.

Box 2: script. script: The file path, relative to source_directory, of the script to be run.

Box 3: environment. environment: The environment in which the script runs.

https://docs.microsoft.com/en-us/python/api/azureml-core/azureml.core.scriptrunconfig
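Since the code segment from the question is not reproduced here, the following is only a sketch of how the three parameters fit together, assuming azureml-core is installed. The environment and experiment names are hypothetical:

```python
SOURCE_DIRECTORY = "scripts"  # folder that contains the training script
SCRIPT = "train.py"           # path relative to SOURCE_DIRECTORY


def submit_local_experiment(workspace, experiment_name="training-experiment"):
    """Run train.py as an experiment on the local workstation."""
    # Imported lazily so the constants above can be inspected without the SDK.
    from azureml.core import Environment, Experiment, ScriptRunConfig

    # "sklearn-local-env" is a hypothetical name. With user-managed
    # dependencies, the run uses the workstation's existing Python setup.
    env = Environment("sklearn-local-env")
    env.python.user_managed_dependencies = True

    config = ScriptRunConfig(
        source_directory=SOURCE_DIRECTORY,
        script=SCRIPT,
        environment=env,
    )
    # No compute_target is given, so the run executes locally.
    experiment = Experiment(workspace=workspace, name=experiment_name)
    run = experiment.submit(config=config)
    run.wait_for_completion(show_output=True)
    return run
```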

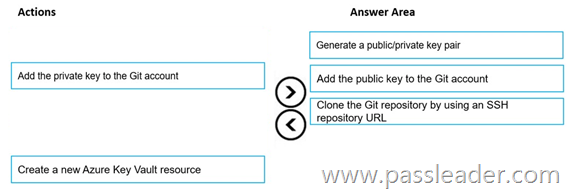

NEW QUESTION 311

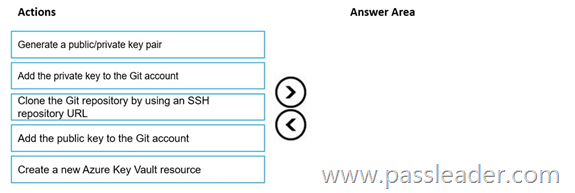

Drag and Drop

You are using a Git repository to track work in an Azure Machine Learning workspace. You need to authenticate a Git account by using SSH. Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Explanation:

https://docs.microsoft.com/en-us/azure/machine-learning/concept-train-model-git-integration

NEW QUESTION 312

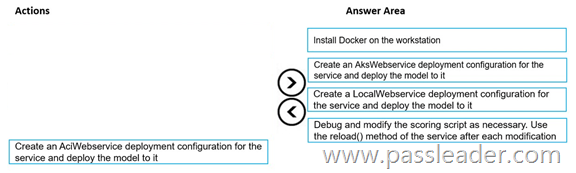

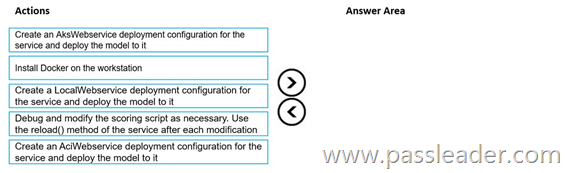

Drag and Drop

You train and register a model by using the Azure Machine Learning SDK on a local workstation. Python 3.6 and Visual Studio Code are installed on the workstation. When you try to deploy the model into production as an Azure Kubernetes Service (AKS)-based web service, you experience an error in the scoring script that causes deployment to fail. You need to debug the service on the local workstation before deploying the service to production. Which four actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Explanation:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-deploy-azure-kubernetes-service

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-troubleshoot-deployment-local

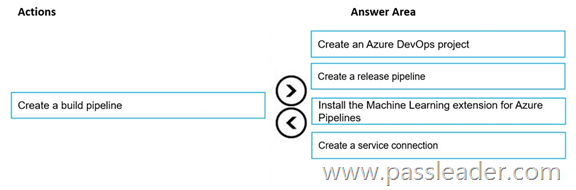

NEW QUESTION 313

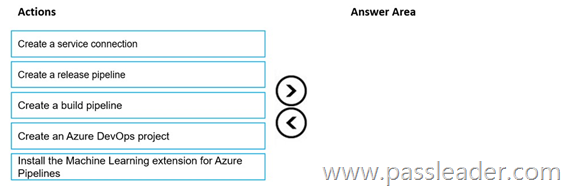

Drag and Drop

You create an Azure Machine Learning workspace and a new Azure DevOps organization. You register a model in the workspace and deploy the model to the target environment. All new versions of the model registered in the workspace must automatically be deployed to the target environment. You need to configure Azure Pipelines to deploy the model. Which four actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Explanation:

https://marketplace.visualstudio.com/items?itemName=ms-air-aiagility.vss-services-azureml

https://docs.microsoft.com/en-us/azure/devops/pipelines/targets/azure-machine-learning

NEW QUESTION 314

……
