Valid DP-300 dumps shared by PassLeader to help you pass the DP-300 exam. PassLeader now offers the newest DP-300 VCE dumps and DP-300 PDF dumps. The PassLeader DP-300 exam questions have been updated and the answers have been corrected. Get the newest PassLeader DP-300 dumps with VCE and PDF here: https://www.passleader.com/dp-300.html (147 Q&As Dumps –> 183 Q&As Dumps –> 204 Q&As Dumps –> 329 Q&As Dumps –> 374 Q&As Dumps)

BTW, DOWNLOAD part of PassLeader DP-300 dumps from Cloud Storage: https://drive.google.com/drive/folders/16H2cctBz9qiCdjfy4xFz728Vi_0nuYBl

NEW QUESTION 127

You have an Azure Data Lake Storage account that contains a staging zone. You need to design a daily process to ingest incremental data from the staging zone, transform the data by executing an R script, and then insert the transformed data into a data warehouse in Azure Synapse Analytics.

Solution: You use an Azure Data Factory schedule trigger to execute a pipeline that executes mapping data flow, and then inserts the data into the data warehouse.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

If you need to transform data in a way that is not supported by Data Factory, you can create a custom activity, not a mapping data flow, with your own data processing logic and use the activity in the pipeline. You can create a custom activity to run R scripts on your HDInsight cluster with R installed.

https://docs.microsoft.com/en-US/azure/data-factory/transform-data

NEW QUESTION 128

You have an Azure Data Lake Storage account that contains a staging zone. You need to design a daily process to ingest incremental data from the staging zone, transform the data by executing an R script, and then insert the transformed data into a data warehouse in Azure Synapse Analytics.

Solution: You use an Azure Data Factory schedule trigger to execute a pipeline that executes an Azure Databricks notebook, and then inserts the data into the data warehouse.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

If you need to transform data in a way that is not supported by Data Factory, you can create a custom activity, not an Azure Databricks notebook, with your own data processing logic and use the activity in the pipeline. You can create a custom activity to run R scripts on your HDInsight cluster with R installed.

https://docs.microsoft.com/en-US/azure/data-factory/transform-data

NEW QUESTION 129

A company plans to use Apache Spark analytics to analyze intrusion detection data. You need to recommend a solution to analyze network and system activity data for malicious activities and policy violations. The solution must minimize administrative efforts. What should you recommend?

A. Azure Data Lake Storage

B. Azure Databricks

C. Azure HDInsight

D. Azure Data Factory

Answer: C

Explanation:

Azure HDInsight offers pre-made monitoring dashboards, in the form of solutions, that can be used to monitor the workloads running on your clusters. Solutions for Apache Spark, Hadoop, Apache Kafka, Live Long and Process (LLAP), Apache HBase, and Apache Storm are available in the Azure Marketplace. Note: With Azure HDInsight you can set up Azure Monitor alerts that trigger when the value of a metric or the results of a query meet certain conditions. You can condition on a query returning a record with a value that is greater than or less than a certain threshold, or even on the number of results returned by a query. For example, you could create an alert to send an email if a Spark job fails or if Kafka disk usage exceeds 90 percent.

https://azure.microsoft.com/en-us/blog/monitoring-on-azure-hdinsight-part-4-workload-metrics-and-logs/

NEW QUESTION 130

You have an Azure data solution that contains an enterprise data warehouse in Azure Synapse Analytics named DW1. Several users execute ad hoc queries against DW1 concurrently. You regularly perform automated data loads to DW1. You need to ensure that the automated data loads have enough memory available to complete quickly and successfully when the ad hoc queries run. What should you do?

A. Assign a smaller resource class to the automated data load queries.

B. Create sampled statistics for every column in each table of DW1.

C. Assign a larger resource class to the automated data load queries.

D. Hash distribute the large fact tables in DW1 before performing the automated data loads.

Answer: C

Explanation:

The performance capacity of a query is determined by the user’s resource class. Smaller resource classes reduce the maximum memory per query, but increase concurrency. Larger resource classes increase the maximum memory per query, but reduce concurrency.

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/resource-classes-for-workload-management
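
The memory/concurrency trade-off described above can be sketched with a toy model. This is only an illustration: it assumes each query reserves a fixed share of the pool's memory, and the function name and the 5% and 25% shares are hypothetical, not the actual allocations of any Synapse resource class.

```python
def max_concurrent_queries(memory_percent_per_query: float) -> int:
    """Return how many queries fit at once if each one reserves
    the given percentage of total memory (simplified toy model)."""
    if not 0 < memory_percent_per_query <= 100:
        raise ValueError("memory share must be in the range (0, 100]")
    return int(100 // memory_percent_per_query)


# Hypothetical shares, chosen only to show the trade-off.
small_share = 5    # smaller class: less memory per query
large_share = 25   # larger class: more memory per query

print("small class concurrency:", max_concurrent_queries(small_share))
print("large class concurrency:", max_concurrent_queries(large_share))
```

In this model the larger share gives each load query five times the memory but leaves room for far fewer simultaneous queries, which is the trade-off the explanation describes.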

NEW QUESTION 131

You are monitoring an Azure Stream Analytics job. You discover that the Backlogged Input Events metric is increasing slowly and is consistently non-zero. You need to ensure that the job can handle all the events. What should you do?

A. Remove any named consumer groups from the connection and use default.

B. Change the compatibility level of the Stream Analytics job.

C. Create an additional output stream for the existing input stream.

D. Increase the number of streaming units (SUs).

Answer: D

Explanation:

Backlogged Input Events: Number of input events that are backlogged. A non-zero value for this metric implies that your job isn’t able to keep up with the number of incoming events. If this value is slowly increasing or consistently non-zero, you should scale out your job, by increasing the SUs.

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-monitoring
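
The rule in the explanation (scale out when the backlog is consistently non-zero or slowly increasing) can be sketched as a small check over metric samples. The function name and the sample values are hypothetical; in practice the samples would come from whatever monitoring export you use.

```python
from typing import Sequence


def should_scale_out(backlog_samples: Sequence[int]) -> bool:
    """Return True when the Backlogged Input Events samples are either
    consistently non-zero or non-decreasing with a net increase."""
    if not backlog_samples:
        return False
    consistently_non_zero = all(s > 0 for s in backlog_samples)
    pairs = zip(backlog_samples, backlog_samples[1:])
    rising = (
        len(backlog_samples) >= 2
        and all(later >= earlier for earlier, later in pairs)
        and backlog_samples[-1] > backlog_samples[0]
    )
    return consistently_non_zero or rising


# Hypothetical samples taken at regular intervals.
print(should_scale_out([3, 5, 8, 12]))  # backlog keeps growing
print(should_scale_out([0, 4, 0, 0]))   # one-off spike that drained
```

A single spike that drains back to zero does not trigger the check, which matches the intent of only adding SUs when the job persistently falls behind.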

NEW QUESTION 132

You have an Azure Stream Analytics job. You need to ensure that the job has enough streaming units provisioned. You configure monitoring of the SU % Utilization metric. Which two additional metrics should you monitor? (Each correct answer presents part of the solution. Choose two.)

A. Late Input Events

B. Out of Order Events

C. Backlogged Input Events

D. Watermark Delay

E. Function Events

Answer: CD

Explanation:

To react to increased workloads and increase streaming units, consider setting an alert of 80% on the SU Utilization metric. Also, you can use watermark delay and backlogged events metrics to see if there is an impact. Note: Backlogged Input Events: Number of input events that are backlogged. A non-zero value for this metric implies that your job isn’t able to keep up with the number of incoming events. If this value is slowly increasing or consistently non-zero, you should scale out your job, by increasing the SUs.

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-monitoring

NEW QUESTION 133

You have an Azure Databricks resource. You need to log actions that relate to changes in compute for the Databricks resource. Which Databricks service should you log?

A. clusters

B. jobs

C. DBFS

D. SSH

E. workspace

Answer: A

Explanation:

Databricks logging allows security and admin teams to demonstrate conformance to data governance standards within or from a Databricks workspace. Customers, especially in the regulated industries, also need records on activities like:

– User access control to cloud data storage.

– Cloud Identity and Access Management roles.

– User access to cloud network and compute.

Azure Databricks offers three distinct workloads on several VM Instances tailored for your data analytics workflow: the Jobs Compute and Jobs Light Compute workloads make it easy for data engineers to build and execute jobs, and the All-Purpose Compute workload makes it easy for data scientists to explore, visualize, manipulate, and share data and insights interactively.

https://databricks.com/blog/2020/03/25/trust-but-verify-with-databricks.html

NEW QUESTION 134

Your company uses Azure Stream Analytics to monitor devices. The company plans to double the number of devices that are monitored. You need to monitor a Stream Analytics job to ensure that there are enough processing resources to handle the additional load. Which metric should you monitor?

A. Input Deserialization Errors

B. Late Input Events

C. Early Input Events

D. Watermark Delay

Answer: D

Explanation:

The Watermark delay metric is computed as the wall clock time of the processing node minus the largest watermark it has seen so far. The watermark delay metric can rise due to:

1. Not enough processing resources in Stream Analytics to handle the volume of input events.

2. Not enough throughput within the input event brokers, so they are throttled.

3. Output sinks are not provisioned with enough capacity, so they are throttled.

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-time-handling
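
The watermark delay definition above (wall clock time minus the largest watermark seen so far) is simple arithmetic. Here is a minimal sketch; the function name and timestamps are made up for illustration.

```python
from datetime import datetime, timedelta
from typing import Iterable


def watermark_delay(wall_clock: datetime,
                    watermarks_seen: Iterable[datetime]) -> timedelta:
    """Wall clock time of the processing node minus the largest
    watermark it has seen so far."""
    return wall_clock - max(watermarks_seen)


# Hypothetical values: the node's clock and three observed watermarks.
now = datetime(2021, 6, 1, 12, 0, 30)
seen = [
    datetime(2021, 6, 1, 12, 0, 5),
    datetime(2021, 6, 1, 12, 0, 18),
    datetime(2021, 6, 1, 12, 0, 12),
]
print(watermark_delay(now, seen).total_seconds(), "seconds behind")
```

A delay that keeps growing after the device count doubles would suggest one of the three causes listed above, starting with insufficient processing resources.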

NEW QUESTION 135

You manage an enterprise data warehouse in Azure Synapse Analytics. Users report slow performance when they run commonly used queries. Users do not report performance changes for infrequently used queries. You need to monitor resource utilization to determine the source of the performance issues. Which metric should you monitor?

A. Local tempdb percentage.

B. DWU percentage.

C. Data Warehouse Units (DWU) used.

D. Cache hit percentage.

Answer: A

Explanation:

Tempdb is used to hold intermediate results during query execution. High utilization of the tempdb database can lead to slow query performance. Note: If you have a query that is consuming a large amount of memory or have received an error message related to allocation of tempdb, it could be due to a very large CREATE TABLE AS SELECT (CTAS) or INSERT SELECT statement running that is failing in the final data movement operation.

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-manage-monitor#monitor-tempdb

NEW QUESTION 136

You have an Azure Synapse Analytics dedicated SQL pool named Pool1 and a database named DB1. DB1 contains a fact table named Table1. You need to identify the extent of the data skew in Table1. What should you do in Synapse Studio?

A. Connect to Pool1 and query sys.dm_pdw_nodes_db_partition_stats.

B. Connect to the built-in pool and run DBCC CHECKALLOC.

C. Connect to Pool1 and run DBCC CHECKALLOC.

D. Connect to the built-in pool and query sys.dm_pdw_nodes_db_partition_stats.

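Answer: A

Explanation:

Use sys.dm_pdw_nodes_db_partition_stats to analyze any skewness in the data. This dynamic management view belongs to the dedicated SQL pool, so you query it while connected to Pool1 rather than to the built-in serverless pool.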

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/cheat-sheet
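
Once you have row counts per distribution (for example, aggregated from sys.dm_pdw_nodes_db_partition_stats), the extent of the skew is a matter of comparing the largest distribution with the average. The sketch below is one possible measure, not an official formula; the function name and the row counts are hypothetical.

```python
from statistics import mean
from typing import Sequence


def skew_percent(rows_per_distribution: Sequence[int]) -> float:
    """Return how far the largest distribution exceeds the average
    row count, as a percentage of the average (0.0 means no skew)."""
    if not rows_per_distribution:
        return 0.0
    average = mean(rows_per_distribution)
    if average == 0:
        return 0.0
    return (max(rows_per_distribution) - average) / average * 100


# Hypothetical row counts for four distributions of one table.
balanced = [100, 100, 100, 100]
skewed = [100, 100, 100, 300]
print("balanced:", skew_percent(balanced))
print("skewed:", skew_percent(skewed))
```

A large value means one distribution holds much more data than the others, so queries wait on that distribution while the rest sit idle.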

NEW QUESTION 137

You are designing a dimension table in an Azure Synapse Analytics dedicated SQL pool. You need to create a surrogate key for the table. The solution must provide the fastest query performance. What should you use for the surrogate key?

A. an IDENTITY column

B. a GUID column

C. a sequence object

Answer: A

Explanation:

Dedicated SQL pool supports many, but not all, of the table features offered by other databases. There is no dedicated surrogate key feature; implement the surrogate key with an IDENTITY column.

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-overview

NEW QUESTION 138

You are designing a star schema for a dataset that contains records of online orders. Each record includes an order date, an order due date, and an order ship date. You need to ensure that the design provides the fastest query times of the records when querying for arbitrary date ranges and aggregating by fiscal calendar attributes. Which two actions should you perform? (Each correct answer presents part of the solution. Choose two.)

A. Create a date dimension table that has a DateTime key.

B. Create a date dimension table that has an integer key in the format of YYYYMMDD.

C. Use built-in SQL functions to extract date attributes.

D. Use integer columns for the date fields.

E. Use DateTime columns for the date fields.

Answer: BD

Explanation:

https://community.idera.com/database-tools/blog/b/community_blog/posts/why-use-a-date-dimension-table-in-a-data-warehouse
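
The integer key format from option B packs a date into a single number, so the order, due, and ship date columns can each join to the same date dimension on an integer. A minimal sketch of the conversion, with hypothetical helper names:

```python
from datetime import date


def date_key(d: date) -> int:
    """Encode a date as an integer in YYYYMMDD form."""
    return d.year * 10000 + d.month * 100 + d.day


def from_date_key(key: int) -> date:
    """Decode a YYYYMMDD integer back into a date."""
    return date(key // 10000, key // 100 % 100, key % 100)


order_date = date(2021, 3, 7)
key = date_key(order_date)
print(key)
print(from_date_key(key))
```

Because the digits are ordered year, month, day, integer comparison on the key matches chronological order, so range filters work directly on the key column.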

NEW QUESTION 139

You need to trigger an Azure Data Factory pipeline when a file arrives in an Azure Data Lake Storage Gen2 container. Which resource provider should you enable?

A. Microsoft.EventHub

B. Microsoft.EventGrid

C. Microsoft.Sql

D. Microsoft.Automation

Answer: B

Explanation:

Event-driven architecture (EDA) is a common data integration pattern that involves production, detection, consumption, and reaction to events. Data integration scenarios often require Data Factory customers to trigger pipelines based on events happening in a storage account, such as the arrival or deletion of a file in an Azure Blob Storage account. Data Factory natively integrates with Azure Event Grid, which lets you trigger pipelines on such events.

https://docs.microsoft.com/en-us/azure/data-factory/how-to-create-event-trigger

NEW QUESTION 140

You have the following Azure Data Factory pipelines:

– Ingest Data from System1

– Ingest Data from System2

– Populate Dimensions

– Populate Facts

Ingest Data from System1 and Ingest Data from System2 have no dependencies. Populate Dimensions must execute after Ingest Data from System1 and Ingest Data from System2. Populate Facts must execute after the Populate Dimensions pipeline. All the pipelines must execute every eight hours. What should you do to schedule the pipelines for execution?

A. Add a schedule trigger to all four pipelines.

B. Add an event trigger to all four pipelines.

C. Create a parent pipeline that contains the four pipelines and use an event trigger.

D. Create a parent pipeline that contains the four pipelines and use a schedule trigger.

Answer: D

Explanation:

https://www.mssqltips.com/sqlservertip/6137/azure-data-factory-control-flow-activities-overview/
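
The ordering a parent pipeline has to enforce can be sketched as a dependency graph. This example is not Data Factory code; it only uses Python's standard `graphlib` module (Python 3.9+) to show which of the four pipelines can run together and which must wait. The function name is hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each pipeline maps to the set of pipelines it must wait for.
dependencies: Dict[str, Set[str]] = {
    "Populate Dimensions": {
        "Ingest Data from System1",
        "Ingest Data from System2",
    },
    "Populate Facts": {"Populate Dimensions"},
}


def execution_stages(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group pipelines into stages; everything in a stage can run in
    parallel once all earlier stages have finished."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages


for number, stage in enumerate(execution_stages(dependencies), start=1):
    print(f"stage {number}: {stage}")
```

A single schedule trigger on the parent pipeline then starts the whole sequence every eight hours, while the dependencies inside it keep the order.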

NEW QUESTION 141

You have an Azure Data Factory pipeline that performs an incremental load of source data to an Azure Data Lake Storage Gen2 account. Data to be loaded is identified by a column named LastUpdatedDate in the source table. You plan to execute the pipeline every four hours. You need to ensure that the pipeline execution meets the following requirements:

– Automatically retries the execution when the pipeline run fails due to concurrency or throttling limits.

– Supports backfilling existing data in the table.

Which type of trigger should you use?

A. tumbling window

B. on-demand

C. event

D. schedule

Answer: A

Explanation:

The Tumbling window trigger supports backfill scenarios. Pipeline runs can be scheduled for windows in the past.

Incorrect:

Not D: Schedule trigger does not support backfill scenarios. Pipeline runs can be executed only on time periods from the current time and the future.

https://docs.microsoft.com/en-us/azure/data-factory/concepts-pipeline-execution-triggers
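
Backfilling works because tumbling windows are fixed-size, contiguous, and non-overlapping, so windows that lie in the past are as well defined as future ones. The sketch below only illustrates that slicing; it is not the Data Factory API, and the function name and dates are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Window = Tuple[datetime, datetime]


def tumbling_windows(start: datetime, end: datetime,
                     size: timedelta) -> List[Window]:
    """Split [start, end) into contiguous, non-overlapping windows of
    the given size; a trailing partial window is not emitted."""
    windows = []
    window_start = start
    while window_start + size <= end:
        windows.append((window_start, window_start + size))
        window_start += size
    return windows


# Hypothetical backfill of one past day in four-hour windows.
for begin, finish in tumbling_windows(datetime(2021, 1, 1),
                                      datetime(2021, 1, 2),
                                      timedelta(hours=4)):
    print(begin.isoformat(), "to", finish.isoformat())
```

Each window's start and end could serve as the lower and upper bounds on LastUpdatedDate for that run, so every row is loaded exactly once.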

NEW QUESTION 142

You have an Azure Data Factory that contains 10 pipelines. You need to label each pipeline with its main purpose of either ingest, transform, or load. The labels must be available for grouping and filtering when using the monitoring experience in Data Factory. What should you add to each pipeline?

A. an annotation

B. a resource tag

C. a run group ID

D. a user property

E. a correlation ID

Answer: A

Explanation:

Azure Data Factory annotations help you easily filter different Azure Data Factory objects based on a tag. You can define tags so you can see their performance or find errors faster.

https://www.techtalkcorner.com/monitor-azure-data-factory-annotations/

NEW QUESTION 143

You plan to perform batch processing in Azure Databricks once daily. Which type of Databricks cluster should you use?

A. automated

B. interactive

C. High Concurrency

Answer: A

Explanation:

Azure Databricks makes a distinction between all-purpose clusters and job clusters. You use all-purpose clusters to analyze data collaboratively using interactive notebooks. You use job clusters to run fast and robust automated jobs. The Azure Databricks job scheduler creates a job cluster when you run a job on a new job cluster and terminates the cluster when the job is complete.

https://docs.microsoft.com/en-us/azure/databricks/clusters

NEW QUESTION 144

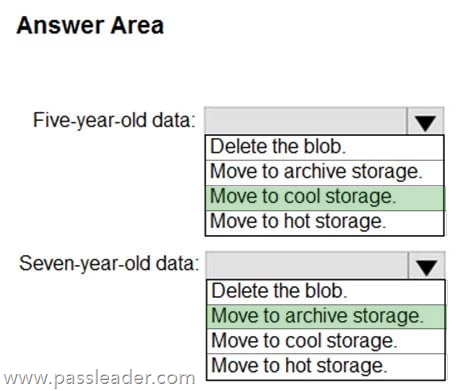

HotSpot

You have an Azure Data Lake Storage Gen2 container. Data is ingested into the container, and then transformed by a data integration application. The data is NOT modified after that. Users can read files in the container but cannot modify the files. You need to design a data archiving solution that meets the following requirements:

– New data is accessed frequently and must be available as quickly as possible.

– Data that is older than five years is accessed infrequently but must be available within one second when requested.

– Data that is older than seven years is NOT accessed. After seven years, the data must be persisted at the lowest cost possible.

– Costs must be minimized while maintaining the required availability.

How should you manage the data? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

Box 1: Move to cool storage. The cool access tier has lower storage costs and higher access costs compared to hot storage. This tier is intended for data that will remain in the cool tier for at least 30 days. Example usage scenarios for the cool access tier include:

– Short-term backup and disaster recovery.

– Older data not used frequently but expected to be available immediately when accessed.

– Large data sets that need to be stored cost effectively, while more data is being gathered for future processing.

Note:

– Hot – Optimized for storing data that is accessed frequently.

– Cool – Optimized for storing data that is infrequently accessed and stored for at least 30 days.

– Archive – Optimized for storing data that is rarely accessed and stored for at least 180 days with flexible latency requirements, on the order of hours.

Box 2: Move to archive storage. Example usage scenarios for the archive access tier include:

– Long-term backup, secondary backup, and archival datasets.

– Original (raw) data that must be preserved, even after it has been processed into final usable form.

– Compliance and archival data that needs to be stored for a long time and is hardly ever accessed.

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-storage-tiers
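
The tiering decision from the two boxes reduces to a rule on data age. The sketch below encodes the thresholds from this question (five and seven years); the function name is hypothetical, and a real deployment would express the same rule in a storage lifecycle management policy rather than in application code.

```python
def access_tier(age_years: float) -> str:
    """Pick a blob access tier from the data's age, using the
    thresholds stated in this question."""
    if age_years < 0:
        raise ValueError("age cannot be negative")
    if age_years > 7:
        return "Archive"  # never accessed, lowest storage cost
    if age_years > 5:
        return "Cool"     # infrequent access, still available immediately
    return "Hot"          # new data, accessed frequently


for age in (0.5, 6, 10):
    print(age, "years ->", access_tier(age))
```

Cool rather than archive is used between five and seven years because archived data takes hours to rehydrate, which would break the one-second availability requirement.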

NEW QUESTION 145

Drag and Drop

Your company analyzes images from security cameras and sends alerts to security teams that respond to unusual activity. The solution uses Azure Databricks. You need to send Apache Spark level events, Spark Structured Streaming metrics, and application metrics to Azure Monitor. Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions in the answer area and arrange them in the correct order.)

Answer:

Explanation:

Send application metrics using Dropwizard. Spark uses a configurable metrics system based on the Dropwizard Metrics Library. To send application metrics from Azure Databricks application code to Azure Monitor, follow these steps:

– Step 1: Configure your Azure Databricks cluster to use the Databricks monitoring library.

– Step 2: Build the spark-listeners-loganalytics-1.0-SNAPSHOT.jar JAR file.

– Step 3: Create Dropwizard gauges or counters in your application code.

https://docs.microsoft.com/en-us/azure/architecture/databricks-monitoring/application-logs

NEW QUESTION 146

……
