Valid DP-203 Dumps shared by PassLeader for Helping Passing DP-203 Exam! PassLeader now offer the newest DP-203 VCE dumps and DP-203 PDF dumps, the PassLeader DP-203 exam questions have been updated and ANSWERS have been corrected, get the newest PassLeader DP-203 dumps with VCE and PDF here: https://www.passleader.com/dp-203.html (100 Q&As Dumps –> 122 Q&As Dumps –> 155 Q&As Dumps –> 181 Q&As Dumps –> 222 Q&As Dumps –> 246 Q&As Dumps –> 397 Q&As Dumps –> 428 Q&As Dumps)

BTW, DOWNLOAD part of PassLeader DP-203 dumps from Cloud Storage: https://drive.google.com/drive/folders/1wVv0mD76twXncB9uqhbqcNPWhkOeJY0s

NEW QUESTION 81

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1. You have files that are ingested and loaded into an Azure Data Lake Storage Gen2 container named container1. You plan to insert data from the files into Table1 and transform the data. Each row of data in the files will produce one row in the serving layer of Table1. You need to ensure that when the source data files are loaded to container1, the DateTime is stored as an additional column in Table1.

Solution: In an Azure Synapse Analytics pipeline, you use a data flow that contains a Derived Column transformation.

Does this meet the goal?

A. Yes

B. No

Answer: A

NEW QUESTION 82

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1. You have files that are ingested and loaded into an Azure Data Lake Storage Gen2 container named container1. You plan to insert data from the files into Table1 and transform the data. Each row of data in the files will produce one row in the serving layer of Table1. You need to ensure that when the source data files are loaded to container1, the DateTime is stored as an additional column in Table1.

Solution: In an Azure Synapse Analytics pipeline, you use a Get Metadata activity that retrieves the DateTime of the files.

Does this meet the goal?

A. Yes

B. No

Answer: B

NEW QUESTION 83

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Contacts. Contacts contains a column named Phone. You need to ensure that users in a specific role only see the last four digits of a phone number when querying the Phone column. What should you include in the solution?

A. a default value

B. dynamic data masking

C. row-level security (RLS)

D. column encryption

E. table partitions

Answer: B
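The masking behavior this question asks for (only the last four digits of the phone number stay visible) can be illustrated with a small Python sketch. This is only an illustration of the visible result, not how the dedicated SQL pool implements masking; the function name `mask_phone` and its parameters are hypothetical.

```python
def mask_phone(phone: str, visible: int = 4, pad: str = "X") -> str:
    """Replace every digit except the last `visible` digits with `pad`.

    Non-digit characters such as dashes are left untouched.
    """
    total_digits = sum(ch.isdigit() for ch in phone)
    to_mask = max(total_digits - visible, 0)  # number of leading digits to hide
    out = []
    for ch in phone:
        if ch.isdigit() and to_mask > 0:
            out.append(pad)
            to_mask -= 1
        else:
            out.append(ch)
    return "".join(out)


print(mask_phone("555-123-4567"))  # XXX-XXX-4567
```

In the database the rule is attached to the column and applied at query time, so the stored value is unchanged and privileged users still see the full number.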

NEW QUESTION 84

You develop data engineering solutions for a company. A project requires the deployment of data to Azure Data Lake Storage. You need to implement role-based access control (RBAC) so that project members can manage the Azure Data Lake Storage resources. Which three actions should you perform? (Each correct answer presents part of the solution. Choose three.)

A. Assign Azure AD security groups to Azure Data Lake Storage.

B. Configure end-user authentication for the Azure Data Lake Storage account.

C. Configure service-to-service authentication for the Azure Data Lake Storage account.

D. Create security groups in Azure Active Directory (Azure AD) and add project members.

E. Configure access control lists (ACL) for the Azure Data Lake Storage account.

Answer: ADE

Explanation:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-secure-data

NEW QUESTION 85

You are designing an Azure Synapse Analytics dedicated SQL pool. You need to ensure that you can audit access to personally identifiable information (PII). What should you include in the solution?

A. dynamic data masking

B. row-level security (RLS)

C. sensitivity classifications

D. column-level security

Answer: C

NEW QUESTION 86

You have an Azure Data Lake Storage account that has a virtual network service endpoint configured. You plan to use Azure Data Factory to extract data from the Data Lake Storage account. The data will then be loaded to a data warehouse in Azure Synapse Analytics by using PolyBase. Which authentication method should you use to access Data Lake Storage?

A. shared access key authentication

B. managed identity authentication

C. account key authentication

D. service principal authentication

Answer: B

Explanation:

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-sql-data-warehouse#use-polybase-to-load-data-into-azure-sql-data-warehouse

NEW QUESTION 87

What should you recommend to prevent users outside the Litware on-premises network from accessing the analytical data store?

A. a server-level virtual network rule

B. a database-level virtual network rule

C. a database-level firewall IP rule

D. a server-level firewall IP rule

Answer: A

Explanation:

Virtual network rules are one firewall security feature that controls whether the database server for your single databases and elastic pool in Azure SQL Database or for your databases in SQL Data Warehouse accepts communications that are sent from particular subnets in virtual networks. Server-level, not database-level: Each virtual network rule applies to your whole Azure SQL Database server, not just to one particular database on the server. In other words, a virtual network rule applies at the server level, not at the database level.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-vnet-service-endpoint-rule-overview

NEW QUESTION 88

What should you recommend using to secure sensitive customer contact information?

A. data labels

B. column-level security

C. row-level security

D. Transparent Data Encryption (TDE)

Answer: B

Explanation:

Always Encrypted is a feature designed to protect sensitive data stored in specific database columns from access (for example, credit card numbers, national identification numbers, or data on a need to know basis). This includes database administrators or other privileged users who are authorized to access the database to perform management tasks, but have no business need to access the particular data in the encrypted columns. The data is always encrypted, which means the encrypted data is decrypted only for processing by client applications with access to the encryption key.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-security-overview

NEW QUESTION 89

You are designing a sales transactions table in an Azure Synapse Analytics dedicated SQL pool. The table will contain approximately 60 million rows per month and will be partitioned by month. The table will use a clustered columnstore index and round-robin distribution. Approximately how many rows will there be for each combination of distribution and partition?

A. 1 million

B. 5 million

C. 20 million

D. 60 million

Answer: A

Explanation:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-partition
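The arithmetic behind this question can be sketched in a few lines of Python. A dedicated SQL pool spreads every table across 60 distributions, and round-robin distribution spreads rows evenly, so each monthly partition is divided by 60. The function name below is hypothetical and used only for illustration.

```python
DISTRIBUTIONS = 60  # a dedicated SQL pool always uses 60 distributions


def rows_per_distribution_partition(rows_per_partition: int,
                                    distributions: int = DISTRIBUTIONS) -> int:
    """Approximate rows in one (distribution, partition) combination,
    assuming rows are spread evenly, as with round-robin distribution."""
    return rows_per_partition // distributions


# One monthly partition holds about 60 million rows.
print(rows_per_distribution_partition(60_000_000))  # 1000000
```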

NEW QUESTION 90

You are designing a dimension table for a data warehouse. The table will track the value of the dimension attributes over time and preserve the history of the data by adding new rows as the data changes. Which type of slowly changing dimension (SCD) should you use?

A. Type 0

B. Type 1

C. Type 2

D. Type 3

Answer: C

Explanation:

Type 2 – Creating a new additional record. In this methodology all history of dimension changes is kept in the database. You capture an attribute change by adding a new row with a new surrogate key to the dimension table. Both the prior and new rows contain as attributes the natural key (or other durable identifier). Also ‘effective date’ and ‘current indicator’ columns are used in this method. There can be only one record with the current indicator set to ‘Y’. For the ‘effective date’ columns, i.e. start_date and end_date, the end_date for the current record is usually set to the value 9999-12-31. Introducing changes to the dimensional model in Type 2 can be a very expensive database operation, so it is not recommended for dimensions where a new attribute could be added in the future.

https://www.datawarehouse4u.info/SCD-Slowly-Changing-Dimensions.html
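The Type 2 mechanics described in the explanation can be sketched in Python as follows. This is a minimal in-memory illustration, not a warehouse implementation; the `DimRow` class, the `city` attribute, the `apply_change` function, and the sample keys are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import List

OPEN_END = date(9999, 12, 31)  # end_date used for the current record


@dataclass(frozen=True)
class DimRow:
    surrogate_key: int
    natural_key: str
    city: str            # hypothetical tracked attribute
    start_date: date
    end_date: date
    is_current: str      # 'Y' or 'N'


def apply_change(rows: List[DimRow], natural_key: str,
                 new_city: str, change_date: date) -> List[DimRow]:
    """Expire the current row for `natural_key` and append a new current row.

    If there is no current row for the key, or the attribute is unchanged,
    the input rows are returned unmodified.
    """
    result = []
    changed = False
    for row in rows:
        if (row.natural_key == natural_key and row.is_current == "Y"
                and row.city != new_city):
            # Close the old version instead of overwriting it.
            result.append(replace(row, end_date=change_date, is_current="N"))
            changed = True
        else:
            result.append(row)
    if changed:
        next_key = max(r.surrogate_key for r in rows) + 1  # new surrogate key
        result.append(DimRow(next_key, natural_key, new_city,
                             change_date, OPEN_END, "Y"))
    return result


dim = [DimRow(1, "C-100", "Seattle", date(2020, 1, 1), OPEN_END, "Y")]
dim = apply_change(dim, "C-100", "Portland", date(2021, 6, 1))
for r in dim:
    print(r)
```

After the call, the dimension holds two rows for the same natural key: the expired Seattle row and a new current Portland row with surrogate key 2.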

NEW QUESTION 91

You are designing an inventory updates table in an Azure Synapse Analytics dedicated SQL pool. The table will have a clustered columnstore index and will include the following columns:

– EventDate: 1 million per day.

– EventTypeID: 10 million per event type.

– WarehouseID: 100 million per warehouse.

– ProductCategoryTypeID: 25 million per product category type.

You identify the following usage patterns:

– Analysts will most commonly analyze transactions for a warehouse.

– Queries will summarize by product category type, date, and/or inventory event type.

You need to recommend a partition strategy for the table to minimize query times.

On which column should you recommend partitioning the table?

A. ProductCategoryTypeID

B. EventDate

C. WarehouseID

D. EventTypeID

Answer: C
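One way to compare the candidate columns is to check each against Microsoft's guidance that a clustered columnstore table needs at least 1 million rows per distribution and partition. The sketch below assumes the row counts in the question are the rows that would land in one partition if the table were partitioned on that column; the variable and function names are hypothetical.

```python
DISTRIBUTIONS = 60        # a dedicated SQL pool always uses 60 distributions
MIN_ROWS = 1_000_000      # guideline: rows per distribution and partition

# Rows per distinct value of each candidate column, as listed in the question.
rows_per_value = {
    "EventDate": 1_000_000,
    "EventTypeID": 10_000_000,
    "WarehouseID": 100_000_000,
    "ProductCategoryTypeID": 25_000_000,
}


def rows_per_distribution(rows_in_partition: int) -> int:
    """Rows one distribution holds for a single partition, assuming an even spread."""
    return rows_in_partition // DISTRIBUTIONS


for column, rows in rows_per_value.items():
    per_dist = rows_per_distribution(rows)
    verdict = "meets" if per_dist >= MIN_ROWS else "below"
    print(f"{column}: {per_dist:,} rows per distribution ({verdict} guideline)")

# Columns whose partitions stay at or above the guideline.
candidates = [c for c, r in rows_per_value.items()
              if rows_per_distribution(r) >= MIN_ROWS]
print(candidates)
```

Partitioning on a column that most queries filter on also lets the engine skip partitions, which is why the stated usage pattern matters alongside the row counts.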

NEW QUESTION 92

You plan to implement an Azure Data Lake Gen2 storage account. You need to ensure that the data lake will remain available if a data center fails in the primary Azure region. The solution must minimize costs. Which type of replication should you use for the storage account?

A. geo-redundant storage (GRS)

B. zone-redundant storage (ZRS)

C. locally-redundant storage (LRS)

D. geo-zone-redundant storage (GZRS)

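Answer: B

Explanation:

Zone-redundant storage (ZRS) copies your data synchronously across three Azure availability zones in the primary region, so the data remains available if a single data center fails, and it costs less than the geo-redundant options. By comparison, geo-redundant storage (GRS) copies your data synchronously three times within a single physical location in the primary region using LRS. It then copies your data asynchronously to a single physical location in the secondary region.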

https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy

NEW QUESTION 93

You plan to ingest streaming social media data by using Azure Stream Analytics. The data will be stored in files in Azure Data Lake Storage, and then consumed by using Azure Databricks and PolyBase in Azure Synapse Analytics. You need to recommend a Stream Analytics data output format to ensure that the queries from Databricks and PolyBase against the files encounter the fewest possible errors. The solution must ensure that the files can be queried quickly and that the data type information is retained. What should you recommend?

A. Parquet

B. Avro

C. CSV

D. JSON

Answer: A

Explanation:

Parquet is a columnar format that stores the schema with the data, so the files can be queried quickly and the data type information is retained, and it can be read by both Azure Databricks and PolyBase. The Avro format is great for data and message preservation. Avro schema with its support for evolution is essential for making the data robust for streaming architectures like Kafka, and with the metadata that schema provides, you can reason on the data. However, PolyBase does not support Avro files.

http://cloudurable.com/blog/avro/index.html

NEW QUESTION 94

You have an Azure Data Lake Storage Gen2 container that contains 100 TB of data. You need to ensure that the data in the container is available for read workloads in a secondary region if an outage occurs in the primary region. The solution must minimize costs. Which type of data redundancy should you use?

A. zone-redundant storage (ZRS)

B. read-access geo-redundant storage (RA-GRS)

C. locally-redundant storage (LRS)

D. geo-redundant storage (GRS)

Answer: B

NEW QUESTION 95

You have an Azure Synapse Analytics dedicated SQL pool named Pool1. Pool1 contains a partitioned fact table named dbo.Sales and a staging table named stg.Sales that has the matching table and partition definitions. You need to overwrite the content of the first partition in dbo.Sales with the content of the same partition in stg.Sales. The solution must minimize load times. What should you do?

A. Switch the first partition from dbo.Sales to stg.Sales.

B. Switch the first partition from stg.Sales to dbo.Sales.

C. Update dbo.Sales from stg.Sales.

D. Insert the data from stg.Sales into dbo.Sales.

Answer: B

NEW QUESTION 96

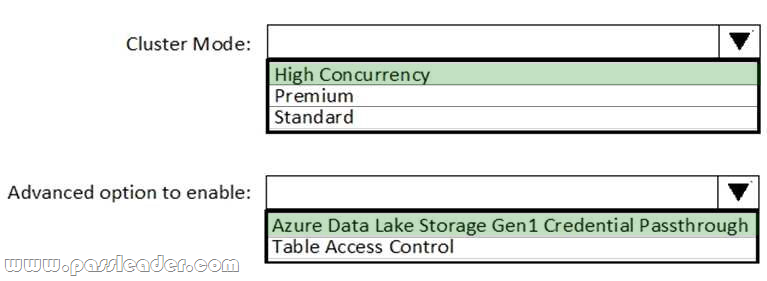

HotSpot

You need to implement an Azure Databricks cluster that automatically connects to Azure Data Lake Storage Gen2 by using Azure Active Directory (Azure AD) integration. How should you configure the new cluster? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

Box 1: High Concurrency. Enable Azure Data Lake Storage credential passthrough for a high-concurrency cluster.

Incorrect:

– Support for Azure Data Lake Storage credential passthrough on standard clusters is in Public Preview.

– Standard clusters with credential passthrough are supported on Databricks Runtime 5.5 and above and are limited to a single user.

Box 2: Azure Data Lake Storage Gen1 Credential Passthrough. You can authenticate automatically to Azure Data Lake Storage Gen1 and Azure Data Lake Storage Gen2 from Azure Databricks clusters using the same Azure Active Directory (Azure AD) identity that you use to log into Azure Databricks. When you enable your cluster for Azure Data Lake Storage credential passthrough, commands that you run on that cluster can read and write data in Azure Data Lake Storage without requiring you to configure service principal credentials for access to storage.

https://docs.azuredatabricks.net/spark/latest/data-sources/azure/adls-passthrough.html

NEW QUESTION 97

Drag and Drop

You plan to monitor an Azure data factory by using the Monitor & Manage app. You need to identify the status and duration of activities that reference a table in a source database. Which three actions should you perform in sequence? (To answer, move the actions from the list of actions to the answer area and arrange them in the correct order.)

Answer:

Explanation:

Step 1: From the Data Factory authoring UI, generate a user property for Source on all activities.

Step 2: From the Data Factory monitoring app, add the Source user property to Activity Runs table. You can promote any pipeline activity property as a user property so that it becomes an entity that you can monitor. For example, you can promote the Source and Destination properties of the copy activity in your pipeline as user properties. You can also select Auto Generate to generate the Source and Destination user properties for a copy activity.

Step 3: From the Data Factory authoring UI, publish the pipelines. Publish output data to data stores such as Azure SQL Data Warehouse for business intelligence (BI) applications to consume.

https://docs.microsoft.com/en-us/azure/data-factory/monitor-visually

NEW QUESTION 98

……
