Valid DP-201 dumps shared by PassLeader to help you pass the DP-201 exam! PassLeader now offers the newest DP-201 VCE and PDF dumps; the PassLeader DP-201 exam questions have been updated and the answers corrected. Get the newest PassLeader DP-201 dumps in VCE and PDF format here: https://www.passleader.com/dp-201.html (179 Q&As Dumps –> 201 Q&As Dumps –> 223 Q&As Dumps)

BTW, you can download part of the PassLeader DP-201 dumps from cloud storage: https://drive.google.com/open?id=1VdzP5HksyU93Arqn65qPe5UFEm2Sxooh

NEW QUESTION 161

You design data engineering solutions for a company that has locations around the world. You plan to deploy a large set of data to Azure Cosmos DB. The data must be accessible from all company locations. You need to recommend a strategy for deploying the data that minimizes latency for data read operations and minimizes costs. What should you recommend?

A. Use a single Azure Cosmos DB account. Enable multi-region writes.

B. Use a single Azure Cosmos DB account. Configure data replication.

C. Use multiple Azure Cosmos DB accounts. For each account, configure the location to the closest Azure datacenter.

D. Use a single Azure Cosmos DB account. Enable geo-redundancy.

E. Use multiple Azure Cosmos DB accounts. Enable multi-region writes.

Answer: A

Explanation:

https://docs.microsoft.com/en-us/azure/cosmos-db/high-availability

NEW QUESTION 162

You are designing security for administrative access to Azure SQL Data Warehouse. You need to recommend a solution to ensure that administrators use two-factor authentication when accessing the data warehouse from Microsoft SQL Server Management Studio (SSMS). What should you include in the recommendation?

A. Azure conditional access policies.

B. Azure Active Directory (Azure AD) Privileged Identity Management (PIM).

C. Azure Key Vault secrets.

D. Azure Active Directory (Azure AD) Identity Protection.

Answer: A

Explanation:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-conditional-access

NEW QUESTION 163

A company purchases IoT devices to monitor manufacturing machinery. The company uses an IoT appliance to communicate with the IoT devices. The company must be able to monitor the devices in real-time. You need to design the solution. What should you recommend?

A. Azure Data Factory instance using Azure PowerShell.

B. Azure Analysis Services using Microsoft Visual Studio.

C. Azure Stream Analytics cloud job using Azure PowerShell.

D. Azure Data Factory instance using Microsoft Visual Studio.

Answer: C

Explanation:

Stream Analytics is a cost-effective event processing engine that helps uncover real-time insights from devices, sensors, infrastructure, applications, and data quickly and easily. You can monitor and manage Stream Analytics resources with Azure PowerShell cmdlets and with PowerShell scripts that execute basic Stream Analytics tasks.

https://cloudblogs.microsoft.com/sqlserver/2014/10/29/microsoft-adds-iot-streaming-analytics-data-production-and-workflow-services-to-azure/

NEW QUESTION 164

A company purchases IoT devices to monitor manufacturing machinery. The company uses an IoT appliance to communicate with the IoT devices. The company must be able to monitor the devices in real-time. You need to design the solution. What should you recommend?

A. Azure Data Factory instance using Azure PowerShell.

B. Azure Analysis Services using Microsoft Visual Studio.

C. Azure Stream Analytics Edge application using Microsoft Visual Studio.

D. Azure Analysis Services using Azure PowerShell.

Answer: C

Explanation:

Azure Stream Analytics (ASA) on IoT Edge empowers developers to deploy near-real-time analytical intelligence closer to IoT devices so that they can unlock the full value of device-generated data.

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-edge

NEW QUESTION 165

You plan to ingest streaming social media data by using Azure Stream Analytics. The data will be stored in files in Azure Data Lake Storage, and then consumed by using Azure Databricks and PolyBase in Azure SQL Data Warehouse. You need to recommend a Stream Analytics data output format to ensure that the queries from Databricks and PolyBase against the files encounter the fewest possible errors. The solution must ensure that the files can be queried quickly and that the data type information is retained. What should you recommend?

A. Avro

B. CSV

C. Parquet

D. JSON

Answer: A

Explanation:

The Avro format is well suited to data and message preservation. Avro's schema, with its support for schema evolution, is essential for making data robust in streaming architectures such as Kafka, and the metadata the schema provides lets you reason about the data. Because Avro records carry their schema, they are self-documenting.

http://cloudurable.com/blog/avro/index.html

NEW QUESTION 166

You have an Azure SQL database that has columns. The columns contain sensitive Personally Identifiable Information (PII) data. You need to design a solution that tracks and stores all the queries executed against the PII data. You must be able to review the data in Azure Monitor, and the data must be available for at least 45 days.

Solution: You add classifications to the columns that contain sensitive data. You turn on Auditing and set the audit log destination to use Azure Log Analytics.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

By default, Log Analytics retains data for only 31 days. Its retention settings can be configured from a minimum of 31 days (on paid tiers) up to 730 days, so you would need to raise retention to at least 45 days or, for example, use Azure Blob Storage as the audit log destination instead.
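The retention reasoning above comes down to a simple comparison. The sketch below is illustrative only: the bounds (31 to 730 days) are the figures quoted in the explanation, and the function name `retention_is_sufficient` is hypothetical, not an Azure API.

```python
# Retention bounds as quoted in the explanation (paid tiers); assumptions, not read from Azure.
MIN_RETENTION_DAYS = 31
MAX_RETENTION_DAYS = 730
REQUIRED_DAYS = 45  # requirement stated in the question


def retention_is_sufficient(configured_days: int, required_days: int = REQUIRED_DAYS) -> bool:
    """Return True if a Log Analytics retention setting keeps audit data long enough."""
    if not MIN_RETENTION_DAYS <= configured_days <= MAX_RETENTION_DAYS:
        raise ValueError(
            f"retention must be between {MIN_RETENTION_DAYS} and {MAX_RETENTION_DAYS} days"
        )
    return configured_days >= required_days


# The default setting fails the 45-day requirement; raising retention to 45 days satisfies it.
print(retention_is_sufficient(31))  # False
print(retention_is_sufficient(45))  # True
```

This is why the proposed solution, left at default retention, does not meet the goal.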

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-auditing

https://blogs.msdn.microsoft.com/canberrapfe/2017/01/25/change-oms-log-analytics-retention-period-in-the-azure-portal/

NEW QUESTION 167

You have an Azure SQL database that has columns. The columns contain sensitive Personally Identifiable Information (PII) data. You need to design a solution that tracks and stores all the queries executed against the PII data. You must be able to review the data in Azure Monitor, and the data must be available for at least 45 days.

Solution: You execute a daily stored procedure that retrieves queries from Query Store, looks up the column classifications, and stores the results in a new table in the database.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

Instead, add classifications to the columns that contain sensitive data and turn on Auditing. Note: Auditing has been enhanced to log the sensitivity classifications (labels) of the actual data returned by the query, which lets you gain insight into who is accessing sensitive data.

https://azure.microsoft.com/en-us/blog/announcing-public-preview-of-data-discovery-classification-for-microsoft-azure-sql-data-warehouse/

NEW QUESTION 168

You have an Azure Storage account. You plan to copy one million image files to the storage account. You plan to share the files with an external partner organization. The partner organization will analyze the files during the next year. You need to recommend an external access solution for the storage account. The solution must meet the following requirements:

– Ensure that only the partner organization can access the storage account.

– Ensure that access of the partner organization is removed automatically after 365 days.

What should you include in the recommendation?

A. shared keys

B. Azure Blob storage lifecycle management policies

C. Azure policies

D. shared access signature (SAS)

Answer: D

Explanation:

A shared access signature (SAS) is a URI that grants restricted access rights to Azure Storage resources. You can provide a shared access signature to clients who should not be trusted with your storage account key but to whom you wish to delegate access to certain storage account resources. By distributing a shared access signature URI to these clients, you can grant them access to a resource for a specified period of time, with a specified set of permissions.
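To illustrate why a SAS satisfies the "removed automatically after 365 days" requirement, here is a simplified, hypothetical model of an expiring signed token: the issuer signs the permissions and expiry with the account key (HMAC-SHA256), and validation fails once the expiry passes or the token is tampered with. The query parameter names `sp`, `se`, and `sig` mirror real SAS parameters, but the string-to-sign here is deliberately simplified and is NOT the actual Azure Storage format; use the Azure SDK to generate real tokens.

```python
import base64
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def _sign(key: bytes, permissions: str, expiry: datetime) -> str:
    # Simplified string-to-sign (assumption): permissions and expiry only.
    string_to_sign = f"{permissions}\n{expiry.strftime(TIME_FORMAT)}"
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def issue_token(key: bytes, permissions: str, issued_at: datetime, lifetime_days: int = 365) -> dict:
    """Issue a token that stops working lifetime_days after issued_at."""
    expiry = issued_at + timedelta(days=lifetime_days)
    return {"sp": permissions, "se": expiry.strftime(TIME_FORMAT), "sig": _sign(key, permissions, expiry)}


def is_valid(key: bytes, token: dict, now: datetime) -> bool:
    """Check the signature first, then reject the token once it has expired."""
    expiry = datetime.strptime(token["se"], TIME_FORMAT).replace(tzinfo=timezone.utc)
    expected = _sign(key, token["sp"], expiry)
    if not hmac.compare_digest(expected, token["sig"]):
        return False  # permissions or expiry were altered
    return now < expiry


key = b"hypothetical-account-key"
issued = datetime(2024, 1, 1, tzinfo=timezone.utc)
token = issue_token(key, "rl", issued)  # read + list, valid for 365 days
print(is_valid(key, token, issued + timedelta(days=364)))  # True
print(is_valid(key, token, issued + timedelta(days=366)))  # False
```

No revocation job is needed: access lapses on its own once the signed expiry passes, which is the property the question relies on.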

https://docs.microsoft.com/en-us/rest/api/storageservices/delegate-access-with-shared-access-signature

NEW QUESTION 169

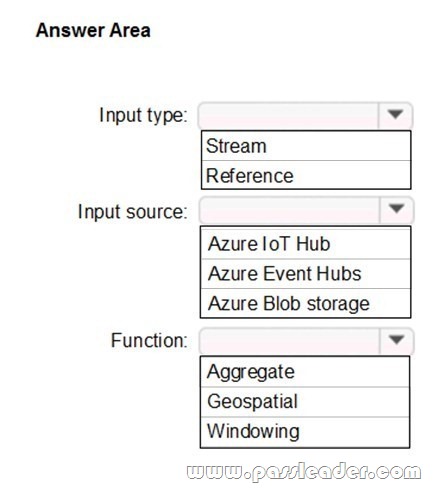

Hotspot

You plan to create a real-time monitoring app that alerts users when a device travels more than 200 meters away from a designated location. You need to design an Azure Stream Analytics job to process the data for the planned app. The solution must minimize the amount of code developed and the number of technologies used. What should you include in the Stream Analytics job? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

Input type: Stream. You can process real-time IoT data streams with Azure Stream Analytics.

Input source: Azure IoT Hub. In a real-world scenario, hundreds of sensors could be generating events as a stream. Ideally, a gateway device would run code to push these events to Azure Event Hubs or Azure IoT Hub.

Function: Geospatial. With built-in geospatial functions, you can use Azure Stream Analytics to build applications for scenarios such as fleet management, ride sharing, connected cars, and asset tracking.
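In the Stream Analytics query itself, the 200-meter check would typically use the built-in geospatial functions, for example `ST_DISTANCE(CreatePoint(...), CreatePoint(...)) > 200`, since `ST_DISTANCE` returns meters. The Python sketch below only models that check outside Stream Analytics, using the haversine formula on a spherical Earth; the radius constant and the function names are assumptions, and `ST_DISTANCE`'s internal Earth model may differ slightly.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius (spherical approximation)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def should_alert(device: tuple, anchor: tuple, threshold_m: float = 200.0) -> bool:
    """Hypothetical alert rule: True when the device is more than threshold_m from the anchor."""
    return haversine_m(*device, *anchor) > threshold_m


anchor = (0.0, 0.0)
print(should_alert((0.001, 0.0), anchor))  # about 111 m away: False
print(should_alert((0.002, 0.0), anchor))  # about 222 m away: True
```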

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-get-started-with-azure-stream-analytics-to-process-data-from-iot-devices

https://docs.microsoft.com/en-us/azure/stream-analytics/geospatial-scenarios

NEW QUESTION 170

Hotspot

The following code segment is used to create an Azure Databricks cluster:

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Answer:

Explanation:

The autotermination_minutes setting automatically terminates the cluster after it has been inactive for the specified number of minutes. If it is not set, the cluster is not terminated automatically. If specified, the threshold must be between 10 and 10000 minutes; you can also set it to 0 to explicitly disable automatic termination.
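The auto-termination rule above can be expressed as a small validation step before submitting a cluster-create request. This sketch builds a payload using field names from the Databricks Clusters API (`cluster_name`, `spark_version`, `node_type_id`, `num_workers`, `autotermination_minutes`); the runtime version, VM size, and the helper name `build_cluster_spec` are hypothetical placeholders, and nothing is sent to Databricks.

```python
from typing import Any, Dict, Optional


def build_cluster_spec(
    cluster_name: str,
    autotermination_minutes: Optional[int] = None,
    num_workers: int = 2,
) -> Dict[str, Any]:
    """Build a hypothetical cluster-create payload, enforcing the auto-termination rule."""
    spec: Dict[str, Any] = {
        "cluster_name": cluster_name,
        "spark_version": "7.3.x-scala2.12",  # hypothetical runtime version
        "node_type_id": "Standard_DS3_v2",  # hypothetical Azure VM size
        "num_workers": num_workers,
    }
    if autotermination_minutes is None:
        # Field omitted: the cluster is not terminated automatically.
        return spec
    # 0 explicitly disables auto-termination; otherwise 10..10000 minutes is allowed.
    if autotermination_minutes != 0 and not 10 <= autotermination_minutes <= 10000:
        raise ValueError("autotermination_minutes must be 0 or between 10 and 10000")
    spec["autotermination_minutes"] = autotermination_minutes
    return spec


print(build_cluster_spec("demo", 120)["autotermination_minutes"])  # 120
```

Validating locally mirrors the documented constraint, so a value such as 5 is rejected before the request is ever made.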

https://docs.databricks.com/dev-tools/api/latest/clusters.html

Case Study – Trey Research 2

NEW QUESTION 171

You need to design the solution for the government planning department. Which services should you include in the design?

A. Azure Synapse Analytics and Elastic Queries

B. Azure SQL Database and Polybase

C. Azure Synapse Analytics and Polybase

D. Azure SQL Database and Elastic Queries

Answer: C

Explanation:

PolyBase, introduced in SQL Server 2016, lets you use T-SQL to query both relational data and external non-relational data, such as CSV files.

https://www.sqlshack.com/sql-server-2016-polybase-tutorial/

NEW QUESTION 172

You need to design the unauthorized data usage detection system. What Azure service should you include in the design?

A. Azure Analysis Services

B. Azure SQL Data Warehouse

C. Azure Databricks

D. Azure Data Factory

Answer: B

Explanation:

SQL threat detection, part of Advanced Threat Protection for Azure SQL Database and SQL Data Warehouse, detects anomalous activities that indicate unusual and potentially harmful attempts to access or exploit databases.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-threat-detection-overview

NEW QUESTION 173

……

Case Study – Litware, Inc.

NEW QUESTION 176

Which Azure service should you recommend for the analytical data store so that the business analysts and data scientists can execute ad hoc queries as quickly as possible?

A. Azure Data Lake Storage Gen2

B. Azure Cosmos DB

C. Azure SQL Database

D. Azure Synapse Analytics

Answer: A

NEW QUESTION 177

What should you do to improve high availability of the real-time data processing solution?

A. Deploy identical Azure Stream Analytics jobs to paired regions in Azure.

B. Deploy a High Concurrency Databricks cluster.

C. Deploy an Azure Stream Analytics job and use an Azure Automation runbook to check the status of the job and to start the job if it stops.

D. Set Data Lake Storage to use geo-redundant storage (GRS).

Answer: A

Explanation:

Guarantee Stream Analytics job reliability during service updates

Part of being a fully managed service is the capability to introduce new service functionality and improvements at a rapid pace. As a result, Stream Analytics can have a service update deployed on a weekly (or more frequent) basis. No matter how much testing is done, there is still a risk that an existing, running job may break due to the introduction of a bug. If you are running mission-critical jobs, these risks need to be avoided. You can reduce this risk by following Azure's paired region model.

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-job-reliability

NEW QUESTION 178

……
