Valid DP-200 dumps shared by PassLeader to help you pass the DP-200 exam! PassLeader now offers the newest DP-200 VCE dumps and DP-200 PDF dumps. The PassLeader DP-200 exam questions have been updated and the answers have been corrected. Get the newest PassLeader DP-200 dumps with VCE and PDF here: https://www.passleader.com/dp-200.html (241 Q&As Dumps –> 256 Q&As Dumps –> 272 Q&As Dumps)

BTW, DOWNLOAD part of PassLeader DP-200 dumps from Cloud Storage: https://drive.google.com/open?id=1CTHwJ44u5lT4tsb2qo8oThaQ5c_vwun1

NEW QUESTION 107

You are developing a data engineering solution for a company. The solution will store a large set of key-value pair data by using Microsoft Azure Cosmos DB. The solution has the following requirements:

– Data must be partitioned into multiple containers.

– Data containers must be configured separately.

– Data must be accessible from applications hosted around the world.

– The solution must minimize latency.

You need to provision Azure Cosmos DB.

A. Configure account-level throughput.

B. Provision an Azure Cosmos DB account with the Azure Table API. Enable geo-redundancy.

C. Configure table-level throughput.

D. Replicate the data globally by manually adding regions to the Azure Cosmos DB account.

E. Provision an Azure Cosmos DB account with the Azure Table API. Enable multi-region writes.

Answer: E

Explanation:

Scale read and write throughput globally. You can enable every region to be writable and elastically scale reads and writes all around the world. The throughput that your application configures on an Azure Cosmos database or a container is guaranteed to be delivered across all regions associated with your Azure Cosmos account. The provisioned throughput is guaranteed by financially backed SLAs.

https://docs.microsoft.com/en-us/azure/cosmos-db/distribute-data-globally

NEW QUESTION 108

A company has a SaaS solution that uses Azure SQL Database with elastic pools. The solution will have a dedicated database for each customer organization. Customer organizations have peak usage at different periods during the year. Which two factors affect your costs when sizing the Azure SQL Database elastic pools? (Each correct answer presents a complete solution. Choose two.)

A. maximum data size

B. number of databases

C. eDTUs consumption

D. number of read operations

E. number of transactions

Answer: AC

Explanation:

A: With the vCore purchase model, in the General Purpose tier, you are charged for Premium blob storage that you provision for your database or elastic pool. Storage can be configured between 5 GB and 4 TB with 1 GB increments. Storage is priced at GB/month.

C: In the DTU purchase model, elastic pools are available in basic, standard and premium service tiers. Each tier is distinguished primarily by its overall performance, which is measured in elastic Database Transaction Units (eDTUs).

https://azure.microsoft.com/en-in/pricing/details/sql-database/elastic/

NEW QUESTION 109

A company runs Microsoft SQL Server in an on-premises virtual machine (VM). You must migrate the database to Azure SQL Database. You synchronize users from Active Directory to Azure Active Directory (Azure AD). You need to configure Azure SQL Database to use an Azure AD user as administrator. What should you configure?

A. For each Azure SQL Database, set the Access Control to administrator.

B. For each Azure SQL Database server, set the Active Directory to administrator.

C. For each Azure SQL Database, set the Active Directory administrator role.

D. For each Azure SQL Database server, set the Access Control to administrator.

Answer: C

Explanation:

There are two administrative accounts (Server admin and Active Directory admin) that act as administrators. One Azure Active Directory account, either an individual or security group account, can also be configured as an administrator. It is optional to configure an Azure AD administrator, but an Azure AD administrator must be configured if you want to use Azure AD accounts to connect to SQL Database.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-manage-logins

NEW QUESTION 110

You have an Azure SQL database named DB1 that contains a table named Table1. Table1 has a field named Customer_ID that is varchar(22). You need to implement masking for the Customer_ID field to meet the following requirements:

– The first two prefix characters must be exposed.

– The last four characters must be exposed.

– All other characters must be masked.

Solution: You implement data masking and use a credit card function mask.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

Must use Custom Text data masking, which exposes the first and last characters and adds a custom padding string in the middle.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-dynamic-data-masking-get-started
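
As a rough illustration of what a custom text mask does, the sketch below mimics the behavior in plain Python: it keeps a prefix and a suffix and substitutes a fixed padding string for everything in between. The function name `custom_text_mask`, the padding `XXXX`, and the sample ID are hypothetical. In Azure SQL Database the mask is applied by the database engine, not by application code, and the handling of values too short to have a hidden middle is a simplifying assumption here.

```python
def custom_text_mask(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Expose the first `prefix` and last `suffix` characters; replace the rest with `padding`."""
    # Simplifying assumption: a value with no hidden middle is replaced by the padding alone.
    if len(value) <= prefix + suffix:
        return padding
    tail = value[-suffix:] if suffix > 0 else ""
    return value[:prefix] + padding + tail


# Hypothetical Customer_ID: first two and last four characters stay visible.
masked = custom_text_mask("AB1234567890WXYZ", prefix=2, padding="XXXX", suffix=4)
print(masked)
```

The credit card, email, and random number masks each apply a fixed pattern, so none of them can be configured to expose exactly two leading and four trailing characters.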

NEW QUESTION 111

You have an Azure SQL database named DB1 that contains a table named Table1. Table1 has a field named Customer_ID that is varchar(22). You need to implement masking for the Customer_ID field to meet the following requirements:

– The first two prefix characters must be exposed.

– The last four characters must be exposed.

– All other characters must be masked.

Solution: You implement data masking and use an email function mask.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

Must use Custom Text data masking, which exposes the first and last characters and adds a custom padding string in the middle.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-dynamic-data-masking-get-started

NEW QUESTION 112

You have an Azure SQL database named DB1 that contains a table named Table1. Table1 has a field named Customer_ID that is varchar(22). You need to implement masking for the Customer_ID field to meet the following requirements:

– The first two prefix characters must be exposed.

– The last four characters must be exposed.

– All other characters must be masked.

Solution: You implement data masking and use a random number function mask.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

Must use Custom Text data masking, which exposes the first and last characters and adds a custom padding string in the middle.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-dynamic-data-masking-get-started

NEW QUESTION 113

You plan to create a dimension table in Azure SQL Data Warehouse that will be less than 1 GB. You need to create the table to meet the following requirements:

– Provide the fastest query time.

– Minimize data movement.

Which type of table should you use?

A. hash distributed

B. heap

C. replicated

D. round-robin

Answer: C

Explanation:

A replicated table keeps a full copy of the table on every compute node, so joins against it need no data movement, which gives the fastest query times for small tables. Microsoft's guidance is to consider replicated tables for dimension tables smaller than 2 GB compressed. Round-robin tables guarantee a uniform data distribution, but joins against them usually require data to be moved between distributions. Hash distribution suits large fact tables; small dimension tables can leave some distributions empty when hash distributed.

https://blogs.msdn.microsoft.com/sqlcat/2015/08/11/choosing-hash-distributed-table-vs-round-robin-distributed-table-in-azure-sql-dw-service/
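
The difference in row placement between round-robin and hash distribution can be made concrete with a small simulation. This is only a sketch: CRC32 stands in for the engine's internal hash function, and the dimension table with ten distinct `region` values is hypothetical. The count of 60 distributions matches how Azure SQL Data Warehouse spreads a distributed table.

```python
import zlib
from collections import Counter

DISTRIBUTIONS = 60  # a distributed table is spread over 60 distributions


def round_robin(rows):
    """Assign rows to distributions in turn, ignoring their content."""
    return Counter(i % DISTRIBUTIONS for i, _ in enumerate(rows))


def hash_distributed(rows, key):
    """Assign each row by a deterministic hash of its key column (CRC32 as a stand-in)."""
    return Counter(zlib.crc32(str(row[key]).encode()) % DISTRIBUTIONS for row in rows)


# Hypothetical dimension table: 1,000 rows but only 10 distinct region codes.
rows = [{"id": i, "region": f"R{i % 10}"} for i in range(1000)]

rr = round_robin(rows)
hd = hash_distributed(rows, "region")

print("round-robin distributions used:", len(rr))
print("round-robin max-min rows per distribution:", max(rr.values()) - min(rr.values()))
print("hash-on-region distributions used:", len(hd))
```

The simulation covers row placement only. It does not model the data movement needed at join time, which is the cost a replicated table avoids by storing a full copy on each compute node.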

NEW QUESTION 114

You plan to implement an Azure Cosmos DB database that will write 100,000 JSON documents every 24 hours. The database will be replicated to three regions. Only one region will be writable. You need to select a consistency level for the database to meet the following requirements:

– Guarantee monotonic reads and writes within a session.

– Provide the fastest throughput.

– Provide the lowest latency.

Which consistency level should you select?

A. Strong

B. Bounded Staleness

C. Eventual

D. Session

E. Consistent Prefix

Answer: D

Explanation:

Session: Within a single client session reads are guaranteed to honor the consistent-prefix (assuming a single “writer” session), monotonic reads, monotonic writes, read-your-writes, and write-follows-reads guarantees. Clients outside of the session performing writes will see eventual consistency.

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

NEW QUESTION 115

You use Azure Stream Analytics to receive Twitter data from Azure Event Hubs and to output the data to an Azure Blob storage account. You need to output the count of tweets during the last five minutes every five minutes. Each tweet must only be counted once. Which windowing function should you use?

A. a five-minute Session window

B. a five-minute Sliding window

C. a five-minute Tumbling window

D. a five-minute Hopping window that has one-minute hop

Answer: C

Explanation:

Tumbling window functions segment a data stream into distinct time segments and perform a function against them. The key differentiators of a tumbling window are that the windows repeat, do not overlap, and an event cannot belong to more than one tumbling window.

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-window-functions
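
The non-overlapping property can be sketched in a few lines of Python. This is an illustration of the windowing idea, not Stream Analytics code: the function name `tumbling_counts` and the sample timestamps are hypothetical, and an event that falls exactly on a boundary is assigned to the window that starts there, which is a simplification.

```python
from collections import Counter
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=5)
EPOCH = datetime(1970, 1, 1)


def tumbling_counts(timestamps, window=WINDOW):
    """Count events per fixed, non-overlapping window; returns {window_end: count}."""
    size = int(window.total_seconds())
    counts = Counter()
    for ts in timestamps:
        # Floor the timestamp to the start of its window, so each event lands in exactly one window.
        offset = int((ts - EPOCH).total_seconds())
        window_start = EPOCH + timedelta(seconds=(offset // size) * size)
        counts[window_start + window] += 1
    return dict(counts)


# Hypothetical tweet arrival times.
tweets = [
    datetime(2019, 1, 1, 12, 0, 30),
    datetime(2019, 1, 1, 12, 4, 59),
    datetime(2019, 1, 1, 12, 5, 0),
    datetime(2019, 1, 1, 12, 9, 10),
    datetime(2019, 1, 1, 12, 12, 0),
]
counts = tumbling_counts(tweets)
for window_end, n in sorted(counts.items()):
    print(window_end, n)
```

Because every tweet maps to a single window, the per-window counts always add up to the total number of tweets. A hopping window with a one-minute hop would count the same tweet in several overlapping windows.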

NEW QUESTION 116

You are developing a solution that will stream to Azure Stream Analytics. The solution will have both streaming data and reference data. Which input type should you use for the reference data?

A. Azure Cosmos DB

B. Azure Event Hubs

C. Azure Blob storage

D. Azure IoT Hub

Answer: C

Explanation:

Stream Analytics supports Azure Blob storage and Azure SQL Database as the storage layer for Reference Data.

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-use-reference-data
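
To show what reference data is used for, the sketch below joins a stream of events to a static lookup set. It is plain Python rather than a Stream Analytics query, and the device IDs, field names, and `enrich` function are all hypothetical. In a real job the lookup set would be loaded from a reference data input such as a blob.

```python
# Reference data: a static or slowly changing lookup set, loaded once.
reference = {"dev-1": "Building A", "dev-2": "Building B"}


def enrich(events, reference):
    """Join each streaming event to the reference row with the same device ID (inner join)."""
    for event in events:
        location = reference.get(event["device_id"])
        if location is not None:
            yield {**event, "location": location}


# Hypothetical streaming events; dev-3 has no reference row and is dropped by the join.
stream = [
    {"device_id": "dev-1", "temp": 21.5},
    {"device_id": "dev-3", "temp": 19.0},
    {"device_id": "dev-2", "temp": 22.1},
]
enriched = list(enrich(stream, reference))
for row in enriched:
    print(row)
```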

NEW QUESTION 117

You have an Azure Storage account and an Azure SQL data warehouse. You need to copy data from the storage account to the data warehouse by using Azure Data Factory. The solution must meet the following requirements:

– Ensure that the data remains in the UK South region at all times.

– Minimize administrative effort.

Which type of integration runtime should you use?

A. Azure integration runtime

B. Self-hosted integration runtime

C. Azure-SSIS integration runtime

Answer: A

Explanation:

https://docs.microsoft.com/en-us/azure/data-factory/concepts-integration-runtime

NEW QUESTION 118

You plan to perform batch processing in Azure Databricks once daily. Which type of Databricks cluster should you use?

A. job

B. interactive

C. high concurrency

Answer: A

Explanation:

Example: Scheduled batch workloads (data engineers running ETL jobs). This scenario involves running batch job JARs and notebooks on a regular cadence through the Databricks platform.

The suggested best practice is to launch a new cluster for each run of critical jobs. This helps avoid any issues (failures, missing SLA, and so on) due to an existing workload (noisy neighbor) on a shared cluster.

Note: Azure Databricks has two types of clusters: interactive and automated. You use interactive clusters to analyze data collaboratively with interactive notebooks. You use automated clusters to run fast and robust automated jobs.

https://docs.databricks.com/administration-guide/cloud-configurations/aws/cmbp.html#scenario-3-scheduled-batch-workloads-data-engineers-running-etl-jobs

NEW QUESTION 119

You have an Azure SQL database that has masked columns. You need to identify when a user attempts to infer data from the masked columns. What should you use?

A. Azure Advanced Threat Protection (ATP)

B. custom masking rules

C. Transparent Data Encryption (TDE)

D. auditing

Answer: D

Explanation:

Dynamic Data Masking is designed to simplify application development by limiting data exposure in a set of pre-defined queries used by the application. While Dynamic Data Masking can also be useful to prevent accidental exposure of sensitive data when accessing a production database directly, it is important to note that unprivileged users with ad-hoc query permissions can apply techniques to gain access to the actual data. If there is a need to grant such ad-hoc access, Auditing should be used to monitor all database activity and mitigate this scenario.

https://docs.microsoft.com/en-us/sql/relational-databases/security/dynamic-data-masking
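
The inference technique the explanation refers to can be sketched with a toy model. The assumption, stated in the code as well, is that query output is masked while filter predicates are still evaluated against the real values. The `MaskedTable` class, its methods, and the salary figure are hypothetical and only imitate that behavior. The `audit_log` list stands in for what auditing would record.

```python
class MaskedTable:
    """Toy model of a table with one masked numeric column.

    Assumption: output is masked, but predicates run against the real stored values.
    """

    def __init__(self, rows):
        self._rows = rows        # {name: real salary}
        self.audit_log = []      # every predicate issued, as a stand-in for auditing

    def select_salary(self, name):
        """Return the masked value (a default numeric mask shows zero)."""
        return 0

    def exists_where(self, name, low, high):
        """Report whether the real salary lies in [low, high], and log the probe."""
        self.audit_log.append((name, low, high))
        return low <= self._rows[name] <= high


def infer_salary(table, name, low=0, high=1_000_000):
    """Binary search over range predicates to recover a masked value in [low, high]."""
    while low < high:
        mid = (low + high) // 2
        if table.exists_where(name, low, mid):
            high = mid
        else:
            low = mid + 1
    return low


table = MaskedTable({"alice": 73250})
print("masked output:", table.select_salary("alice"))
print("inferred value:", infer_salary(table, "alice"))
print("probes recorded:", len(table.audit_log))
```

The masked column never reveals the value directly, yet a short series of range probes recovers it. The run of narrowing predicates against one row is the kind of pattern that auditing makes visible.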

NEW QUESTION 120

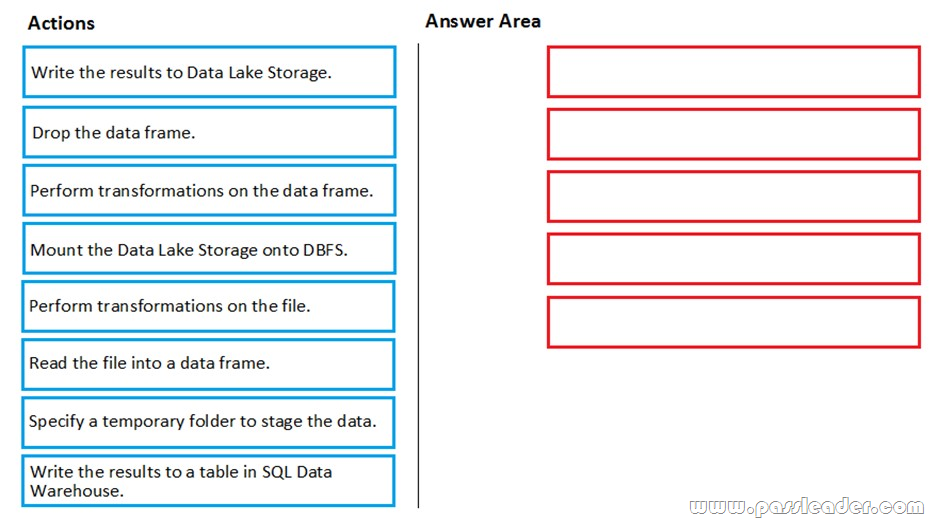

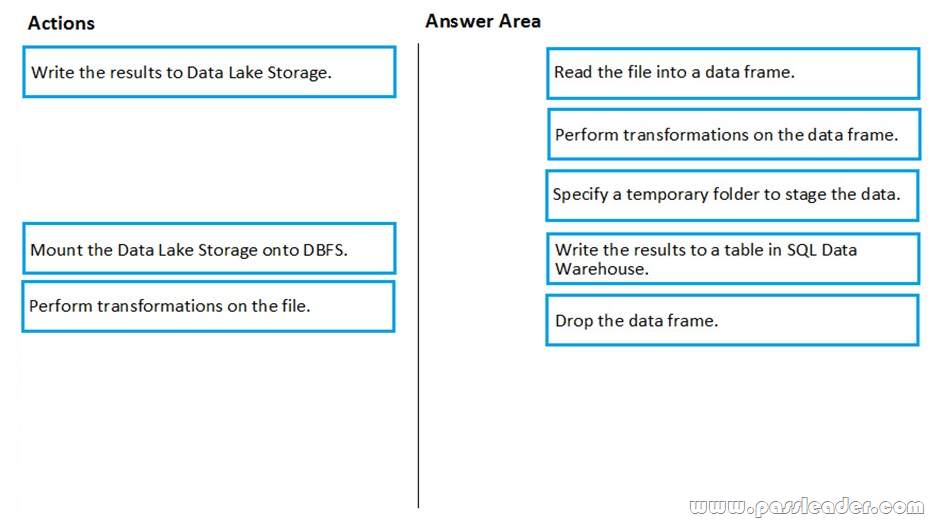

Drag and Drop

You have an Azure Data Lake Storage Gen2 account that contains JSON files for customers. The files contain two attributes named FirstName and LastName. You need to copy the data from the JSON files to an Azure SQL Data Warehouse table by using Azure Databricks. A new column must be created that concatenates the FirstName and LastName values. You create the following components:

– A destination table in SQL Data Warehouse

– An Azure Blob storage container

– A service principal

Which five actions should you perform in sequence next in a Databricks notebook? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Answer:

Explanation:

Step 1: Read the file into a data frame. You can load the json files as a data frame in Azure Databricks.

Step 2: Perform transformations on the data frame.

Step 3: Specify a temporary folder to stage the data. Specify a temporary folder to use while moving data between Azure Databricks and Azure SQL Data Warehouse.

Step 4: Write the results to a table in SQL Data Warehouse. You upload the transformed data frame into Azure SQL Data Warehouse. You use the Azure SQL Data Warehouse connector for Azure Databricks to directly upload a dataframe as a table in a SQL data warehouse.

Step 5: Drop the data frame. Clean up resources. You can terminate the cluster. From the Azure Databricks workspace, select Clusters on the left. For the cluster to terminate, under Actions, point to the ellipsis (…) and select the Terminate icon.

https://docs.microsoft.com/en-us/azure/azure-databricks/databricks-extract-load-sql-data-warehouse
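
Steps 1 and 2 can be illustrated without a cluster. The sketch below uses plain Python lists and dictionaries as a stand-in for a Databricks data frame, so it shows the shape of the transformation only. The sample records, the `add_full_name` function, and the `FullName` column name are hypothetical. Steps 3 to 5 depend on the SQL Data Warehouse connector and are not shown.

```python
import json

# Hypothetical stand-in for the JSON customer files in Data Lake Storage.
raw = '[{"FirstName": "Ada", "LastName": "Lovelace"}, {"FirstName": "Alan", "LastName": "Turing"}]'


def add_full_name(records):
    """Return new records with a FullName column built from FirstName and LastName."""
    return [{**r, "FullName": f'{r["FirstName"]} {r["LastName"]}'} for r in records]


customers = json.loads(raw)               # step 1: read the file contents
transformed = add_full_name(customers)    # step 2: add the concatenated column
for row in transformed:
    print(row)
```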

NEW QUESTION 121

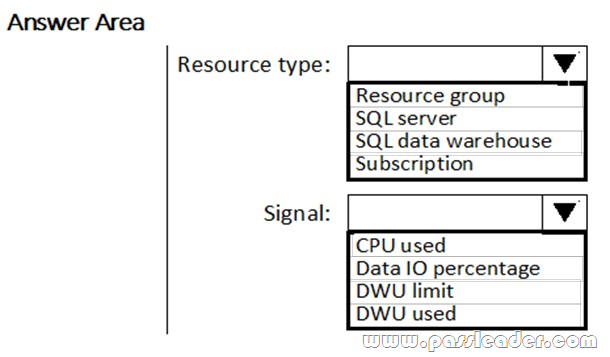

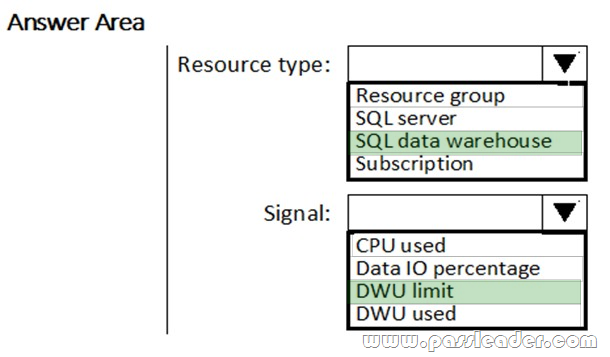

Hotspot

You need to receive an alert when Azure SQL Data Warehouse consumes the maximum allotted resources. Which resource type and signal should you use to create the alert in Azure Monitor? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

Resource type: SQL data warehouse. DWU limit belongs to the SQL data warehouse resource type.

Signal: DWU limit. SQL Data Warehouse capacity limits are maximum values allowed for various components of Azure SQL Data Warehouse.

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-insights-alerts-portal

NEW QUESTION 122

……
