Valid DP-100 dumps shared by PassLeader to help you pass the DP-100 exam! PassLeader now offers the newest DP-100 VCE dumps and DP-100 PDF dumps. The PassLeader DP-100 exam questions have been updated and the answers have been corrected. Get the newest PassLeader DP-100 dumps with VCE and PDF here: https://www.passleader.com/dp-100.html (239 Q&As Dumps –> 298 Q&As Dumps –> 315 Q&As Dumps)

BTW, DOWNLOAD part of PassLeader DP-100 dumps from Cloud Storage: https://drive.google.com/open?id=1f70QWrCCtvNby8oY6BYvrMS16IXuRiR2

NEW QUESTION 206

You train a machine learning model. You must deploy the model as a real-time inference service for testing. The service requires low CPU utilization and less than 48 MB of RAM. The compute target for the deployed service must initialize automatically while minimizing cost and administrative overhead. Which compute target should you use?

A. Azure Container Instance (ACI)

B. Attached Azure Databricks cluster

C. Azure Kubernetes Service (AKS) inference cluster

D. Azure Machine Learning compute cluster

Answer: A

Explanation:

Azure Container Instances (ACI) are suitable only for small models less than 1 GB in size. Use it for low-scale CPU-based workloads that require less than 48 GB of RAM. Note: Microsoft recommends using single-node Azure Kubernetes Service (AKS) clusters for dev-test of larger models.

https://docs.microsoft.com/id-id/azure/machine-learning/how-to-deploy-and-where

NEW QUESTION 207

You register a model that you plan to use in a batch inference pipeline. The batch inference pipeline must use a ParallelRunStep step to process files in a file dataset. The script that the ParallelRunStep step runs must process six input files each time the inferencing function is called. You need to configure the pipeline. Which configuration setting should you specify in the ParallelRunConfig object for the ParallelRunStep step?

A. process_count_per_node="6"

B. node_count="6"

C. mini_batch_size="6"

D. error_threshold="6"

Answer: C

Explanation:

mini_batch_size determines how much input is passed to each run() call. For FileDataset input, this field is the number of files the user script can process in one run() call, so a value of 6 makes the script process six files per call. For TabularDataset input, this field is the approximate size of data the user script can process in one run() call. Example values are 1024, 1024KB, 10MB, and 1GB.

Incorrect:

Not A: process_count_per_node. Number of processes executed on each node. (optional, default value is number of cores on node.)

Not B: node_count. The number of nodes in the compute target used for running the ParallelRunStep.

Not D: error_threshold. The number of record failures for TabularDataset and file failures for FileDataset that should be ignored during processing. If the error count goes above this value, then the job will be aborted.

https://docs.microsoft.com/en-us/python/api/azureml-contrib-pipeline-steps/azureml.contrib.pipeline.steps.parallelrunconfig?view=azure-ml-py
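
The effect of mini_batch_size on a file dataset can be sketched without the SDK. The snippet below is only a local simulation under stated assumptions: the file names are made up, `split_into_mini_batches` is a hypothetical helper, and `run` is a stand-in for the entry script's run() function. The real ParallelRunStep also distributes mini-batches across nodes and processes, which this sketch does not model.

```python
from typing import List


def split_into_mini_batches(files: List[str], mini_batch_size: int) -> List[List[str]]:
    """Group file paths into consecutive mini-batches of at most mini_batch_size files."""
    return [files[i:i + mini_batch_size] for i in range(0, len(files), mini_batch_size)]


def run(mini_batch: List[str]) -> List[str]:
    """Stand-in for the entry script's run() function: returns one result per input file."""
    return [f"{path}: scored" for path in mini_batch]


# Hypothetical input: 15 files in a file dataset.
files = [f"file_{n:02d}.csv" for n in range(15)]

# With a mini-batch size of 6, each run() call receives up to six files.
batches = split_into_mini_batches(files, mini_batch_size=6)
results = [line for batch in batches for line in run(batch)]

print([len(batch) for batch in batches])  # [6, 6, 3]
```

The last mini-batch is smaller because 15 is not a multiple of 6; a run() implementation should not assume it always receives exactly six files.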

NEW QUESTION 208

You deploy a real-time inference service for a trained model. The deployed model supports a business-critical application, and it is important to be able to monitor the data submitted to the web service and the predictions the data generates. You need to implement a monitoring solution for the deployed model using minimal administrative effort. What should you do?

A. View the explanations for the registered model in Azure ML studio.

B. Enable Azure Application Insights for the service endpoint and view logged data in the Azure portal.

C. View the log files generated by the experiment used to train the model.

D. Create an ML Flow tracking URI that references the endpoint, and view the data logged by ML Flow.

Answer: B

Explanation:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-enable-app-insights

NEW QUESTION 209

You create an Azure Machine Learning workspace. You are preparing a local Python environment on a laptop computer. You want to use the laptop to connect to the workspace and run experiments. You create the following config.json file:

{

"workspace_name": "ml-workspace"

}

You must use the Azure Machine Learning SDK to interact with data and experiments in the workspace. You need to configure the config.json file to connect to the workspace from the Python environment. Which two additional parameters must you add to the config.json file in order to connect to the workspace? (Each correct answer presents part of the solution. Choose two.)

A. login

B. resource_group

C. subscription_id

D. key

E. region

Answer: BC

Explanation:

To use the same workspace in multiple environments, create a JSON configuration file. The configuration file saves your subscription (subscription_id), resource group (resource_group), and workspace name (workspace_name) so that it can be easily loaded.

https://docs.microsoft.com/en-us/python/api/azureml-core/azureml.core.workspace.workspace
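
As a rough illustration, the following sketch writes a complete config.json and checks it for the three keys named above. The subscription ID and resource group name are placeholders, and `missing_keys` is a hypothetical helper, not part of the SDK.

```python
import json
import os
import tempfile

# The three values the configuration file is expected to carry.
REQUIRED_KEYS = {"subscription_id", "resource_group", "workspace_name"}


def missing_keys(config: dict) -> set:
    """Return the required keys that are absent from a config dictionary."""
    return REQUIRED_KEYS - set(config)


# The file from the question only names the workspace.
incomplete = {"workspace_name": "ml-workspace"}
print(sorted(missing_keys(incomplete)))  # ['resource_group', 'subscription_id']

# Placeholder values; substitute your own subscription and resource group.
complete = {
    "subscription_id": "00000000-0000-0000-0000-000000000000",
    "resource_group": "ml-resources",
    "workspace_name": "ml-workspace",
}

config_path = os.path.join(tempfile.mkdtemp(), "config.json")
with open(config_path, "w") as f:
    json.dump(complete, f, indent=2)

with open(config_path) as f:
    loaded = json.load(f)

# With azureml-core installed, Workspace.from_config(path=config_path)
# would be the usual way to load this file (not executed here).
```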

NEW QUESTION 210

You create an Azure Machine Learning compute resource to train models. The compute resource is configured as follows:

– Minimum nodes: 2

– Maximum nodes: 4

You must decrease the minimum number of nodes and increase the maximum number of nodes to the following values:

– Minimum nodes: 0

– Maximum nodes: 8

You need to reconfigure the compute resource. What are three possible ways to achieve this goal? (Each correct answer presents a complete solution. Choose three.)

A. Use the Azure Machine Learning studio.

B. Run the update method of the AmlCompute class in the Python SDK.

C. Use the Azure portal.

D. Use the Azure Machine Learning designer.

E. Run the refresh_state() method of the BatchCompute class in the Python SDK.

Answer: ABC

Explanation:

A: You can manage assets and resources in the Azure Machine Learning studio.

B: The update(min_nodes=None, max_nodes=None, idle_seconds_before_scaledown=None) method of the AmlCompute class updates the ScaleSettings for this AmlCompute target.

C: To change the nodes in the cluster, use the UI for your cluster in the Azure portal.

https://docs.microsoft.com/en-us/python/api/azureml-core/azureml.core.compute.amlcompute(class)
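
A minimal sketch of option B, assuming only the update() signature quoted above. `FakeCluster` is a stand-in so the example runs without a workspace, and `rescale` is a hypothetical wrapper; with the real SDK you would pass an AmlCompute object retrieved from your workspace instead.

```python
class FakeCluster:
    """Stand-in for an AmlCompute target; mirrors only the update() signature."""

    def __init__(self, min_nodes: int, max_nodes: int):
        self.min_nodes = min_nodes
        self.max_nodes = max_nodes

    def update(self, min_nodes=None, max_nodes=None, idle_seconds_before_scaledown=None):
        # Only overwrite the settings that were actually supplied.
        if min_nodes is not None:
            self.min_nodes = min_nodes
        if max_nodes is not None:
            self.max_nodes = max_nodes


def rescale(cluster, min_nodes: int, max_nodes: int) -> None:
    """Validate the requested range, then delegate to the cluster's update() method."""
    if min_nodes < 0 or max_nodes < min_nodes:
        raise ValueError("expected 0 <= min_nodes <= max_nodes")
    cluster.update(min_nodes=min_nodes, max_nodes=max_nodes)


# Start from the configuration in the question (2 to 4 nodes) and move to 0 to 8.
cluster = FakeCluster(min_nodes=2, max_nodes=4)
rescale(cluster, min_nodes=0, max_nodes=8)
print(cluster.min_nodes, cluster.max_nodes)  # 0 8
```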

NEW QUESTION 211

You create a new Azure subscription. No resources are provisioned in the subscription. You need to create an Azure Machine Learning workspace. What are three possible ways to achieve this goal? (Each correct answer presents a complete solution. Choose three.)

A. Run Python code that uses the Azure ML SDK library and calls the Workspace.create method with name, subscription_id, resource_group, and location parameters.

B. Use an Azure Resource Management template that includes a Microsoft.MachineLearningServices/workspaces resource and its dependencies.

C. Use the Azure Command Line Interface (CLI) with the Azure Machine Learning extension to call the az group create function with --name and --location parameters, and then the az ml workspace create function, specifying -w and -g parameters for the workspace name and resource group.

D. Navigate to Azure Machine Learning studio and create a workspace.

E. Run Python code that uses the Azure ML SDK library and calls the Workspace.get method with name, subscription_id, and resource_group parameters.

Answer: BCD

Explanation:

B: You can use an Azure Resource Manager template to create a workspace for Azure Machine Learning.

C: You can create a workspace for Azure Machine Learning with the Azure CLI after installing the machine learning extension.

D: You can create and manage Azure Machine Learning workspaces in the Azure portal.

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-workspace-template

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-manage-workspace-cli

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-manage-workspace

NEW QUESTION 212

An organization creates and deploys a multi-class image classification deep learning model that uses a set of labeled photographs. The software engineering team reports there is a heavy inferencing load for the prediction web services during the summer. The production web service for the model fails to meet demand despite having a fully utilized compute cluster where the web service is deployed. You need to improve performance of the image classification web service with minimal downtime and minimal administrative effort. What should you advise the IT Operations team to do?

A. Create a new compute cluster by using larger VM sizes for the nodes, redeploy the web service to that cluster, and update the DNS registration for the service endpoint to point to the new cluster.

B. Increase the node count of the compute cluster where the web service is deployed.

C. Increase the minimum node count of the compute cluster where the web service is deployed.

D. Increase the VM size of nodes in the compute cluster where the web service is deployed.

Answer: B

Explanation:

The Azure Machine Learning SDK does not support scaling an AKS cluster. To scale the nodes in the cluster, use the UI for your AKS cluster in the Azure Machine Learning studio. You can only change the node count, not the VM size of the cluster.

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-attach-kubernetes

NEW QUESTION 213

You use Azure Machine Learning designer to create a real-time service endpoint. You have a single Azure Machine Learning service compute resource. You train the model and prepare the real-time pipeline for deployment. You need to publish the inference pipeline as a web service. Which compute type should you use?

A. a new Machine Learning Compute resource

B. Azure Kubernetes Services

C. HDInsight

D. the existing Machine Learning Compute resource

E. Azure Databricks

Answer: B

Explanation:

Azure Kubernetes Service (AKS) can be used for real-time inference.

https://docs.microsoft.com/en-us/azure/machine-learning/concept-compute-target

NEW QUESTION 214

You plan to run a script as an experiment using a Script Run Configuration. The script uses modules from the scipy library as well as several Python packages that are not typically installed in a default conda environment. You plan to run the experiment on your local workstation for small datasets and scale out the experiment by running it on more powerful remote compute clusters for larger datasets. You need to ensure that the experiment runs successfully on local and remote compute with the least administrative effort. What should you do?

A. Do not specify an environment in the run configuration for the experiment. Run the experiment by using the default environment.

B. Create a virtual machine (VM) with the required Python configuration and attach the VM as a compute target. Use this compute target for all experiment runs.

C. Create and register an Environment that includes the required packages. Use this Environment for all experiment runs.

D. Create a config.yaml file defining the conda packages that are required and save the file in the experiment folder.

E. Always run the experiment with an Estimator by using the default packages.

Answer: C

Explanation:

If you have an existing Conda environment on your local computer, then you can use the service to create an environment object. By using this strategy, you can reuse your local interactive environment on remote runs.

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-use-environments

NEW QUESTION 215

You plan to use the Hyperdrive feature of Azure Machine Learning to determine the optimal hyperparameter values when training a model. You must use Hyperdrive to try combinations of the following hyperparameter values:

– learning_rate: any value between 0.001 and 0.1

– batch_size: 16, 32, or 64

You need to configure the search space for the Hyperdrive experiment. Which two parameter expressions should you use? (Each correct answer presents part of the solution. Choose two.)

A. a choice expression for learning_rate

B. a uniform expression for learning_rate

C. a normal expression for batch_size

D. a choice expression for batch_size

E. a uniform expression for batch_size

Answer: BD

Explanation:

B: Continuous hyperparameters are specified as a distribution over a continuous range of values.

D: Discrete hyperparameters are specified as a choice among discrete values.

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-tune-hyperparameters
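
The difference between the two expression types can be sketched in plain Python. The `uniform` and `choice` helpers below are local stand-ins named after the expressions in the question, not the SDK functions themselves, and the `sample` function is a hypothetical illustration of random sampling over the search space.

```python
import random


def uniform(low: float, high: float):
    """Continuous hyperparameter: any value in the range [low, high]."""
    return lambda rng: rng.uniform(low, high)


def choice(*options):
    """Discrete hyperparameter: exactly one of the listed values."""
    return lambda rng: rng.choice(options)


# Search space from the question.
search_space = {
    "learning_rate": uniform(0.001, 0.1),
    "batch_size": choice(16, 32, 64),
}


def sample(space: dict, rng: random.Random) -> dict:
    """Draw one hyperparameter combination from the search space."""
    return {name: draw(rng) for name, draw in space.items()}


rng = random.Random(0)  # fixed seed so repeated runs draw the same trials
trials = [sample(search_space, rng) for _ in range(5)]
for trial in trials:
    print(trial)
```

Every sampled learning_rate falls somewhere in the continuous range, while batch_size is always one of the three listed values.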

NEW QUESTION 216

You train a classification model by using a logistic regression algorithm. You must be able to explain the model’s predictions by calculating the importance of each feature, both as an overall global relative importance value and as a measure of local importance for a specific set of predictions. You need to create an explainer that you can use to retrieve the required global and local feature importance values.

Solution: Create a MimicExplainer.

Does the solution meet the goal?

A. Yes

B. No

Answer: A

Explanation:

A MimicExplainer can be used to retrieve both global and local feature importance values, so it meets the goal. A Permutation Feature Importance Explainer (PFI), by contrast, would not.

Note 1: Mimic explainer is based on the idea of training global surrogate models to mimic black box models. A global surrogate model is an intrinsically interpretable model that is trained to approximate the predictions of any black box model as accurately as possible. Data scientists can interpret the surrogate model to draw conclusions about the black box model.

Note 2: Permutation Feature Importance Explainer (PFI): Permutation Feature Importance is a technique used to explain classification and regression models. At a high level, the way it works is by randomly shuffling data one feature at a time for the entire dataset and calculating how much the performance metric of interest changes. The larger the change, the more important that feature is. PFI can explain the overall behavior of any underlying model but does not explain individual predictions.

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-machine-learning-interpretability

NEW QUESTION 217

You train a classification model by using a logistic regression algorithm. You must be able to explain the model’s predictions by calculating the importance of each feature, both as an overall global relative importance value and as a measure of local importance for a specific set of predictions. You need to create an explainer that you can use to retrieve the required global and local feature importance values.

Solution: Create a PFIExplainer.

Does the solution meet the goal?

A. Yes

B. No

Answer: B

Explanation:

Permutation Feature Importance Explainer (PFI): Permutation Feature Importance is a technique used to explain classification and regression models. At a high level, the way it works is by randomly shuffling data one feature at a time for the entire dataset and calculating how much the performance metric of interest changes. The larger the change, the more important that feature is. PFI can explain the overall behavior of any underlying model but does not explain individual predictions, so it cannot provide the required local feature importance values.

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-machine-learning-interpretability
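
The shuffling procedure described above can be sketched from scratch. This is a minimal illustration of the technique on toy data, not the PFIExplainer implementation: the model, the data, and the use of accuracy as the metric are all assumptions made for the example.

```python
import random
from typing import Callable, List

Row = List[float]


def accuracy(model: Callable[[Row], int], rows: List[Row], labels: List[int]) -> float:
    """Fraction of rows for which the model predicts the true label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)


def permutation_importance(model, rows, labels, n_features, rng) -> List[float]:
    """Drop in accuracy after shuffling each feature column across the whole dataset."""
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)  # break the link between feature j and the labels
        shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances


# Toy data: the label depends only on feature 0; feature 1 is noise.
rng = random.Random(0)
rows = [[rng.random(), rng.random()] for _ in range(200)]
labels = [int(row[0] > 0.5) for row in rows]


def model(row: Row) -> int:
    """Toy classifier that looks only at feature 0."""
    return int(row[0] > 0.5)


importances = permutation_importance(model, rows, labels, n_features=2, rng=rng)
print(importances)
```

The result is one number per feature for the dataset as a whole. Nothing in the procedure produces a per-prediction breakdown, which is why PFI yields global importance only.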

NEW QUESTION 218

You create a machine learning model by using the Azure Machine Learning designer. You publish the model as a real-time service on an Azure Kubernetes Service (AKS) inference compute cluster. You make no change to the deployed endpoint configuration. You need to provide application developers with the information they need to consume the endpoint. Which two values should you provide to application developers? (Each correct answer presents part of the solution. Choose two.)

A. The name of the AKS cluster where the endpoint is hosted.

B. The name of the inference pipeline for the endpoint.

C. The URL of the endpoint.

D. The run ID of the inference pipeline experiment for the endpoint.

E. The key for the endpoint.

Answer: CE

Explanation:

Deploying an Azure Machine Learning model as a web service creates a REST API endpoint. You can send data to this endpoint and receive the prediction returned by the model. You create a web service when you deploy a model to your local environment, Azure Container Instances, Azure Kubernetes Service, or field-programmable gate arrays (FPGA). You retrieve the URI used to access the web service by using the Azure Machine Learning SDK. If authentication is enabled, you can also use the SDK to get the authentication keys or tokens.

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-consume-web-service
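
To show why developers need both values, here is a sketch that builds (but does not send) a scoring request using only the standard library. The URL, key, and payload shape are placeholders; the actual input schema depends on how the endpoint was defined, and `build_scoring_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_scoring_request(endpoint_url: str, key: str, payload: dict) -> urllib.request.Request:
    """Build a POST request that carries the endpoint key as a bearer token."""
    body = json.dumps(payload).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {key}",
    }
    return urllib.request.Request(endpoint_url, data=body, headers=headers, method="POST")


# Placeholder values; the real URL and key come from the deployed endpoint.
request = build_scoring_request(
    "https://example.invalid/score",
    "PLACEHOLDER_KEY",
    {"data": [[1.0, 2.0, 3.0]]},  # hypothetical payload shape
)

# urllib.request.urlopen(request) would send it; omitted because the URL is a placeholder.
print(request.get_method(), request.full_url)
```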

NEW QUESTION 219

You use Azure Machine Learning designer to create a training pipeline for a regression model. You need to prepare the pipeline for deployment as an endpoint that generates predictions asynchronously for a dataset of input data values. What should you do?

A. Clone the training pipeline.

B. Create a batch inference pipeline from the training pipeline.

C. Create a real-time inference pipeline from the training pipeline.

D. Replace the dataset in the training pipeline with an Enter Data Manually module.

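Answer: B

Explanation:

To generate predictions asynchronously for a dataset of input values, create a batch inference pipeline from the training pipeline. A batch inference pipeline scores a whole dataset in one run rather than responding to individual requests.

Incorrect:

Not C: Converting the training pipeline into a real-time inference pipeline removes training modules and adds web service inputs and outputs to handle individual requests, which does not meet the requirement for asynchronous scoring of a dataset.

Not D: Use the Enter Data Manually module to create a small dataset by typing values.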

https://docs.microsoft.com/en-us/azure/machine-learning/tutorial-designer-automobile-price-deploy

https://docs.microsoft.com/en-us/azure/machine-learning/algorithm-module-reference/enter-data-manually

NEW QUESTION 220

You develop and train a machine learning model to predict fraudulent transactions for a hotel booking website. Traffic to the site varies considerably. The site experiences heavy traffic on Monday and Friday and much lower traffic on other days. Holidays are also high web traffic days. You need to deploy the model as an Azure Machine Learning real-time web service endpoint on compute that can dynamically scale up and down to support demand. Which deployment compute option should you use?

A. attached Azure Databricks cluster

B. Azure Container Instance (ACI)

C. Azure Kubernetes Service (AKS) inference cluster

D. Azure Machine Learning Compute Instance

E. attached virtual machine in a different region

Answer: C

Explanation:

An Azure Kubernetes Service (AKS) inference cluster is intended for high-scale production real-time deployments and supports autoscaling of the deployed service, so it can scale up and down with demand.

Incorrect:

Not B: Azure Container Instances (ACI) is intended for low-scale, CPU-based dev-test workloads.

Not D: A compute instance is a single-node development workstation and does not scale dynamically.

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-attach-compute-sdk

NEW QUESTION 221

You use the Azure Machine Learning SDK in a notebook to run an experiment using a script file in an experiment folder. The experiment fails. You need to troubleshoot the failed experiment. What are two possible ways to achieve this goal? (Each correct answer presents a complete solution. Choose two.)

A. Use the get_metrics() method of the run object to retrieve the experiment run logs.

B. Use the get_details_with_logs() method of the run object to display the experiment run logs.

C. View the log files for the experiment run in the experiment folder.

D. View the logs for the experiment run in Azure Machine Learning studio.

E. Use the get_output() method of the run object to retrieve the experiment run logs.

Answer: BD

Explanation:

Use get_details_with_logs() to fetch the run details and logs created by the run. You can monitor Azure Machine Learning runs and view their logs with the Azure Machine Learning studio.

Incorrect:

Not A: You can view the metrics of a trained model using run.get_metrics().

Not E: get_output() gets the output of the step as PipelineData.

https://docs.microsoft.com/en-us/python/api/azureml-pipeline-core/azureml.pipeline.core.steprun

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-monitor-view-training-logs

NEW QUESTION 222

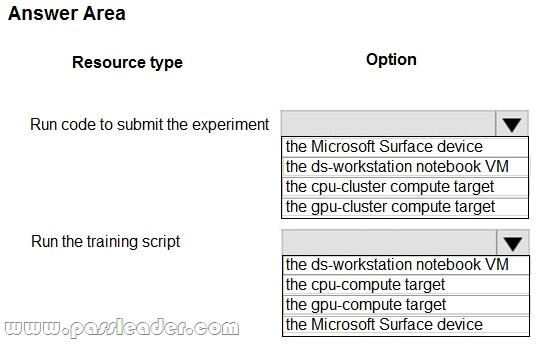

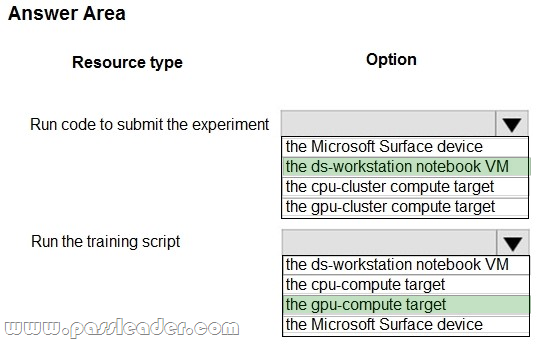

Hotspot

You are preparing to build a deep learning convolutional neural network model for image classification. You create a script to train the model using CUDA devices. You must submit an experiment that runs this script in the Azure Machine Learning workspace. The following compute resources are available:

– a Microsoft Surface device on which Microsoft Office has been installed. Corporate IT policies prevent the installation of additional software

– a Compute Instance named ds-workstation in the workspace with 2 CPUs and 8 GB of memory

– an Azure Machine Learning compute target named cpu-cluster with eight CPU-based nodes

– an Azure Machine Learning compute target named gpu-cluster with four CPU and GPU-based nodes

You need to specify the compute resources to be used for running the code to submit the experiment, and for running the script in order to minimize model training time. Which resources should the data scientist use? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

https://azure.microsoft.com/sv-se/blog/azure-machine-learning-service-now-supports-nvidia-s-rapids/

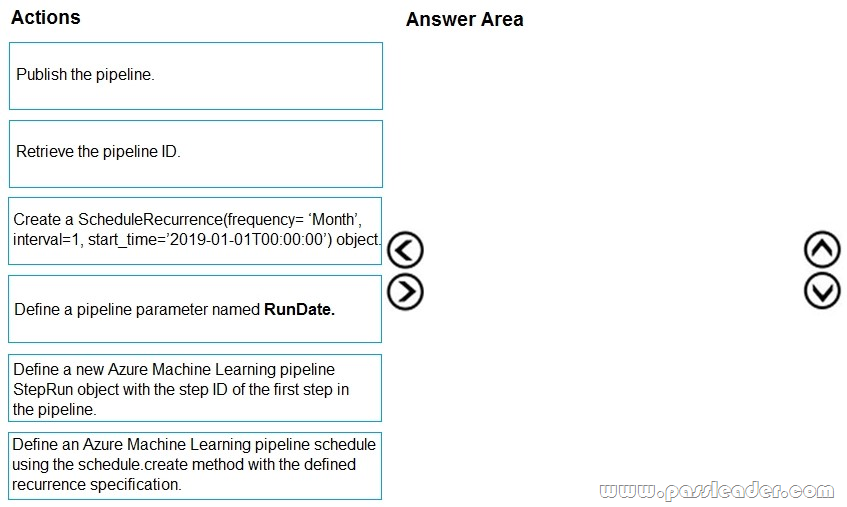

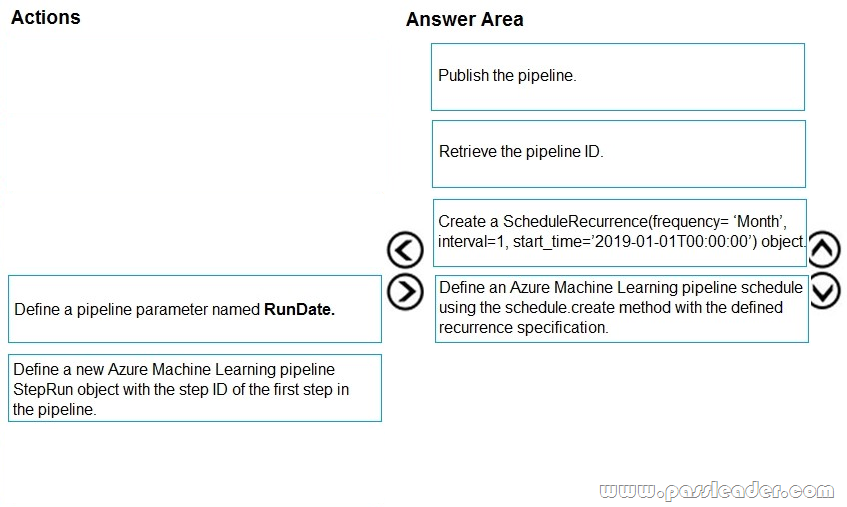

NEW QUESTION 223

Drag and Drop

You create a multi-class image classification deep learning model. The model must be retrained monthly with the new image data fetched from a public web portal. You create an Azure Machine Learning pipeline to fetch new data, standardize the size of images, and retrain the model. You need to use the Azure Machine Learning SDK to configure the schedule for the pipeline. Which four actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Answer:

Explanation:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-schedule-pipelines

NEW QUESTION 224

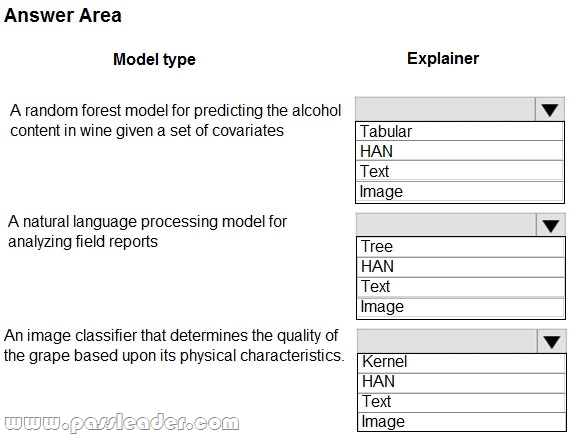

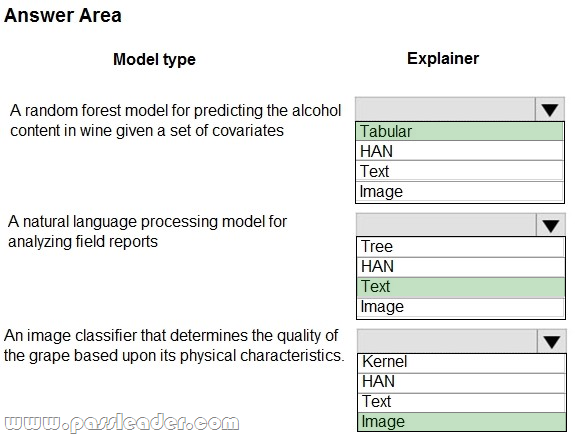

Hotspot

You are hired as a data scientist at a winery. The previous data scientist used Azure Machine Learning. You need to review the models and explain how each model makes decisions. Which explainer modules should you use? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

https://medium.com/microsoftazure/automated-and-interpretable-machine-learning-d0797574129

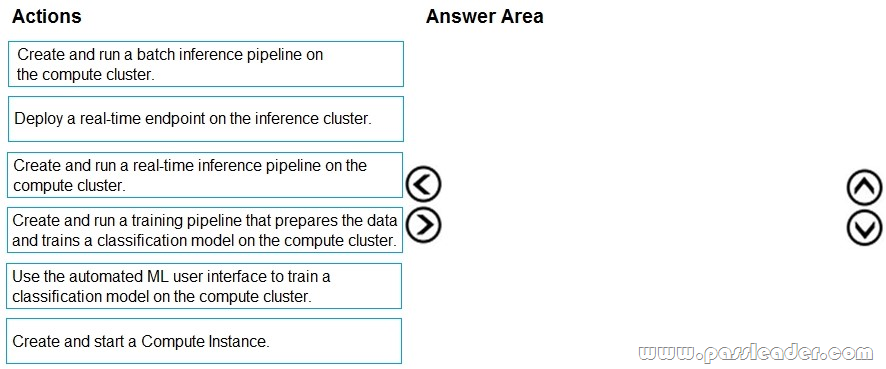

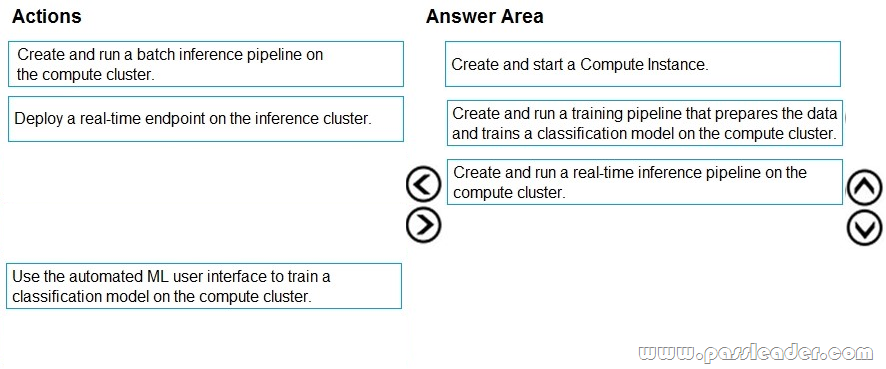

NEW QUESTION 225

Drag and Drop

You have an Azure Machine Learning workspace that contains a CPU-based compute cluster and an Azure Kubernetes Services (AKS) inference cluster. You create a tabular dataset containing data that you plan to use to create a classification model. You need to use the Azure Machine Learning designer to create a web service through which client applications can consume the classification model by submitting new data and getting an immediate prediction as a response. Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Answer:

Explanation:

https://docs.microsoft.com/en-us/learn/modules/create-classification-model-azure-machine-learning-designer/

NEW QUESTION 226

……
