Valid DP-500 dumps shared by PassLeader to help you pass the DP-500 exam. PassLeader now offers the newest DP-500 VCE and PDF dumps; the PassLeader DP-500 exam questions have been updated and the answers corrected. Get the newest PassLeader DP-500 dumps in VCE and PDF format here: https://www.passleader.com/dp-500.html (145 Q&As Dumps –> 193 Q&As Dumps)

BTW, DOWNLOAD part of PassLeader DP-500 dumps from Cloud Storage: https://drive.google.com/drive/folders/1EDghX2xzyoR_nUrbUD5C2Ow5sBLIe-gh

NEW QUESTION 111

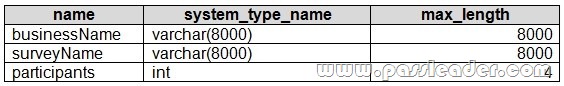

You are using an Azure Synapse Analytics serverless SQL pool to query a collection of Apache Parquet files by using automatic schema inference. The files contain more than 40 million rows of UTF-8-encoded business names, survey names, and participant counts. The database is configured to use the default collation. The queries use OPENROWSET and infer the schema shown in the following table:

You need to recommend changes to the queries to reduce I/O reads and tempdb usage.

Solution: You recommend defining an external table for the Parquet files and updating the query to use the table.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

The queries use OPENROWSET with automatic schema inference over the Parquet files, so a view would do the trick, not an external table.

NEW QUESTION 112

From Power Query Editor, you profile the data shown in the following exhibit:

The IoT GUID and IoT ID columns are unique to each row in the query. You need to analyze IoT events by the hour and day of the year. The solution must improve dataset performance.

Solution: You split the IoT DateTime column into a column named Date and a column named Time.

Does this meet the goal?

A. Yes

B. No

Answer: A

Explanation:

https://powerbi.microsoft.com/fr-be/blog/best-practice-rules-to-improve-your-models-performance/
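
The usual reasoning behind this answer is cardinality: a combined DateTime column has far more distinct values than separate Date and Time columns, and columns with fewer distinct values compress better. A minimal Python sketch of that effect, assuming hypothetical IoT events recorded once per minute for 30 days (the data and variable names are illustrative and do not come from the exhibit):

```python
from datetime import datetime, timedelta

# Hypothetical data: one IoT event per minute for 30 days.
start = datetime(2023, 1, 1)
events = [start + timedelta(minutes=i) for i in range(30 * 24 * 60)]

# Cardinality of the original combined DateTime column.
distinct_datetimes = len(set(events))

# Cardinality after splitting into separate Date and Time columns.
distinct_dates = len({e.date() for e in events})
distinct_times = len({e.time() for e in events})

print("DateTime:", distinct_datetimes)
print("Date:", distinct_dates, "Time:", distinct_times)
```

In this sketch the combined column holds one distinct value per event, while the split columns hold one value per day and one per minute of the day.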

NEW QUESTION 113

You have a Power BI tenant. You plan to register the tenant in an Azure Purview account. You need to ensure that you can scan the tenant by using Azure Purview. Which two actions should you perform? (Each correct answer presents part of the solution. Choose two.)

A. From the Microsoft 365 admin center, create a Microsoft 365 group.

B. From the Power BI Admin center, set Allow live connections to Enabled.

C. From the Power BI Admin center, set Allow service principals to use read-only Power BI admin APIs to Enabled.

D. From the Azure Active Directory admin center, create a security group.

E. From the Power BI Admin center, set Share content with external users to Enabled.

Answer: CD

Explanation:

https://learn.microsoft.com/en-us/azure/purview/register-scan-power-bi-tenant?tabs=Scenario1

NEW QUESTION 114

You have a deployment pipeline for a Power BI workspace. The workspace contains two datasets that use import storage mode. A database administrator reports a drastic increase in the number of queries sent from the Power BI service to an Azure SQL database since the creation of the deployment pipeline. An investigation into the issue identifies the following:

– One of the datasets is larger than 1 GB and has a fact table that contains more than 500 million rows.

– When publishing dataset changes to development, test, or production pipelines, a refresh is triggered against the entire dataset.

You need to recommend a solution to reduce the size of the queries sent to the database when the dataset changes are published to development, test, or production. What should you recommend?

A. Turn off auto refresh when publishing the dataset changes to the Power BI service.

B. In the dataset, change the fact table from an import table to a hybrid table.

C. Enable the large dataset storage format for workspace.

D. Create a dataset parameter to reduce the fact table row count in the development and test pipelines.

Answer: B

Explanation:

By changing the fact table from an import table to a hybrid table, you can leverage the benefits of DirectQuery storage mode for the fact table. With DirectQuery, the data remains in the Azure SQL database, and Power BI sends queries directly to the database when users interact with the report. This approach can significantly reduce the amount of data transferred between Power BI and the Azure SQL database.

NEW QUESTION 115

You have a Power BI Premium capacity. You need to increase the number of virtual cores associated to the capacity. Which role do you need?

A. Power BI workspace admin.

B. Capacity admin.

C. Power Platform admin.

D. Power BI admin.

Answer: D

Explanation:

Power BI admins and global administrators can change Power BI Premium capacity. Capacity admins who are not a Power BI admin or global administrator don’t have this option.

https://learn.microsoft.com/en-us/power-bi/enterprise/service-admin-premium-manage

NEW QUESTION 116

You are attempting to configure certification for a Power BI dataset and discover that the certification setting for the dataset is unavailable. What are two possible causes of the issue? (Each correct answer presents a complete solution. Choose two.)

A. The workspace is in shared capacity.

B. You have insufficient permissions.

C. Dataset certification is disabled for the Power BI tenant.

D. The sensitivity level for the dataset is set to Highly Confidential.

E. Row-level security (RLS) is missing from the dataset.

Answer: BC

Explanation:

https://learn.microsoft.com/en-us/power-bi/admin/service-admin-setup-certification

NEW QUESTION 117

Your company is migrating its current, custom-built reporting solution to Power BI. The Power BI tenant must support the following scenarios:

– 40 reports that will be embedded in external websites. The websites control their own security.

– The reports will be consumed by 50 users monthly.

– Forty-five users that require access to the workspaces and apps in the Power BI Admin portal. Ten of the users must publish and consume datasets that are larger than 1 GB.

– Ten developers that require Text Analytics transformations and paginated reports for datasets.

– An additional 15 users will consume the reports.

You need to recommend a licensing solution for the company. The solution must minimize costs. Which two Power BI license options should you include in the recommendation? (Each correct answer presents part of the solution. Choose two.)

A. 70 Premium per user

B. one Premium

C. 70 Pro

D. one Embedded

E. 35 Pro

F. 35 Premium per user

Answer: BE

Explanation:

Forty-five users need access to the workspaces and apps, so 35 Premium Per User licenses would not work: content in a Premium Per User workspace can be accessed only by users who also hold a Premium Per User license. Choosing Premium Per User would therefore mean purchasing it for all 70 users.

Also, because the reports are published to the web rather than embedded in an app, Embedded capacity is not considered. The other requirement is to minimize cost. A Premium capacity covers publishing the reports to the web, Text Analytics transformations, paginated reports, and datasets larger than 1 GB. For the users who actively develop content, 35 Pro licenses can be purchased (arguably 20 Pro licenses would be enough).

NEW QUESTION 118

You have two Power BI reports named Report1 and Report2. Report1 connects to a shared dataset named Dataset1. Report2 connects to a local dataset that has the same structure as Dataset1. Report2 contains several calculated tables and parameters. You need to prepare Report2 to use Dataset1. Which two actions should you perform? (Each correct answer presents part of the solution. Choose two.)

A. Remove the data source permissions.

B. Delete all the Power Query Editor objects.

C. Modify the source of each query.

D. Update all the parameter values.

E. Delete all the calculated tables.

Answer: CD

Explanation:

– Modify the source of each query. You need to modify the source of each query in Report2 to use Dataset1 as the data source, instead of the local dataset. This will ensure that Report2 uses the data from Dataset1, rather than the data from the local dataset.

– Calculated columns absolutely can work if the dataset has the same structure, meaning the same column names and data types. The calculation will work on the Dataset1 data just as it worked on the local dataset if all elements of the formula for the calculated column stay the same.

NEW QUESTION 119

You use an Apache Spark notebook in Azure Synapse Analytics to filter and transform data. You need to review statistics for a DataFrame that includes:

– The column name.

– The column type.

– The number of distinct values.

– Whether the column has missing values.

Which function should you use?

A. displayHTML()

B. display(df, summary=true)

C. %%configure

D. display(df)

E. %%lsmagic

Answer: B

Explanation:

https://learn.microsoft.com/en-us/azure/synapse-analytics/spark/apache-spark-data-visualization
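
In a Synapse notebook the summary view is produced by the built-in display call itself (in a Python cell the flag is spelled `True`). To make the four requested statistics concrete, here is a plain-Python stand-in that runs without Spark. The `profile` function and the sample rows are hypothetical and are not part of any Synapse or Spark API:

```python
def profile(rows):
    """Return name, type, distinct count, and missing flag for each column."""
    columns = list(rows[0].keys())
    result = []
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        result.append({
            "column": col,
            "type": type(present[0]).__name__ if present else "unknown",
            "distinct": len(set(present)),
            "has_missing": len(present) < len(values),
        })
    return result

# Hypothetical sample rows standing in for a DataFrame.
rows = [
    {"country": "BE", "amount": 10.0},
    {"country": "FR", "amount": None},
    {"country": "BE", "amount": 12.5},
]
report = profile(rows)
for line in report:
    print(line)
```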

NEW QUESTION 120

You have a group of data scientists who must create machine learning models and run periodic experiments on a large dataset. You need to recommend an Azure Synapse Analytics pool for the data scientists. The solution must minimize costs. Which type of pool should you recommend?

A. a Data Explorer pool

B. an Apache Spark pool

C. a dedicated SQL pool

D. a serverless SQL pool

Answer: B

Explanation:

Machine learning models can be trained with help from various algorithms and libraries. Spark MLlib offers scalable machine learning algorithms that can help solve most classical machine learning problems.

https://learn.microsoft.com/en-us/azure/synapse-analytics/machine-learning/what-is-machine-learning

NEW QUESTION 121

You have a deployment pipeline for a Power BI workspace. The workspace contains two datasets that use import storage mode. A database administrator reports a drastic increase in the number of queries sent from the Power BI service to an Azure SQL database since the creation of the deployment pipeline. An investigation into the issue identifies the following:

– One of the datasets is larger than 1 GB and has a fact table that contains more than 500 million rows.

– When publishing dataset changes to development, test, or production pipelines, a refresh is triggered against the entire dataset.

You need to recommend a solution to reduce the size of the queries sent to the database when the dataset changes are published to development, test, or production. What should you recommend?

A. From Capacity settings in the Power BI Admin portal, reduce the Max Intermediate Row Set Count setting.

B. Configure the dataset to use a composite model that has a DirectQuery connection to the fact table.

C. Enable the large dataset storage format for workspace.

D. From Capacity settings in the Power BI Admin portal, increase the Max Intermediate Row Set Count setting.

Answer: B

Explanation:

https://learn.microsoft.com/en-us/power-bi/guidance/import-modeling-data-reduction#switch-to-mixed-mode

NEW QUESTION 122

You are using a Python notebook in an Apache Spark pool in Azure Synapse Analytics. You need to present the data distribution statistics from a DataFrame in a tabular view. Which method should you invoke on the DataFrame?

A. sample

B. describe

C. freqItems

D. explain

Answer: B

Explanation:

Either summary or describe works. summary shows count, mean, stddev, min, max, and the quartiles; describe shows count, mean, stddev, min, and max. The difference is that summary is newer and adds the percentiles at 25%, 50%, and 75%.

https://spark.apache.org/docs/3.1.2/api/python/reference/api/pyspark.sql.DataFrame.summary.html

https://spark.apache.org/docs/3.1.2/api/python/reference/api/pyspark.sql.DataFrame.describe.html#pyspark.sql.DataFrame.describe
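
The difference between the two methods can be sketched in plain Python, so that it runs without a Spark pool. The function names and sample values below are hypothetical stand-ins, not the PySpark API. Spark computes its percentiles approximately, so the real output may differ from what `statistics.quantiles` returns here:

```python
import statistics

def describe_like(values):
    """Statistics in the style of DataFrame.describe()."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "stddev": statistics.stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }

def summary_like(values):
    """describe-style statistics plus the 25%, 50%, and 75% percentiles."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    base = describe_like(values)
    return {
        "count": base["count"], "mean": base["mean"], "stddev": base["stddev"],
        "min": base["min"], "25%": q1, "50%": q2, "75%": q3, "max": base["max"],
    }

# Hypothetical participant counts.
participants = [10, 20, 30, 40, 50]
print(describe_like(participants))
print(summary_like(participants))
```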

NEW QUESTION 123

You have a deployment pipeline for a Power BI workspace. The workspace contains two datasets that use import storage mode. A database administrator reports a drastic increase in the number of queries sent from the Power BI service to an Azure SQL database since the creation of the deployment pipeline. An investigation into the issue identifies the following:

– One of the datasets is larger than 1 GB and has a fact table that contains more than 500 million rows.

– When publishing dataset changes to development, test, or production pipelines, a refresh is triggered against the entire dataset.

You need to recommend a solution to reduce the size of the queries sent to the database when the dataset changes are published to development, test, or production. What should you recommend?

A. Request the authors of the deployment pipeline datasets to reduce the number of datasets republished during development.

B. In the dataset, delete the fact table.

C. Configure the dataset to use a composite model that has a DirectQuery connection to the fact table.

D. From Capacity settings in the Power BI Admin portal, reduce the Max Intermediate Row Set Count setting.

Answer: C

NEW QUESTION 124

You are using a Python notebook in an Apache Spark pool in Azure Synapse Analytics. You need to present the data distribution statistics from a DataFrame in a tabular view. Which method should you invoke on the DataFrame?

A. freqItems

B. corr

C. summary

D. rollup

Answer: C

Explanation:

Either summary or describe works. summary shows count, mean, stddev, min, max, and the quartiles; describe shows count, mean, stddev, min, and max. The difference is that summary is newer and adds the percentiles at 25%, 50%, and 75%.

https://spark.apache.org/docs/3.1.2/api/python/reference/api/pyspark.sql.DataFrame.summary.html

https://spark.apache.org/docs/3.1.2/api/python/reference/api/pyspark.sql.DataFrame.describe.html#pyspark.sql.DataFrame.describe

NEW QUESTION 125

You have a deployment pipeline for a Power BI workspace. The workspace contains two datasets that use Import storage mode. A database administrator reports a drastic increase in the number of queries sent from the Power BI service to an Azure SQL database since the creation of the deployment pipeline. An investigation into the issue identifies the following:

– One of the datasets is larger than 1 GB and has a fact table that contains more than 500 million rows.

– When publishing dataset changes to development, test, or production pipelines, a refresh is triggered against the entire dataset.

You need to recommend a solution to reduce the size of the queries sent to the database when the dataset changes are published to development, test, or production. What should you recommend?

A. Enable the large dataset storage format for workspace.

B. Create a dataset parameter to reduce the fact table row count in the development and test pipelines.

C. Request the authors of the deployment pipeline datasets to reduce the number of datasets republished during development.

D. Turn off auto refresh when publishing the dataset changes to the Power BI service.

Answer: B

NEW QUESTION 126

You have a Power BI report that contains a bar chart. The bar chart displays sales by country. You need to ensure that a summary of the data shown on the bar chart is accessible by using a screen reader. What should you configure on the bar chart?

A. conditional formatting

B. the layer order

C. alt text

D. the tab order

Answer: C

NEW QUESTION 127

You have an Azure Synapse Analytics dedicated SQL pool and a Microsoft Purview account. The Microsoft Purview account has been granted sufficient permissions to the dedicated SQL pool. You need to ensure that the SQL pool is scanned by Microsoft Purview. What should you do first?

A. Create a data policy.

B. Search the data catalog.

C. Register a data source.

D. Create a data share connection.

Answer: C

Explanation:

After data sources are registered in your Microsoft Purview account, the next step is to scan the data sources. The scanning process establishes a connection to the data source and captures technical metadata like names, file size, columns, and so on.

https://learn.microsoft.com/en-us/purview/concept-scans-and-ingestion

NEW QUESTION 128

You have a Power BI tenant. You need to ensure that all reports use a consistent set of colors and fonts. The solution must ensure that the colors and fonts can be applied to existing reports. What should you create?

A. a report theme file

B. a PBIX file

C. a Power BI template

Answer: A

NEW QUESTION 129

You need to use Power BI to ingest data from an API. The API requires that an API key be passed in the headers of the request. Which type of authentication should you use?

A. Organizational Account

B. Basic

C. Web API

D. Anonymous

Answer: C

Explanation:

Web API authentication is a type of authentication that uses an API key to authenticate requests to an API. The API key is typically passed in the headers of the request. Organizational account authentication is used to authenticate users to Power BI itself. Basic authentication is a simple type of authentication that uses a username and password to authenticate requests. Anonymous authentication does not require any authentication. To ingest data from an API that requires an API key to be passed in the headers of the request, you should use Web API authentication.
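
At the HTTP level, passing an API key in the headers looks like the following minimal Python sketch. It only builds the request and does not send it. The endpoint URL, header name, and key are hypothetical; a real API documents its own header name. This illustrates the request shape and is not the Power BI connector itself:

```python
import urllib.request

# Hypothetical endpoint, header name, and key; a real API documents its own.
API_URL = "https://api.example.com/v1/readings"
API_KEY_HEADER = "X-API-Key"
API_KEY = "replace-with-your-key"

def build_request(url, key):
    """Build (but do not send) a GET request that carries the key in a header."""
    return urllib.request.Request(url, headers={API_KEY_HEADER: key}, method="GET")

request = build_request(API_URL, API_KEY)
print(request.get_method(), request.full_url)
print(request.header_items())
```

Note that `urllib` normalizes the capitalization of header names when storing them; HTTP header names are case-insensitive, so the server sees the same header.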

NEW QUESTION 130

You have a PostgreSQL database named db1. You have a group of data analysts that will create Power BI datasets. Each analyst will use data from a different schema in db1. You need to simplify the process for the analysts to initially connect to db1 when using Power BI Desktop. Which type of file should you use?

A. PBIT

B. PBIX

C. PBIDS

Answer: C

Explanation:

To simplify the process for the data analysts to initially connect to the PostgreSQL database (db1) when using Power BI Desktop, you should use a PBIDS (Power BI Data Source) file.

NEW QUESTION 131

HotSpot

You have a Power BI tenant that uses a primary domain named fabrikam.com and contains a Power BI Premium workspace named Workspace1. Workspace1 contains a dataset named DS1. DS1 is an imported model that has one data source. You have a guest user named [email protected] that is assigned the Contributor role for Workspace1. You need to ensure that User1 can connect to the XMLA endpoint of DS1. How should you complete the URL of the XMLA connection? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

https://learn.microsoft.com/en-us/power-bi/enterprise/service-premium-connect-tools
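
As far as the linked article describes it, a workspace connection address has the shape `powerbi://api.powerbi.com/v1.0/<tenant>/<workspace>`. Users in the home tenant can use the `myorg` alias, while B2B guest users name the tenant's domain explicitly. A minimal sketch under that assumption; the helper function is hypothetical and only composes the string:

```python
def xmla_workspace_url(workspace, tenant="myorg"):
    """Compose a workspace connection address in the shape described above."""
    return f"powerbi://api.powerbi.com/v1.0/{tenant}/{workspace}"

# Guest (B2B) user: name the tenant's domain explicitly instead of myorg.
guest_url = xmla_workspace_url("Workspace1", tenant="fabrikam.com")

# Member of the home tenant: the myorg alias can stand in for the tenant name.
member_url = xmla_workspace_url("Workspace1")

print(guest_url)
print(member_url)
```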

NEW QUESTION 132

HotSpot

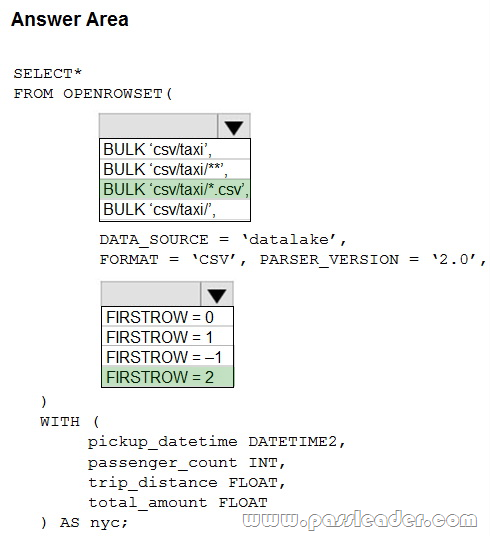

You have an Azure Synapse Analytics serverless SQL pool and an Azure Data Lake Storage Gen2 account. You need to query all the files in the ‘csv/taxi/’ folder and all its subfolders. All the files are in CSV format and have a header row. How should you complete the query? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

– BULK ‘csv/taxi/**’: although ‘csv/taxi/*.csv’ also works (tested), the question does not specify the file extension. The files could be .txt files that are still in CSV format.

– FIRSTROW=2: you need to skip the headers (tested it). FIRSTROW=1 is the default value and will return the data including the headers.

https://learn.microsoft.com/en-us/azure/synapse-analytics/sql/query-folders-multiple-csv-files#traverse-folders-recursively

NEW QUESTION 133

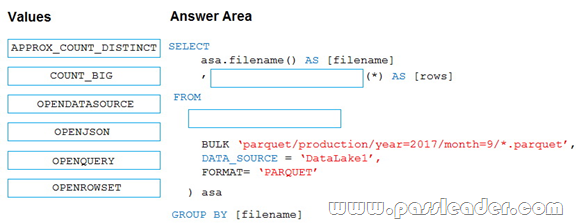

Drag and Drop

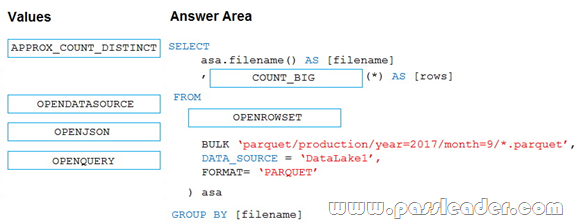

You have an Azure Synapse Analytics serverless SQL pool. You need to return a list of files and the number of rows in each file. How should you complete the Transact-SQL statement? (To answer, drag the appropriate values to the targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.)

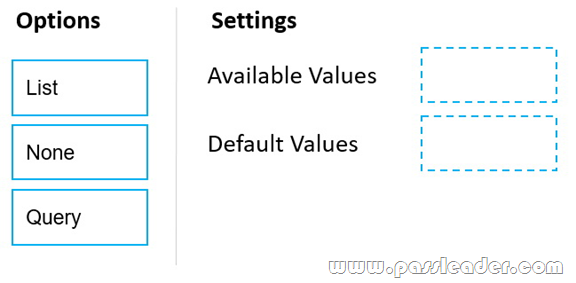

NEW QUESTION 134

Drag and Drop

You have a dataset that is populated from a list of business categories in a source database. The list of categories changes over time. You use Power BI Report Builder to create a paginated report. The report has a report parameter named BusinessCategory. You need to modify BusinessCategory to ensure that when the report opens, a drop-down list displays all the business categories, and all the business categories are selected. How should you configure BusinessCategory? (To answer, drag the appropriate options to the correct settings. Each option may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.)

Answer:

Explanation:

The requirements state that the available categories change over time and that all currently available categories must be selected by default. A query is therefore needed for both the available values and the default values.

https://learn.microsoft.com/en-us/sql/reporting-services/tutorial-add-a-parameter-to-your-report-report-builder?view=sql-server-ver16#AvailableValues

Case Study – Litware, Inc.

NEW QUESTION 135

You need to configure the Sales Analytics workspace to meet the ad hoc reporting requirements. What should you do?

A. Grant the sales managers the Build permission for the existing Power BI datasets.

B. Grant the sales managers admin access to the existing Power BI workspace.

C. Create a deployment pipeline and grant the sales managers access to the pipeline.

D. Create a PBIT file and distribute the file to the sales managers.

Answer: A

Explanation:

A template file requires data loading and leads to a separate Power BI dataset and separate reports. To minimize the effort, give the users the Build permission for the existing dataset.

NEW QUESTION 136

You need to recommend a solution to ensure that sensitivity labels are applied. The solution must minimize administrative effort. Which three actions should you include in the recommendation? (Each correct answer presents part of the solution. Choose three.)

A. From the Power BI Admin portal, set Allow users to apply sensitivity labels for Power BI content to Enabled.

B. From the Power BI Admin portal, set Apply sensitivity labels from data sources to their data in Power BI to Enabled.

C. In SQLDW, apply sensitivity labels to the columns in the Customer and CustomersWithProductScore tables.

D. In the Power BI datasets, apply sensitivity labels to the columns in the Customer and CustomersWithProductScore tables.

E. From the Power BI Admin portal, set Make certified content discoverable to Enabled.

Answer: ABC

Explanation:

Option D is wrong – you can’t apply sensitivity labels to columns in a Power BI dataset.

Option E is wrong – certification has nothing to do with sensitivity.

NEW QUESTION 137

HotSpot

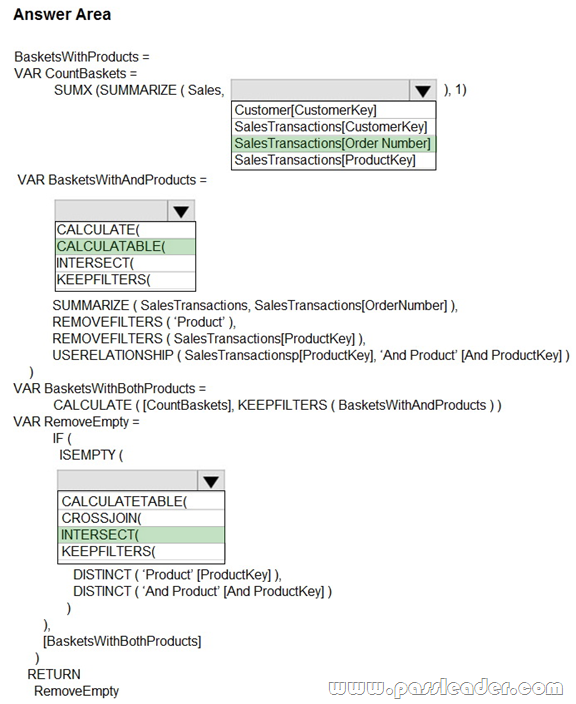

You need to create a measure to count orders for the market basket analysis. How should you complete the DAX expression? (To answer, select the appropriate options in the answer area.)

Case Study – Contoso, Ltd.

NEW QUESTION 138

You need to identify the root cause of the data refresh issue. What should you use?

A. The Usage Metrics Report in powerbi.com.

B. Query Diagnostics in Power Query Editor.

C. Performance analyzer in Power BI Desktop.

Answer: B

Explanation:

Query Diagnostics helps in understanding what Power Query is doing at authoring and at refresh time in Power BI Desktop.

https://learn.microsoft.com/en-us/power-query/query-diagnostics

NEW QUESTION 139

Which two possible tools can you use to identify what causes the report to render slowly? (Each correct answer presents a complete solution. Choose two.)

A. Synapse Studio

B. DAX Studio

C. Azure Data Studio

D. Performance analyzer in Power BI Desktop

Answer: BD

Explanation:

Performance analyzer is built into Power BI Desktop and is used first; DAX Studio is an external tool that is used afterward to dig deeper.

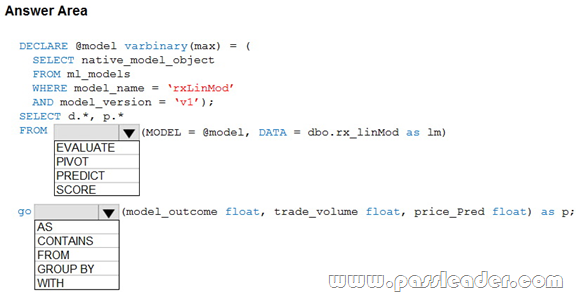

NEW QUESTION 140

HotSpot

You need to build a Transact-SQL query to implement the planned changes for the internal users. How should you complete the Transact-SQL query? (To answer, select the appropriate options in the answer area.)

NEW QUESTION 141

……
