Valid DP-200 Dumps shared by PassLeader for Helping Passing DP-200 Exam! PassLeader now offer the newest DP-200 VCE dumps and DP-200 PDF dumps, the PassLeader DP-200 exam questions have been updated and ANSWERS have been corrected, get the newest PassLeader DP-200 dumps with VCE and PDF here: https://www.passleader.com/dp-200.html (272 Q&As Dumps)

BTW, DOWNLOAD part of PassLeader DP-200 dumps from Cloud Storage: https://drive.google.com/open?id=1CTHwJ44u5lT4tsb2qo8oThaQ5c_vwun1

NEW QUESTION 260

You have an Azure subscription that contains an Azure Data Factory version 2 (V2) data factory named df1. df1 contains a linked service. You have an Azure Key Vault named vault1 that contains an encryption key named key1. You need to encrypt df1 by using key1. What should you do first?

A. Disable purge protection on vault1.

B. Create a self-hosted integration runtime.

C. Disable soft delete on vault1.

D. Remove the linked service from df1.

Answer: D

Explanation:

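A customer-managed key can only be configured on an empty data factory, meaning one that contains no resources such as linked services, pipelines, or data flows. Because df1 already contains a linked service, you must remove it before you can encrypt df1 with key1. (Linked services are much like connection strings: they define the connection information that Data Factory needs to connect to external resources.)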

Incorrect:

Not A and C: Customer-managed keys require two properties to be enabled on the key vault, Soft Delete and Purge Protection, so disabling either of them prevents the configuration.

Not B: A self-hosted integration runtime copies data between an on-premises store and cloud storage; it plays no part in encrypting the data factory.

https://docs.microsoft.com/en-us/azure/data-factory/enable-customer-managed-key

https://docs.microsoft.com/en-us/azure/data-factory/concepts-linked-services

https://docs.microsoft.com/en-us/azure/data-factory/create-self-hosted-integration-runtime

NEW QUESTION 261

You have an Azure Blob storage account. Developers report that an HTTP 403 (Forbidden) error is generated when a client application attempts to access the storage account. You cannot see the error messages in Azure Monitor. What is a possible cause of the error?

A. The client application is using an expired shared access signature (SAS) when it sends a storage request.

B. The client application deleted, and then immediately recreated a blob container that has the same name.

C. The client application attempted to use a shared access signature (SAS) that did not have the necessary permissions.

D. The client application attempted to use a blob that does not exist in the storage service.

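Answer: A

Explanation:

A likely cause of HTTP 403 (Forbidden) errors is a client that sends storage requests with an expired SAS. Microsoft's storage troubleshooting guidance notes that when an expired SAS is the cause, no corresponding entries appear in the server-side storage logs, which matches the symptom that the errors cannot be seen in Azure Monitor.

https://docs.microsoft.com/en-us/rest/api/storageservices/sas-error-codes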
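When the service records nothing, the SAS has to be checked on the client side. The sketch below, assuming the SAS token is available as a query string, inspects two standard SAS fields, `se` (signed expiry) and `sp` (signed permissions), and reports whether the token has expired or lacks the required permission letter. The function name `diagnose_sas` is hypothetical, and the check cannot validate the signature (`sig`), which only the storage service can do.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs


def diagnose_sas(sas_token: str, required_permission: str, now: datetime) -> str:
    """Return why a SAS would likely be rejected with 403, or "ok".

    Only the signed expiry (se) and signed permissions (sp) fields are checked;
    the signature (sig) can be validated only by the storage service.
    `now` must be a timezone-aware datetime.
    """
    params = parse_qs(sas_token.lstrip("?"))
    expiry_raw = params.get("se", [None])[0]
    permissions = params.get("sp", [""])[0]

    if expiry_raw is None:
        return "missing expiry"
    # se is an ISO 8601 UTC value, e.g. 2021-01-01T00:00:00Z or 2021-01-01.
    expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
    if expiry.tzinfo is None:
        expiry = expiry.replace(tzinfo=timezone.utc)
    if now >= expiry:
        return "expired"
    if required_permission not in permissions:
        return "missing permission"
    return "ok"
```

For example, `diagnose_sas(token, "r", datetime.now(timezone.utc))` returns `"expired"` for a read token whose `se` value lies in the past.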

NEW QUESTION 262

You are monitoring an Azure Stream Analytics job by using metrics in Azure. You discover that during the last 12 hours, the average watermark delay is consistently greater than the configured late arrival tolerance. What is a possible cause of this behavior?

A. The job lacks the resources to process the volume of incoming data.

B. The late arrival policy causes events to be dropped.

C. Events whose application timestamp is earlier than their arrival time by more than five minutes arrive as inputs.

D. There are errors in the input data.

Answer: A

Explanation:

https://azure.microsoft.com/en-us/blog/new-metric-in-azure-stream-analytics-tracks-latency-of-your-streaming-pipeline/

NEW QUESTION 263

You have an Azure Blob storage account. The storage account has an alert that is configured to indicate when the Availability metric falls below 100 percent. You receive an alert for the Availability metric. The logs for the storage account show that requests are failing because of a ServerTimeoutError error. What does ServerTimeoutError indicate?

A. Read and write storage requests exceeded capacity.

B. A transient server timeout occurred while the service was moved to a different partition to load balance requests.

C. A client application attempted to perform an operation and did not have valid credentials.

D. There was excessive network latency between a client application and the storage account.

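Answer: B

Explanation:

ServerTimeoutError is a timeout on the service side, so it points at the storage service rather than at the network path to the client; excessive client or network latency surfaces as client-side timeouts instead. Server timeouts of this kind are typically transient, for example while the service moves a partition to load balance requests, and clients should retry the affected operations.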
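Storage clients are generally expected to retry transient server-side failures such as timeouts. A minimal sketch of retry with exponential backoff and jitter follows; `TransientServerError` and `retry_with_backoff` are hypothetical names, not part of an Azure SDK (the Azure SDKs provide their own configurable retry policies). The `sleep` parameter is injectable so the delays can be observed in tests.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientServerError(Exception):
    """Hypothetical stand-in for a retryable failure such as a server timeout."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying TransientServerError with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except TransientServerError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            # Delay doubles each attempt (0.5 s, 1 s, 2 s, ...) plus random jitter
            # so that many clients do not retry in lockstep.
            sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
    raise AssertionError("unreachable")
```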

NEW QUESTION 264

You have a data warehouse in Azure Synapse Analytics. You need to ensure that the data in the data warehouse is encrypted at rest. What should you enable?

A. Transparent Data Encryption (TDE).

B. Secure transfer required.

C. Always Encrypted for all columns.

D. Advanced Data Security for this database.

Answer: A

Explanation:

Azure SQL Database supports encryption at rest for both Microsoft-managed service-side and client-side encryption scenarios. Service-side encryption is provided through Transparent Data Encryption (TDE), which also protects dedicated SQL pools in Azure Synapse Analytics. Client-side encryption is provided through the Always Encrypted feature.

https://docs.microsoft.com/en-us/azure/security/fundamentals/encryption-atrest

NEW QUESTION 265

You have an Azure Data Factory that contains 10 pipelines. You need to label each pipeline with its main purpose of either ingest, transform, or load. The labels must be available for grouping and filtering when using the monitoring experience in Data Factory. What should you add to each pipeline?

A. a resource tag

B. a user property

C. an annotation

D. a run group ID

E. a correlation ID

Answer: C

Explanation:

Annotations are additional, informative tags that you can add to specific factory resources: pipelines, datasets, linked services, and triggers. By adding annotations, you can easily filter and search for specific factory resources.

https://www.cathrinewilhelmsen.net/annotations-user-properties-azure-data-factory/
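Annotations are stored in a pipeline's JSON definition as a list of strings under `properties.annotations`. Assuming pipeline definitions exported as JSON and loaded into Python dicts, the sketch below groups pipeline names by annotation, similar to the grouping the monitoring experience offers. The function name and the pipeline names in the example are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def group_by_annotation(pipelines: List[dict]) -> Dict[str, List[str]]:
    """Map each annotation (e.g. ingest, transform, load) to pipeline names."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for pipeline in pipelines:
        # Pipelines without annotations simply contribute nothing.
        for label in pipeline.get("properties", {}).get("annotations", []):
            groups[label].append(pipeline["name"])
    return dict(groups)


pipelines = [
    {"name": "CopySales", "properties": {"activities": [], "annotations": ["ingest"]}},
    {"name": "CleanSales", "properties": {"activities": [], "annotations": ["transform"]}},
    {"name": "CopyOrders", "properties": {"activities": [], "annotations": ["ingest"]}},
]
```

Calling `group_by_annotation(pipelines)` groups `CopySales` and `CopyOrders` under `ingest` and `CleanSales` under `transform`.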

NEW QUESTION 266

You have an activity in an Azure Data Factory pipeline. The activity calls a stored procedure in a data warehouse in Azure Synapse Analytics and runs daily. You need to verify the duration of the activity when it ran last. What should you use?

A. The sys.dm_pdw_wait_stats data management view in Azure Synapse Analytics.

B. An Azure Resource Manager template.

C. Activity runs in Azure Monitor.

D. Activity log in Azure Synapse Analytics.

Answer: C

Explanation:

Monitor activity runs: to get a detailed view of the individual activity runs of a specific pipeline run, including the duration of each activity, click the pipeline name.

Incorrect:

Not A: sys.dm_pdw_wait_stats holds information about the SQL Server OS state of the instances running on the different nodes; it does not record the duration of Data Factory activities.

https://docs.microsoft.com/en-us/azure/data-factory/monitor-visually

NEW QUESTION 267

You are monitoring an Azure Stream Analytics job. The Backlogged Input Events count has been 20 for the last hour. You need to reduce the Backlogged Input Events count. What should you do?

A. Add an Azure Storage account to the job.

B. Increase the streaming units for the job.

C. Stop the job.

D. Drop late arriving events from the job.

Answer: B

Explanation:

A backlogged input event count that stays above zero or keeps increasing is a general symptom of a job hitting its system resource limits, either because of output sink throttling or because of high CPU. Increasing the streaming units gives the job more resources with which to work through the backlog.

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-scale-jobs

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-monitoring

NEW QUESTION 268

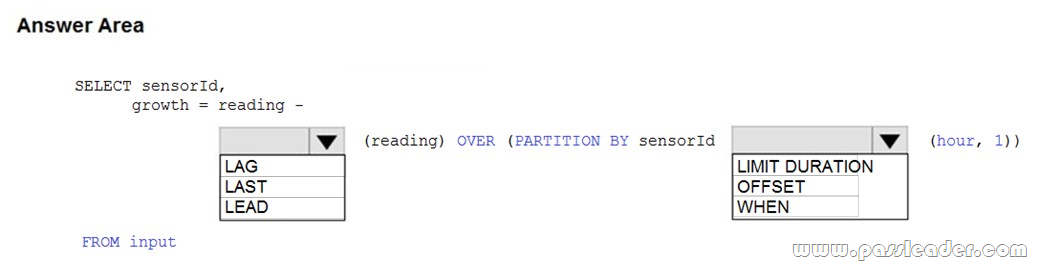

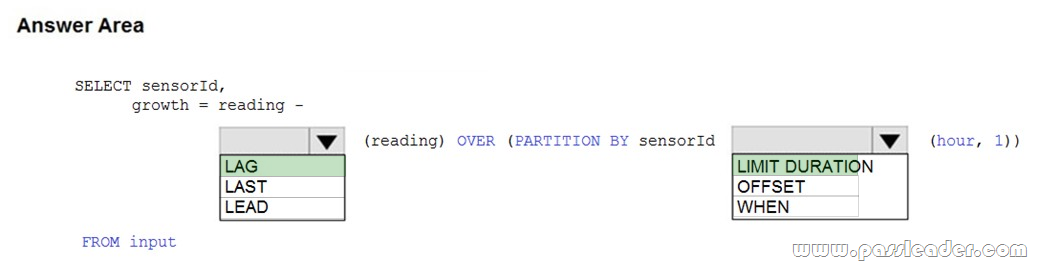

HotSpot

You are building an Azure Stream Analytics query that will receive input data from Azure IoT Hub and write the results to Azure Blob storage. You need to calculate the difference in readings per sensor per hour. How should you complete the query? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

https://docs.microsoft.com/en-us/stream-analytics-query/lag-azure-stream-analytics

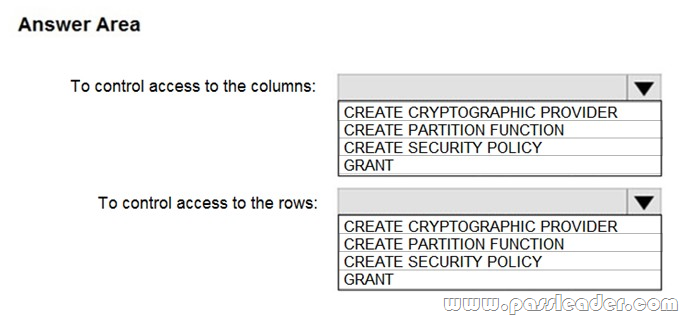

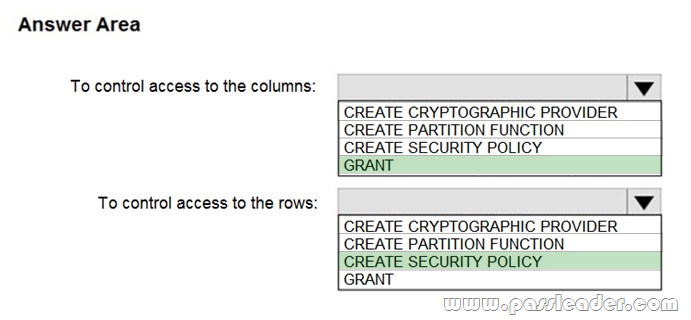
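The linked LAG reference describes returning the previous event's value within a partition, bounded by LIMIT DURATION. As a hedged illustration of those semantics, not of the exam's answer area, the Python sketch below computes, for each reading, its difference from the same sensor's previous reading within one hour, returning None when there is none, much as an expression like `reading - LAG(reading) OVER (PARTITION BY sensorId LIMIT DURATION(hour, 1))` would. The tuple layout and names are assumptions, events are assumed to arrive in event-time order, and a gap of exactly one hour is treated as inside the window, which may differ from the service's boundary handling.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple

Event = Tuple[str, datetime, float]  # (sensor_id, event_time, reading)


def diff_from_previous(
    events: List[Event], window: timedelta = timedelta(hours=1)
) -> List[Optional[float]]:
    """Per event: reading minus the same sensor's previous reading in `window`."""
    last_seen: Dict[str, Tuple[datetime, float]] = {}
    diffs: List[Optional[float]] = []
    for sensor_id, ts, reading in events:
        previous = last_seen.get(sensor_id)  # acts like PARTITION BY sensor
        if previous is not None and ts - previous[0] <= window:
            diffs.append(reading - previous[1])
        else:
            diffs.append(None)  # no earlier event in the window, like LAG -> NULL
        last_seen[sensor_id] = (ts, reading)
    return diffs
```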

NEW QUESTION 269

HotSpot

You have an Azure subscription that contains the following resources:

– An Azure Active Directory (Azure AD) tenant that contains a security group named Group1.

– An Azure Synapse Analytics SQL pool named Pool1.

You need to control the access of Group1 to specific columns and rows in a table in Pool1. Which Transact-SQL commands should you use? (To answer, select the appropriate options in the answer area.)

Answer:

Explanation:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/column-level-security
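Column-level security is applied with a GRANT statement that lists the permitted columns, and row-level security with a security policy that attaches a filter predicate function to the table. Assuming those two mechanisms, the Python sketch below only builds the T-SQL strings. The helper, table, column, function, and policy names are hypothetical; the predicate function (an inline table-valued function) must already exist; and identifiers are not escaped, so the helper is unsuitable for untrusted input.

```python
from typing import List


def build_cls_rls_statements(
    principal: str,
    table: str,
    columns: List[str],
    predicate_function: str,
    predicate_column: str,
    policy_name: str,
) -> List[str]:
    """Build T-SQL for a column-level GRANT plus a row-level filter policy."""
    column_list = ", ".join(columns)
    return [
        # Column-level security: SELECT is granted only on the listed columns.
        f"GRANT SELECT ON {table} ({column_list}) TO [{principal}];",
        # Row-level security: the predicate function decides which rows are visible.
        f"CREATE SECURITY POLICY {policy_name} "
        f"ADD FILTER PREDICATE {predicate_function}({predicate_column}) ON {table} "
        f"WITH (STATE = ON);",
    ]
```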

NEW QUESTION 270

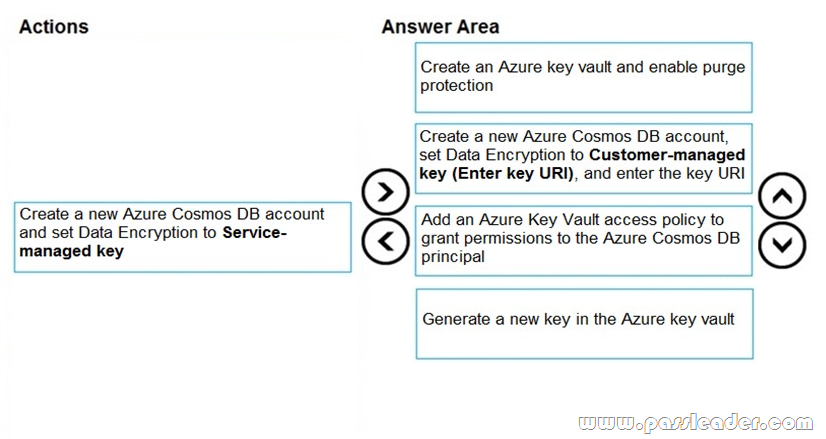

Drag and Drop

You need to create an Azure Cosmos DB account that will use encryption keys managed by your organization. Which four actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Answer:

Explanation:

Step 1: Create an Azure key vault and enable purge protection. Using customer-managed keys with Azure Cosmos DB requires you to set two properties on the Azure Key Vault instance that you plan to use to host your encryption keys: Soft Delete and Purge Protection.

Step 2: Add an Azure Key Vault access policy to grant permissions to the Azure Cosmos DB principal.

Step 3: Generate a new key in the Azure key vault. The key's URI is needed in the next step.

Step 4: Create a new Azure Cosmos DB account, set Data Encryption to Customer-managed Key (Enter key URI), and enter the key URI. Data stored in your Azure Cosmos account is automatically and seamlessly encrypted with keys managed by Microsoft (service-managed keys). Optionally, you can add a second layer of encryption with keys you manage (customer-managed keys).

https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-setup-cmk

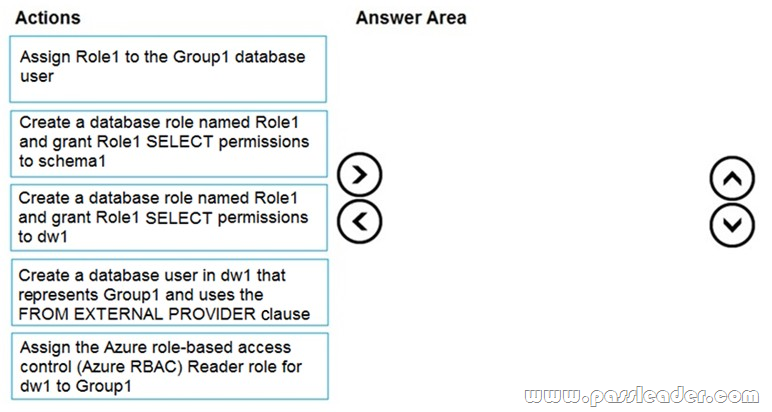

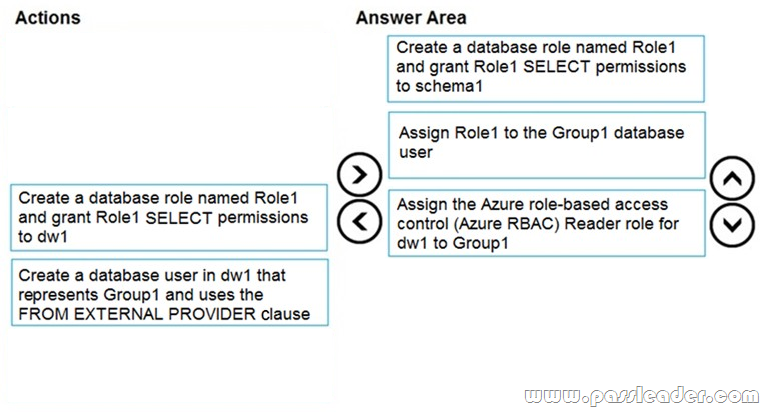

NEW QUESTION 271

Drag and Drop

You have an Azure Active Directory (Azure AD) tenant that contains a security group named Group1. You have an Azure Synapse Analytics dedicated SQL pool named dw1 that contains a schema named schema1. You need to grant Group1 read-only permissions to all the tables and views in schema1. The solution must use the principle of least privilege. Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Answer:

Explanation:

Step 1: Create a database user in dw1 that represents Group1 by using the FROM EXTERNAL PROVIDER clause.

Step 2: Create a database role named Role1 and grant Role1 SELECT permissions on schema1. Placing database users in a database role and assigning permissions to the role limits the grant to read access on schema1.

Step 3: Assign Role1 to the Group1 database user.

The Azure role-based access control (Azure RBAC) Reader role for dw1 is not needed: it grants read access to the Azure resource (the control plane), not to the data in the tables, so assigning it would exceed the principle of least privilege.

https://docs.microsoft.com/en-us/azure/data-share/how-to-share-from-sql
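A minimal sketch, assuming the user, role, and grant approach for read-only access to a schema: the hypothetical helper below builds the T-SQL that an Azure AD administrator could run in dw1, using the names from the question. It uses `sp_addrolemember` to add the group's database user to the role, and it does not escape identifiers, so it is for illustration only.

```python
from typing import List


def build_schema_read_grants(group: str, role: str, schema: str) -> List[str]:
    """Build T-SQL giving an Azure AD group read-only access to one schema."""
    return [
        # Database user mapped to the Azure AD group.
        f"CREATE USER [{group}] FROM EXTERNAL PROVIDER;",
        # Role that carries the permission, so users never receive it directly.
        f"CREATE ROLE [{role}];",
        # SELECT on the schema covers all of its tables and views.
        f"GRANT SELECT ON SCHEMA::[{schema}] TO [{role}];",
        # Role membership for the group's database user.
        f"EXEC sp_addrolemember '{role}', '{group}';",
    ]
```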

NEW QUESTION 272

……
