Valid DP-203 dumps shared by PassLeader to help you pass the DP-203 exam! PassLeader now offers the newest DP-203 VCE dumps and DP-203 PDF dumps. The PassLeader DP-203 exam questions have been updated and the answers have been corrected. Get the newest PassLeader DP-203 dumps with VCE and PDF here: https://www.passleader.com/dp-203.html (397 Q&As Dumps –> 428 Q&As Dumps)

BTW, DOWNLOAD part of PassLeader DP-203 dumps from Cloud Storage: https://drive.google.com/drive/folders/1wVv0mD76twXncB9uqhbqcNPWhkOeJY0s

NEW QUESTION 261

You are working on Azure Data Lake Store Gen1. Suddenly, you realize that you need to know the schema of the external data. Which of the following plug-ins would you use to determine the external data schema?

A. ipv4_lookup

B. mysql_request

C. pivot

D. narrow

E. infer_storage_schema

Answer: E

Explanation:

infer_storage_schema is the plug-in that infers the schema from the contents of the external files when the external data schema is unknown. It returns the inferred schema as a CSL schema string.

Incorrect:

Not A. The ipv4_lookup plugin checks for an IPv4 value in a lookup table and returns the matched rows.

Not B. The mysql_request plugin transfers a SQL query to a MySQL Server network endpoint and returns the 1st row set in the result.

Not C. The pivot plug-in rotates a table by turning the unique values from one column of the input table into multiple columns in the output table, and performs aggregations where required on any remaining column values that are wanted in the final output.

Not D. The narrow plug-in is used to unpivot a wide table into a table with only three columns.

https://docs.microsoft.com/en-us/azure/data-explorer/kusto/management/external-tables-azurestorage-azuredatalake

https://docs.microsoft.com/en-us/azure/data-explorer/kusto/query/inferstorageschemaplugin

NEW QUESTION 262

You work in Azure Synapse Analytics dedicated SQL pool that has a table titled Pilots. Now you want to restrict the user access in such a way that users in ‘IndianAnalyst’ role can see only the pilots from India. Which of the following would you add to the solution?

A. Table partitions.

B. Encryption.

C. Column-level security.

D. Row-level security.

E. Data masking.

Answer: D

Explanation:

Row-level security gives fine-grained access to the rows in a database table, so you can control who can access which rows of data. In this scenario, we need to restrict access on a row basis, i.e. only to the pilots from India; therefore row-level security is the right solution.
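The idea can be illustrated with a toy Python model. This is only a sketch of row filtering by role, not the T-SQL security policy itself; the `Pilot` class, the `ROLE_COUNTRY` mapping, and the sample names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pilot:
    name: str
    country: str


# Hypothetical mapping from a role to the only country its members may see.
ROLE_COUNTRY = {"IndianAnalyst": "India"}


def visible_rows(rows, role):
    """Return only the rows that the given role is allowed to see."""
    allowed = ROLE_COUNTRY.get(role)
    if allowed is None:
        # Assumption of this toy model: unrestricted roles see every row.
        return list(rows)
    return [row for row in rows if row.country == allowed]


pilots = [Pilot("Asha", "India"), Pilot("Tom", "USA"), Pilot("Ravi", "India")]
print([p.name for p in visible_rows(pilots, "IndianAnalyst")])
```

The table itself is unchanged; only the set of rows returned to the caller depends on the role.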

Incorrect:

Not A. Table partitions are generally used to group similar data.

Not B. Encryption is used for security purposes.

Not C. Column-level security is used to restrict data access at the column level. In the given scenario, we need to restrict access at the row level.

Not E. Sensitive data exposure can be limited by masking it to unauthorized users using SQL Database dynamic data masking.

https://azure.microsoft.com/en-in/resources/videos/row-level-security-in-azure-sql-database/

https://techcommunity.microsoft.com/t5/azure-synapse-analytics/how-to-implement-row-level-security-in-serverless-sql-pools/ba-p/2354759

NEW QUESTION 263

The partition key determines how Azure Storage load balances entities, messages, and blobs across servers to meet the traffic requirements of these objects. Which of the following represents the partition key for a blob?

A. account name + table name + blob name

B. account name + container name + blob name

C. account name + queue name + blob name

D. account name + table name + partition key

E. account name + queue name

Answer: B

Explanation:

For a blob, the partition key consists of account name + container name + blob name. Data is partitioned into ranges using these partition keys and these ranges are load balanced throughout the system.

Incorrect:

Not D. For an entity in a table, the partition key includes the table name and the partition key.

Not E. For a message in a queue, the queue name is the partition key itself.
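As a small illustration, the three key compositions described above can be written as Python helpers. This is a sketch for memorization only; the function names and sample values are hypothetical, and the keys are modeled as plain tuples rather than anything Azure Storage exposes.

```python
def blob_partition_key(account, container, blob):
    """Blob: account name + container name + blob name."""
    return (account, container, blob)


def entity_partition_key(table, partition_key):
    """Table entity: table name + partition key."""
    return (table, partition_key)


def message_partition_key(queue):
    """Queue message: the queue name alone."""
    return (queue,)


# Two blobs in the same container still get different partition keys,
# so they can be placed in different load-balanced ranges.
key_a = blob_partition_key("acct", "images", "a.png")
key_b = blob_partition_key("acct", "images", "b.png")
print(key_a != key_b)
```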

https://docs.microsoft.com/en-us/azure/architecture/best-practices/data-partitioning-strategies

NEW QUESTION 264

You need to design an enterprise data warehouse in Azure SQL Database with a table titled customers. You need to ensure that the customer support staff can identify customers by matching a few characters of their email addresses, but the full email addresses must not be visible to them. Which of the following would you include in the solution?

A. Row-level security.

B. Encryption.

C. Column-level security.

D. Dynamic data masking.

E. Any of the above can be used.

Answer: D

Explanation:

Dynamic data masking is helpful in preventing unauthorized access to sensitive data by empowering the clients to specify how much of the sensitive data to disclose with minimum impact on the application layer. In this policy-based security feature, the sensitive data is hidden in the output of a query over specified database fields, but there is no change in the data in the database. In the given scenario, there is a need to use Dynamic data masking to limit the sensitive data exposure to non-privileged users.
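A toy Python sketch of the behavior follows. It imitates the documented output pattern of the built-in email mask (`aXXX@XXXX.com`) but is not the database feature itself; `mask_email`, `read_email`, and the `has_unmask` flag are hypothetical names used for illustration.

```python
def mask_email(address: str) -> str:
    """Keep the first character and hide the rest of the address."""
    if not address:
        return address
    return f"{address[0]}XXX@XXXX.com"


def read_email(stored: str, has_unmask: bool) -> str:
    """Mask only in query output; the stored value is never modified."""
    return stored if has_unmask else mask_email(stored)


stored_value = "alice@contoso.com"
print(read_email(stored_value, has_unmask=False))
print(read_email(stored_value, has_unmask=True))
```

The stored value stays intact, which matches the point above that masking changes only what a query returns.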

Incorrect:

Not A. Row-level security restricts which rows a user can access, i.e. who can access which rows of data. It does not partially hide a value.

Not C. Column-level security won’t help in limiting the exposure of sensitive data.

https://docs.microsoft.com/en-us/azure/azure-sql/database/dynamic-data-masking-overview

NEW QUESTION 265

There are various analytical data stores that use different languages and models and provide different capabilities. Which of the following is a low-latency NoSQL data store that provides a high-performance and flexible option to query structured and semi-structured data?

A. Azure Synapse Analytics.

B. HBase.

C. Spark SQL.

D. Hive.

E. None of these.

Answer: B

Explanation:

HBase is a low-latency NoSQL data store that provides a high-performance and flexible option to query structured and semi-structured data. The primary data model used by HBase is the Wide column store.

Incorrect:

Not A. Azure Synapse is a managed service based on SQL Server database technologies and is optimized for large-scale data warehousing workloads.

Not C. Spark SQL is an API built on Spark that supports the creation of data frames and tables that can be queried using SQL syntax.

Not D. It is HBase, not Hive, that is the low-latency NoSQL data store providing a high-performance and flexible option to query structured and semi-structured data.

https://docs.microsoft.com/en-us/azure/architecture/data-guide/big-data/batch-processing

NEW QUESTION 266

There are a number of different options for data serving storage in Azure. These options vary in the capabilities they offer. Which of the options below do not offer row-level security?

A. SQL Database

B. Azure Data Explorer

C. HBase/Phoenix on HDInsight

D. Hive LLAP on HDInsight

E. Azure Analysis Services

F. Cosmos DB

Answer: BF

Explanation:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/technology-choices/analytical-data-stores

NEW QUESTION 267

When you apply the Clean Missing Data module to a dataset, the Minimum missing value ratio and Maximum missing value ratio are two important factors in replacing the missing values. If the Maximum missing value ratio is set to 1, what does it mean?

A. missing values are cleaned only when 100% of the values in the column are missing

B. missing values are cleaned even if there is only one missing value

C. missing values are cleaned only when there is only one missing value

D. missing values won’t be cleaned

E. missing values are cleaned even if 100% of the values in the column are missing

Answer: E

Explanation:

The Maximum missing value ratio specifies the maximum ratio of missing values that can be present for the operation to be executed. By default, the Maximum missing value ratio is set to 1, which means that missing values are cleaned even if 100% of the values in the column are missing.

Incorrect:

Not A. The phrase "only when" misstates the meaning: 1 is an upper limit, not a required ratio.

Not B. Setting Minimum missing value ratio property to 0 actually means that missing values are cleaned even if there is only one missing value.

Not C. Minimum and Maximum missing value ratios talk only about minimum and maximum ratios, not a specific number.

Not D. The given statement is not right.
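The two ratios can be pictured as an inclusive range check on the fraction of missing values in a column. The following Python sketch assumes that reading; `should_clean` is a hypothetical helper, not part of the module, and `None` stands in for a missing value.

```python
def should_clean(column, min_ratio=0.0, max_ratio=1.0):
    """Decide whether a column qualifies for cleaning.

    Assumption: the column is cleaned when its fraction of missing
    values lies within [min_ratio, max_ratio], bounds included.
    """
    missing = sum(1 for value in column if value is None)
    if missing == 0:
        # Nothing to clean (this also covers an empty column).
        return False
    ratio = missing / len(column)
    return min_ratio <= ratio <= max_ratio


print(should_clean([None, None, None]))         # 100% missing, default max of 1
print(should_clean([1, None, 3, 4]))            # a single missing value, default min of 0
print(should_clean([None, None], max_ratio=0.5))
```

With the defaults (0 and 1), every column that has at least one missing value qualifies; lowering the maximum excludes columns that are mostly or entirely empty.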

https://docs.microsoft.com/en-us/azure/machine-learning/algorithm-module-reference/clean-missing-data

NEW QUESTION 268

On each file upload, Batch writes two log files to the compute node. These log files can be examined to learn more about a specific failure. What are these two files?

A. fileuploadin.txt and fileuploaderr.txt

B. fileuploadout.txt and fileuploadin.txt

C. fileuploadout.txt and fileuploaderr.txt

D. fileuploadout.JSON and fileuploaderr.JSON

E. fileupload.txt and fileuploadout.txt

Answer: C

Explanation:

When you upload a file, Batch writes two log files to the compute node: fileuploadout.txt and fileuploaderr.txt. These log files help you get information about a specific failure. In scenarios where no file upload was attempted, the fileuploadout.txt and fileuploaderr.txt log files don't exist.

https://docs.microsoft.com/en-us/azure/batch/batch-job-task-error-checking

NEW QUESTION 269

A famous online payment gateway provider is creating a new product where users can pay their credit card bills and earn reward coins. As part of compliance, they need to ensure that all the data, including credit card details and PII, is kept secure. This product is backed by a dedicated SQL pool in Azure Synapse Analytics. The major concern is that the database team that performs maintenance should not be able to view the customers' information. Which of the following is the best solution?

A. Implement Transparent data encryption.

B. Use Azure Defender for SQL.

C. Use Dynamic data masking (DDM).

D. Assign only SQL security manager role to maintenance team members.

Answer: C

Explanation:

Here there is a lot of critical data and personal information involved. Dynamic data masking is the best solution for this. Consider the case of credit card numbers; using DDM, we can actually hide the numbers in that particular column. For example, if the credit card number is 1234 5678 then the displayed value will be like XXXX XX78. Similarly, we can use masking for other data in other columns where PII is present. The maintenance team with limited permissions will only see the covered data and thus, the data is safe from exploitation.
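The credit card example can be sketched as a prefix/padding/suffix mask in Python. This is a toy function in the spirit of a partial mask, not the DDM feature itself; the name `partial_mask` and the handling of short values are assumptions.

```python
def partial_mask(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Expose `prefix` leading and `suffix` trailing characters around `padding`."""
    if prefix + suffix >= len(value):
        # Assumption: values too short to hide anything are fully masked.
        return padding
    head = value[:prefix]
    tail = value[len(value) - suffix:] if suffix else ""
    return f"{head}{padding}{tail}"


# Reproduces the example above: only the last two digits stay visible.
print(partial_mask("1234 5678", 0, "XXXX XX", 2))
```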

Incorrect:

Not A. Transparent data encryption is a method used by Azure in its relational database services for encrypting data at rest. This will not be the best solution here.

Not B. Azure Defender is mainly used to mitigate potential DB vulnerabilities and detect anomalous activities.

Not D. Assigning the SQL security manager role grants access to security feature configuration, including the ability to enable or disable DDM. This is exactly the opposite of what is required here.

https://docs.microsoft.com/en-us/azure/azure-sql/database/dynamic-data-masking-overview

NEW QUESTION 270

Your company has assigned you a serverless SQL pool development project. It should follow best practices and use optimized solutions. Which of the following solutions will help you increase performance? (Choose three.)

A. Use the same region for Azure Storage account and serverless SQL pool.

B. Convert CSV to Parquet.

C. Use CETAS.

D. Use Azure Storage throttling.

Answer: ABC

Explanation:

When the Azure Storage account and the serverless SQL pool are co-located, loading latency is reduced, so overall performance improves. When they are in different regions, data has to travel farther, which increases latency. Parquet is a compressed, columnar format, so Parquet files are smaller than the equivalent CSV files and take less time to read. CETAS is a parallel operation that creates external table metadata and exports the result of a SELECT query to a set of files in your storage account, which can improve query performance.

Incorrect:

Not D. When storage throttling is detected, the serverless SQL pool slows down its requests to storage, so performance decreases.

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-best-practices#serverless-sql-pool-development-best-practices

NEW QUESTION 271

You have a traditional data warehouse with a snowflake schema and row-oriented storage. Queries take considerable time and perform poorly. You plan to use clustered columnstore indexing. Will it improve query performance?

A. Yes

B. No

Answer: A

Explanation:

Most traditional data warehouses use row-oriented storage. Columnstore indexes, however, are the standard in modern data warehouses for storing and querying large data warehousing fact tables. Compared with a traditional row-oriented data warehouse, they offer two advantages:

– Up to 10x better query performance

– Up to 10x better data compression

https://docs.microsoft.com/en-us/sql/relational-databases/indexes/columnstore-indexes-overview?view=sql-server-ver15

NEW QUESTION 272

A famous fintech startup is setting up its data solution using Azure Synapse Analytics. As part of compliance, the company has decided that only the finance managers should be able to see the bank account number and not anyone else. Which of the following is best suited in this scenario?

A. Firewall rules to block IP.

B. Row-level security.

C. Column-level security.

D. Azure RBAC role.

Answer: C

Explanation:

Column-level security controls access to particular columns based on user membership. For sensitive data, we can decide which user or group can access a particular column. In this question, access to the bank account number column must be restricted, so column-level security is the right fit.

Incorrect:

Not A. A firewall rule will completely block access to the database.

Not B. Row-level security restricts access to rows, not to a single column, so it does not meet the requirement.

Not D. Azure RBAC cannot control access to a particular column.

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/column-level-security

NEW QUESTION 273

A famous IoT devices company collects the metadata about its sensors in the field as reference data. Which of the following services can be used as input for this type of data? (Choose two.)

A. Azure SQL

B. Blob Storage

C. Azure Event Hub

D. Azure IoT Hub

Answer: AB

Explanation:

Reference data is a finite data set that is static or, in some cases, changes slowly. Here the metadata values of the sensors change slowly and can therefore be treated as reference data, as the question states. Azure Blob Storage can serve as input for this type of data, with the data modeled as a sequence of blobs in ascending order of the date/time specified in the blob name. Similarly, Azure SQL can also serve as a reference data input.
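One way to picture "a sequence of blobs in ascending order of date/time" is that, at any moment, the effective snapshot is the newest blob whose encoded timestamp has already passed. The Python sketch below assumes that reading; the path layout `sensors/YYYY-MM-DD/HH-MM/meta.csv` and the function name are hypothetical.

```python
from datetime import datetime


def pick_reference_blob(blob_names, now):
    """Return the newest blob whose encoded timestamp is not after `now`."""
    candidates = []
    for name in blob_names:
        # Hypothetical naming scheme: sensors/YYYY-MM-DD/HH-MM/meta.csv
        _, date_part, time_part, _ = name.split("/")
        stamp = datetime.strptime(f"{date_part} {time_part}", "%Y-%m-%d %H-%M")
        if stamp <= now:
            candidates.append((stamp, name))
    # max() compares the timestamps first, so the latest snapshot wins.
    return max(candidates)[1] if candidates else None


blobs = [
    "sensors/2024-01-01/09-00/meta.csv",
    "sensors/2024-01-01/12-00/meta.csv",
    "sensors/2024-01-02/09-00/meta.csv",
]
print(pick_reference_blob(blobs, datetime(2024, 1, 1, 13, 0)))
```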

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-use-reference-data

NEW QUESTION 274

Bryan needs an init script that runs a bootstrap script during the startup of a Databricks Spark driver or worker node. Which kind of init script can he choose?

A. Global.

B. Job.

C. Cluster-scoped.

D. None of the above.

Answer: C

Explanation:

A cluster-scoped init script runs on every Databricks cluster that is configured with it.

Incorrect:

Not A, because the global type of init script can’t execute on model serving clusters, and it only works on clusters on the same workspace.

Not B, because there is no init script type called Job in Databricks.

https://docs.microsoft.com/en-us/azure/databricks/clusters/init-scripts

NEW QUESTION 275

You have an Azure Synapse Analytics dedicated SQL pool named Pool1. Pool1 contains a table named table1. You load 5TB of data into table1. You need to ensure that columnstore compression is maximized for table1. Which statement should you execute?

A. ALTER INDEX ALL on table1 REORGANIZE

B. ALTER INDEX ALL on table1 REBUILD

C. DBCC DBREINDEX (table1)

D. DBCC INDEXDEFRAG (pool1,table1)

Answer: B

NEW QUESTION 276

You plan to create a dimension table in Azure Synapse Analytics that will be less than 1 GB. You need to create the table to meet the following requirements:

– Provide the fastest Query time.

– Minimize data movement during queries.

Which type of table should you use?

A. hash distributed

B. heap

C. replicated

D. round-robin

Answer: C

NEW QUESTION 277

You have an Azure data factory named ADF1. You currently publish all pipeline authoring changes directly to ADF1. You need to implement version control for the changes made to pipeline artifacts. The solution must ensure that you can apply version control to the resources currently defined in the UX Authoring canvas for ADF1. Which two actions should you perform? (Each correct answer presents part of the solution. Choose two.)

A. Create an Azure Data Factory trigger.

B. From the UX Authoring canvas, select Set up code repository.

C. Create a GitHub action.

D. From the UX Authoring canvas, run Publish All.

E. Create a Git repository.

F. From the UX Authoring canvas, select Publish.

Answer: BE

NEW QUESTION 278

You have an Azure Synapse Analytics dedicated SQL pool. You need to create a fact table named Table1 that will store sales data from the last three years. The solution must be optimized for the following query operations:

– Show order counts by week.

– Calculate sales totals by region.

– Calculate sales totals by product.

– Find all the orders from a given month.

Which data should you use to partition Table1?

A. region

B. product

C. week

D. month

Answer: C

NEW QUESTION 279

Nicole is migrating on-premises SQL Server databases to tables in Azure SQL Data Warehouse (Synapse dedicated SQL pools). The tables in the dedicated SQL pools of Synapse Analytics require partitioning, and she is designing the SQL table partitions for this migration to Azure Synapse. The partitions of the tables already contain data, and she is looking for the most efficient method to split the partitions in the dedicated SQL pool tables. Which T-SQL statement can she use to split partitions that contain data?

A. CTAS

B. CETAS

C. OPENROWSET

D. Clustered Columnstore Indexes

Answer: A

Explanation:

CTAS is the most efficient method for splitting partitions that contain data.

Incorrect:

Not B, because CETAS is used in the dedicated SQL pool of Synapse to create an external table and export data in parallel to Hadoop, Azure Blob Storage, and ADLS Gen2.

Not C, because the OPENROWSET function in Synapse SQL reads the content of the file(s) from a data source and returns the content as a set of rows.

Not D, because Clustered Columnstore Indexes offer both the highest level of data compression and the best overall query performance. It doesn’t help in partition splitting.

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-partition

NEW QUESTION 280

Jeffrey is working on a complex event processing streaming solution for an IoT platform. It is a hybrid cloud platform where a few data sources are transformed into an on-premises Big Data platform. The on-premises data center and Azure services are connected via a virtual network gateway. Which resources can he choose for this on-premises Big Data platform, which is connected to Azure via the virtual network gateway and must handle complex data processing and run UDF jobs in Java?

A. Spark Structured Streaming/Apache Storm.

B. Apache Ignite.

C. Apache Airflow.

D. Apache Kafka.

E. None of the above.

Answer: A

Explanation:

Spark Structured Streaming/Apache Storm can be used for complex event processing of real-time data streams on-premises.

Incorrect:

Not B, because Apache Ignite can't help with real-time event processing that involves UDF jobs.

Not C, because Apache Airflow is a platform for programmatically authoring and monitoring workflows.

Not D, because Apache Kafka is used for publishing and consuming event streams in pub-sub scenarios.

https://docs.microsoft.com/en-us/azure/stream-analytics/streaming-technologies

NEW QUESTION 281

HotSpot

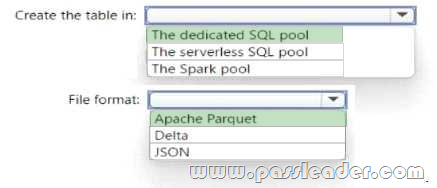

You have an Azure Synapse Analytics serverless SQL pool, an Azure Synapse Analytics dedicated SQL pool, an Apache Spark pool, and an Azure Data Lake Storage Gen2 account. You need to create a table in a lake database. The table must be available to both the serverless SQL pool and the Spark pool. Where should you create the table, and which file format should you use for data in the table? (To answer, select the appropriate options in the answer area.)

NEW QUESTION 282

Drag and Drop

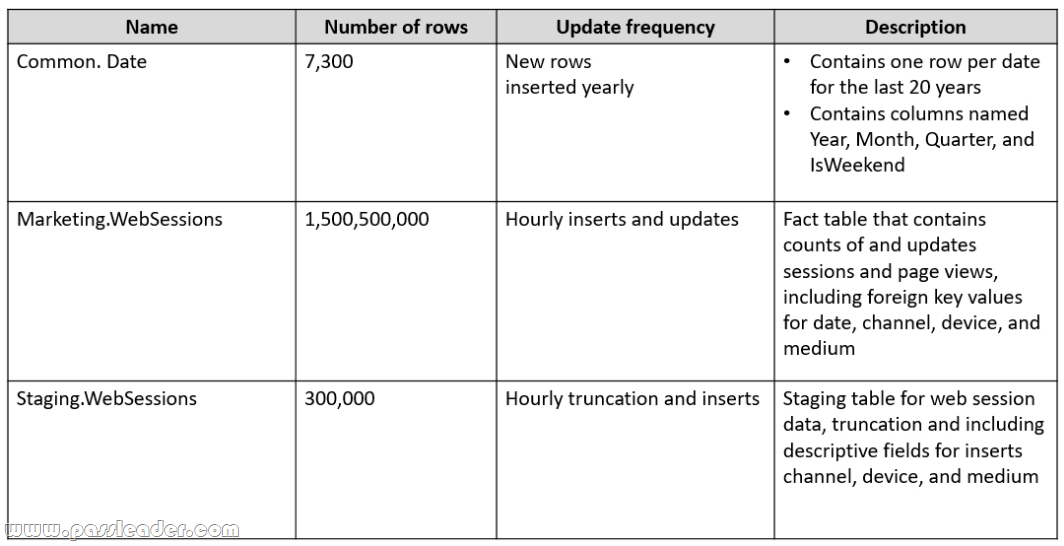

You have an Azure subscription. You plan to build a data warehouse in an Azure Synapse Analytics dedicated SQL pool named pool1 that will contain staging tables and a dimensional model. Pool1 will contain the following tables:

You need to design the table storage for pool1. The solution must meet the following requirements:

– Maximize the performance of data loading operations to Staging.WebSessions.

– Minimize query times for reporting queries against the dimensional model.

Which type of table distribution should you use for each table? (To answer, drag the appropriate table distribution types to the correct tables. Each table distribution type may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.)

NEW QUESTION 283

……
