Valid DP-420 dumps shared by PassLeader to help you pass the DP-420 exam! PassLeader now offers the newest DP-420 VCE dumps and DP-420 PDF dumps. The PassLeader DP-420 exam questions have been updated and the answers have been corrected. Get the newest PassLeader DP-420 dumps with VCE and PDF here: https://www.passleader.com/dp-420.html (121 Q&As Dumps –> 166 Q&As Dumps)

BTW, DOWNLOAD part of PassLeader DP-420 dumps from Cloud Storage: https://drive.google.com/drive/folders/1qvgrCO1nEUsdVBxsTeTM6_ABMTnzF21L

NEW QUESTION 1

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account. You need to make the contents of container1 available as reference data for an Azure Stream Analytics job.

Solution: You create an Azure Data Factory pipeline that uses Azure Cosmos DB Core (SQL) API as the input and Azure Blob Storage as the output.

Does this meet the goal?

A. Yes

B. No

Answer: B

Explanation:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/changefeed-ecommerce-solution

NEW QUESTION 2

You plan to create an Azure Cosmos DB Core (SQL) API account that will use customer-managed keys stored in Azure Key Vault. You need to configure an access policy in Key Vault to allow Azure Cosmos DB access to the keys. Which three permissions should you enable in the access policy? (Each correct answer presents part of the solution. Choose three.)

A. Wrap Key

B. Get

C. List

D. Update

E. Sign

F. Verify

G. Unwrap Key

Answer: ABG

Explanation:

https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-setup-cmk

NEW QUESTION 3

You are troubleshooting the current issues caused by the application updates. Which action can address the application updates issue without affecting the functionality of the application?

A. Enable time to live for the con-product container.

B. Set the default consistency level of account1 to strong.

C. Set the default consistency level of account1 to bounded staleness.

D. Add a custom indexing policy to the con-product container.

Answer: C

Explanation:

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

NEW QUESTION 4

You need to implement a trigger in Azure Cosmos DB Core (SQL) API that will run before an item is inserted into a container. Which two actions should you perform to ensure that the trigger runs? (Each correct answer presents part of the solution. Choose two.)

A. Append pre to the name of the JavaScript function trigger.

B. For each create request, set the access condition in RequestOptions.

C. Register the trigger as a pre-trigger.

D. For each create request, set the consistency level to session in RequestOptions.

E. For each create request, set the trigger name in RequestOptions.

Answer: CE

Explanation:

C: When triggers are registered, you can specify the operations that they can run with.

E: When executing, pre-triggers are passed in the RequestOptions object by specifying PreTriggerInclude and then passing the name of the trigger in a List object.

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/how-to-use-stored-procedures-triggers-udfs
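The two conditions in the explanation above (registration as a pre-trigger, plus naming the trigger on each create request) can be illustrated with a toy in-memory model. This is only a sketch and not the Azure Cosmos DB SDK: `ToyTrigger`, `ToyContainer`, `add_status`, and the trigger name `trgAddStatus` are hypothetical, and the `pre_trigger_include` parameter merely mirrors the PreTriggerInclude option mentioned in the explanation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class ToyTrigger:
    name: str
    trigger_type: str  # "Pre" or "Post"
    body: Callable[[dict], dict]


@dataclass
class ToyContainer:
    """Hypothetical stand-in for a container; not a real SDK class."""
    triggers: Dict[str, ToyTrigger] = field(default_factory=dict)
    items: List[dict] = field(default_factory=list)

    def register_trigger(self, trigger: ToyTrigger) -> None:
        # Registration alone does not make the trigger run.
        self.triggers[trigger.name] = trigger

    def create_item(self, item: dict,
                    pre_trigger_include: Optional[List[str]] = None) -> dict:
        doc = dict(item)
        # Only triggers named on this specific request are executed.
        for name in pre_trigger_include or []:
            trigger = self.triggers[name]
            if trigger.trigger_type != "Pre":
                raise ValueError(f"{name} is not registered as a pre-trigger")
            doc = trigger.body(doc)
        self.items.append(doc)
        return doc


def add_status(doc: dict) -> dict:
    # Example trigger body: fill in a default field before the insert.
    return {**doc, "status": doc.get("status", "new")}
```

In this model, a create request that omits the trigger name stores the item unchanged, which matches the behavior the question is testing: the trigger is opt-in per request.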

NEW QUESTION 5

You have an application named App1 that reads the data in an Azure Cosmos DB Core (SQL) API account. App1 runs the same read queries every minute. The default consistency level for the account is set to eventual. You discover that every query consumes request units (RUs) instead of using the cache. You verify the IntegratedCacheItemHitRate metric and the IntegratedCacheQueryHitRate metric. Both metrics have values of 0. You verify that the dedicated gateway cluster is provisioned and used in the connection string. You need to ensure that App1 uses the Azure Cosmos DB integrated cache. What should you configure?

A. the indexing policy of the Azure Cosmos DB container

B. the consistency level of the requests from App1

C. the connectivity mode of the App1 CosmosClient

D. the default consistency level of the Azure Cosmos DB account

Answer: C

Explanation:

Because the integrated cache is specific to your Azure Cosmos DB account and requires significant CPU and memory, it requires a dedicated gateway node. Connect to Azure Cosmos DB using gateway mode.

https://docs.microsoft.com/en-us/azure/cosmos-db/integrated-cache-faq

NEW QUESTION 6

You need to select the partition key for con-iot1. The solution must meet the IoT telemetry requirements. What should you select?

A. the timestamp

B. the humidity

C. the temperature

D. the device ID

Answer: D

Explanation:

https://docs.microsoft.com/en-us/azure/architecture/solution-ideas/articles/iot-using-cosmos-db

NEW QUESTION 7

You have an Azure Cosmos DB Core (SQL) API account that is used by 10 web apps. You need to analyze the data stored in the account by using Apache Spark to create machine learning models. The solution must NOT affect the performance of the web apps. Which two actions should you perform? (Each correct answer presents part of the solution. Choose two.)

A. In an Apache Spark pool in Azure Synapse, create a table that uses cosmos.olap as the data source.

B. Create a private endpoint connection to the account.

C. In an Azure Synapse Analytics serverless SQL pool, create a view that uses OPENROWSET and the CosmosDB provider.

D. Enable Azure Synapse Link for the account and Analytical store on the container.

E. In an Apache Spark pool in Azure Synapse, create a table that uses cosmos.oltp as the data source.

Answer: AD

Explanation:

https://github.com/microsoft/MCW-Cosmos-DB-Real-Time-Advanced-Analytics/blob/main/Hands-on%20lab/HOL%20step-by%20step%20-%20Cosmos%20DB%20real-time%20advanced%20analytics.md

NEW QUESTION 8

You are implementing an Azure Data Factory data flow that will use an Azure Cosmos DB (SQL API) sink to write a dataset. The data flow will use 2,000 Apache Spark partitions. You need to ensure that the ingestion from each Spark partition is balanced to optimize throughput. Which sink setting should you configure?

A. Throughput.

B. Write throughput budget.

C. Batch size.

D. Collection action.

Answer: C

Explanation:

Batch size: An integer that represents how many objects are written to the Cosmos DB collection in each batch. Usually, starting with the default batch size is sufficient. To tune this value further, note that Cosmos DB limits the size of a single request to 2MB. The formula is “Request Size = Single Document Size * Batch Size”. If you hit an error saying “Request size is too large”, reduce the batch size value. The larger the batch size, the better the throughput the service can achieve, but make sure you allocate enough RUs to support your workload.

Incorrect:

Not A: Throughput: Set an optional value for the number of RUs you’d like to apply to your CosmosDB collection for each execution of this data flow. Minimum is 400.

Not B: Write throughput budget: An integer that represents the RUs you want to allocate for this Data Flow write operation, out of the total throughput allocated to the collection.

Not D: Collection action: Determines whether to recreate the destination collection prior to writing.

– None: No action will be done to the collection.

– Recreate: The collection will get dropped and recreated.

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-cosmos-db
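The batch size formula quoted in the explanation above can be worked through with a small helper. This is a rough sketch under stated assumptions: the function names are hypothetical, "2MB" is interpreted as 2 * 1024 * 1024 bytes, and request overhead beyond the document payload is ignored.

```python
# Assumption: the "2MB" request limit is treated as 2 MiB of payload.
MAX_REQUEST_BYTES = 2 * 1024 * 1024


def request_size(document_bytes: int, batch_size: int) -> int:
    """Request Size = Single Document Size * Batch Size."""
    return document_bytes * batch_size


def max_batch_size(document_bytes: int,
                   limit_bytes: int = MAX_REQUEST_BYTES) -> int:
    """Largest batch size whose request size stays within the limit."""
    if document_bytes <= 0:
        raise ValueError("document_bytes must be positive")
    return limit_bytes // document_bytes
```

For example, with 4,096-byte documents this gives `max_batch_size(4096) == 512`; a batch of 513 such documents would exceed the limit, which is when a “Request size is too large” error would be expected.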

NEW QUESTION 9

You need to identify which connectivity mode to use when implementing App2. The solution must support the planned changes and meet the business requirements. Which connectivity mode should you identify?

A. Direct mode over HTTPS

B. Gateway mode (using HTTPS)

C. Direct mode over TCP

Answer: C

Explanation:

https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-configure-private-endpoints

NEW QUESTION 10

You configure multi-region writes for account1. You need to ensure that App1 supports the new configuration for account1. The solution must meet the business requirements and the product catalog requirements. What should you do?

A. Set the default consistency level of account1 to bounded staleness.

B. Create a private endpoint connection.

C. Modify the connection policy of App1.

D. Increase the number of request units per second (RU/s) allocated to the con-product and con-productVendor containers.

Answer: D

Explanation:

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

NEW QUESTION 11

You need to provide a solution for the Azure Functions notifications following updates to con-product. The solution must meet the business requirements and the product catalog requirements. Which two actions should you perform? (Each correct answer presents part of the solution. Choose two.)

A. Configure the trigger for each function to use a different leaseCollectionPrefix.

B. Configure the trigger for each function to use the same leaseCollectionName.

C. Configure the trigger for each function to use a different leaseCollectionName.

D. Configure the trigger for each function to use the same leaseCollectionPrefix.

Answer: AB

Explanation:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-cosmosdb-v2-trigger

NEW QUESTION 12

HotSpot

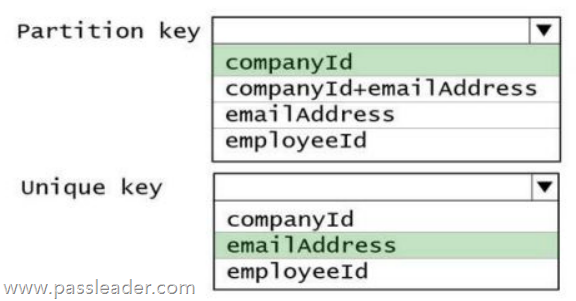

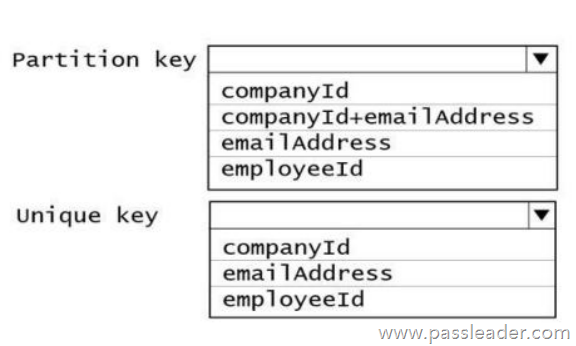

You have a database in an Azure Cosmos DB Core (SQL) API account. You plan to create a container that will store employee data for 5,000 small businesses. Each business will have up to 25 employees. Each employee item will have an emailAddress value. You need to ensure that the emailAddress value for each employee within the same company is unique. To what should you set the partition key and the unique key? (To answer, select the appropriate options in the answer area.)

Explanation:

https://docs.microsoft.com/en-us/azure/cosmos-db/unique-keys
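Unique keys in Azure Cosmos DB are enforced within a logical partition, so one configuration consistent with the requirement is to partition by company and make the email address the unique key. The toy model below sketches that scoping. It is not the SDK: `ToyEmployeeContainer` and the `companyId` property name are hypothetical (only `emailAddress` comes from the question).

```python
class UniqueKeyViolation(Exception):
    """Raised when a unique key value repeats inside one logical partition."""


class ToyEmployeeContainer:
    """Hypothetical in-memory model of per-partition unique key enforcement."""

    def __init__(self, partition_key: str = "companyId",
                 unique_key: str = "emailAddress") -> None:
        self.partition_key = partition_key
        self.unique_key = unique_key
        self._seen = set()
        self.items = []

    def create_item(self, item: dict) -> None:
        # Uniqueness is checked on (partition key value, unique key value),
        # so the same email may appear under a different company.
        scope = (item[self.partition_key], item[self.unique_key])
        if scope in self._seen:
            raise UniqueKeyViolation(f"duplicate {self.unique_key} in partition")
        self._seen.add(scope)
        self.items.append(item)
```

In this model the same `emailAddress` is accepted for two different companies but rejected when repeated within one company.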

NEW QUESTION 13

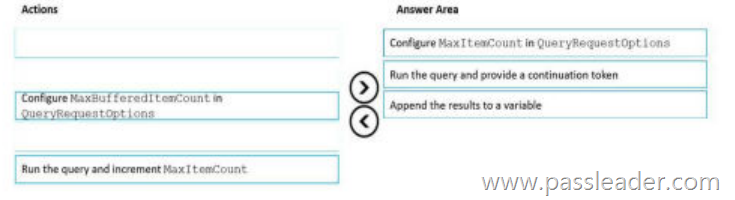

Drag and Drop

You have an app that stores data in an Azure Cosmos DB Core (SQL) API account. The app performs queries that return large result sets. You need to return a complete result set to the app by using pagination. Each page of results must return 80 items. Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Explanation:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/sql-query-pagination
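The general shape of continuation-token pagination with a page size of 80 can be sketched with a toy loop. This is an illustration only: `fetch_page` and `read_all` are hypothetical, the result set is a plain Python list, and the token here is just a stringified offset, whereas real Cosmos DB continuation tokens are opaque values returned by the service.

```python
from typing import List, Optional, Tuple

PAGE_SIZE = 80  # each page must return 80 items


def fetch_page(results: List[int], page_size: int,
               continuation: Optional[str]) -> Tuple[List[int], Optional[str]]:
    """Return one page plus a token for the next page (None when finished)."""
    start = int(continuation) if continuation else 0
    page = results[start:start + page_size]
    next_start = start + len(page)
    token = str(next_start) if next_start < len(results) else None
    return page, token


def read_all(results: List[int], page_size: int = PAGE_SIZE) -> List[List[int]]:
    """Keep requesting pages, passing the token back, until none is returned."""
    pages = []
    token = None
    while True:
        page, token = fetch_page(results, page_size, token)
        pages.append(page)
        if token is None:
            break
    return pages
```

With 200 items, `read_all` yields pages of 80, 80, and 40 items; the loop ends when no continuation token comes back.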

NEW QUESTION 14

……
